Web site: www.cs.umd.edu/hcil/saphari

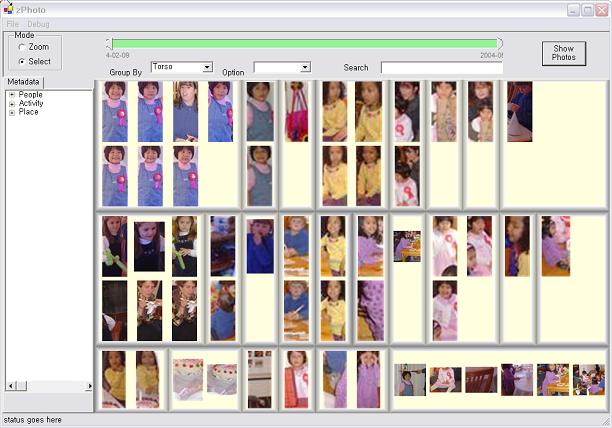

Annotation is important for personal photo collections because acquired metadata plays a crucial role in image management and retrieval. Bulk annotation, where multiple images are annotated at once, is a desired feature for image management tools because it reduces users' burden when making annotations.

SAPHARI (Semi-Automatic PHoto Annotation and Recognition Interface) automatically creates meaningful photo clusters for efficient bulk annotation. It integrates automatically detected metadata into a bulk annotation interface where users can manually correct errors. SAPHARI incorporates two automatic techniques: hierarchical event clustering and clothing-based human identification. Hierarchical event clustering provides multiple levels of "event" groups. For identifying people in photos, we introduce a new technique that uses clothing information rather than facial features.
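The description above does not specify how SAPHARI's hierarchical event clustering works. One common way to get multiple levels of events from a photo collection is to sort photos by capture time and split wherever the gap between consecutive photos exceeds a threshold; repeating this with several thresholds yields nested levels. The Python sketch below illustrates only that general idea. The gap thresholds, sample times, and the names `split_by_gap` and `hierarchical_events` are hypothetical and are not taken from SAPHARI.

```python
from datetime import datetime, timedelta


def split_by_gap(times, gap):
    """Split sorted datetimes wherever two consecutive photos are more than `gap` apart."""
    if not times:
        return []
    groups = [[times[0]]]
    for prev, cur in zip(times, times[1:]):
        if cur - prev > gap:
            groups.append([cur])    # large gap: start a new event
        else:
            groups[-1].append(cur)  # small gap: same event as the previous photo
    return groups


def hierarchical_events(times, gaps):
    """Cluster once per gap threshold, coarsest (largest gap) first.

    Every boundary found with a large gap is also a boundary with any smaller
    gap, so each coarse event is a union of finer events: a hierarchy.
    (Time-gap splitting is an assumed technique, not SAPHARI's published one.)
    """
    ordered = sorted(times)
    return [split_by_gap(ordered, g) for g in sorted(gaps, reverse=True)]


# Hypothetical capture times for four photos.
photos = [
    datetime(2008, 7, 4, 10, 0),
    datetime(2008, 7, 4, 10, 20),
    datetime(2008, 7, 4, 15, 0),
    datetime(2008, 7, 6, 9, 0),
]
levels = hierarchical_events(photos, [timedelta(hours=1), timedelta(days=1)])
print([[len(event) for event in level] for level in levels])  # [[3, 1], [2, 1, 1]]
```

At the one-day level the first three photos form a single event; at the one-hour level that event breaks into a morning pair and an afternoon photo, which is the kind of multi-level grouping a bulk annotation interface could present.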

Web site: www.cs.umd.edu/hcil/brqlayer

BRQLayer is an application designed to generate Bi-level Radial Quantum (BRQ) layouts, which consist of a primary region surrounded by any number of secondary regions (four, in the picture on the left). The thumbnails resize and the regions shift dynamically as the layout is resized and as the primary region is moved or resized. Applications include dynamic photo websites, browsing real estate listings, generation of large collages for conferences, and photo database navigation.

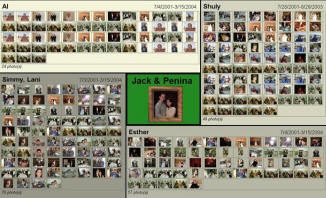

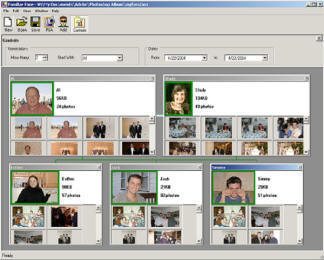

Web site: www.cs.umd.edu/hcil/photopres

Photo annotation is widely recognized to be simultaneously advantageous and time-consuming. By and large, users do not spend the effort required to do quality annotation, and as a result most photos end up in the computer equivalent of the photo shoebox.

SNAPshots are Selective, Navigable, Automated Presentations that are enabled by a collection of well-annotated photos. They are selective in that they show a particular subset of the photo collection, and navigable in that users can dynamically change the parameters of that subset. For example, FamiliarFace is a prototype application designed to motivate better annotation by providing an archetype application that is enabled by existing annotations. Users add family members one at a time and define relationships between them (parent, child, spouse, etc.). Once this is done, a presentation is automatically generated from the photos in the collection that contain the various people on the family tree.
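To make the selection step concrete, here is a minimal Python sketch of how existing people annotations and family-tree relationships could together pick a photo subset. It assumes annotations are stored as a mapping from photo file name to the set of people tagged in it, and relationships as `(person, relation, other)` triples; these data structures, the sample names, and the functions `relatives` and `select_photos` are hypothetical and do not describe FamiliarFace's actual implementation.

```python
def relatives(relations, person, kind):
    """People related to `person` by `kind`; a triple (a, kind, b) means "b is a's kind"."""
    return {b for a, k, b in relations if a == person and k == kind}


def select_photos(annotations, person, relations, kind):
    """Annotated photos showing `person` together with at least one relative of `kind`.

    `annotations` maps a photo name to the set of people tagged in it (assumed format).
    """
    wanted = relatives(relations, person, kind)
    return sorted(
        photo
        for photo, people in annotations.items()
        if person in people and people & wanted
    )


# Hypothetical family tree and annotations.
relations = [("Ann", "child", "Ben"), ("Ann", "child", "Cal"), ("Ann", "spouse", "Dan")]
annotations = {
    "p1.jpg": {"Ann", "Ben"},
    "p2.jpg": {"Ben", "Cal"},
    "p3.jpg": {"Ann", "Dan"},
    "p4.jpg": {"Ann", "Cal", "Dan"},
}
print(select_photos(annotations, "Ann", relations, "child"))   # ['p1.jpg', 'p4.jpg']
print(select_photos(annotations, "Ann", relations, "spouse"))  # ['p3.jpg', 'p4.jpg']
```

Changing `person` or `kind` re-runs the selection, which is one simple way to model the "navigable" property: the presentation's subset follows the parameters the user picks, and it only works as well as the underlying annotations.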

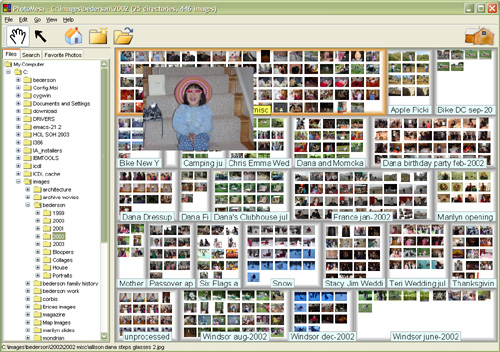

Web site: www.cs.umd.edu/hcil/photomesa

PhotoMesa is a zoomable image browser. It allows the user to view multiple directories of images in a zoomable environment, and uses a set of simple navigation mechanisms to move through the space of images. It also supports grouping of images by metadata available from the file system. It requires only a set of images on disk, and does not require the user to add any metadata, or manipulate the images at all before browsing, thus making it easy to get started with existing images.

While many image management systems have focused on annotating and searching, PhotoMesa concentrates on browsing. The interface lets you concentrate on the images without having to manage scrollbars, menus, or pop-up windows. It also lets you group images by available metadata, such as directory location, image creation date, and words in the filename. We built it to be useful in informal situations, such as family members looking at photos together. Others have found it useful as a way of finding out what is on their hard disk.
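Grouping by metadata that already exists in the file system needs no user annotation. The Python sketch below shows one way to build such groups from file paths alone; it is an illustration, not PhotoMesa's code. The function names and sample paths are hypothetical, and `by_date` assumes the file's modification time is an acceptable stand-in for "image creation date". Note that a photo can fall into several filename-word groups.

```python
import os
import re
from collections import defaultdict
from datetime import datetime
from pathlib import PurePath


def by_directory(path):
    """Group label: the directory that contains the image."""
    return [str(PurePath(path).parent)]


def by_date(path):
    """Group label: the day of the file's modification time (assumed proxy for creation date)."""
    return [datetime.fromtimestamp(os.path.getmtime(path)).date().isoformat()]


def by_filename_words(path):
    """Group labels: the words in the file name, splitting on anything that is not a letter."""
    words = re.split(r"[^A-Za-z]+", PurePath(path).stem)
    return sorted({w.lower() for w in words if w})


def group_by(paths, key):
    """Map each label returned by `key` to the list of paths carrying that label."""
    groups = defaultdict(list)
    for p in paths:
        for label in key(p):
            groups[label].append(p)
    return dict(groups)


# Hypothetical image paths; by_directory and by_filename_words do not touch the disk.
paths = ["trips/beach_day1.jpg", "trips/beach_day2.jpg", "family/xmas_tree.jpg"]
print(group_by(paths, by_directory))
print(sorted(group_by(paths, by_filename_words)))  # ['beach', 'day', 'tree', 'xmas']
```

Passing the key function as a parameter keeps the grouping logic independent of which metadata field the user chooses, so switching from directories to filename words is a matter of calling `group_by` with a different key.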

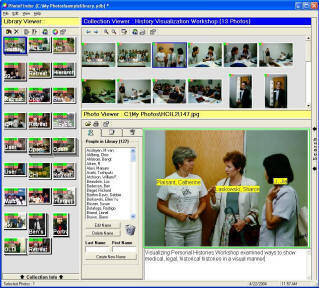

Web site: www.cs.umd.edu/hcil/photolib

The University of Maryland Human-Computer Interaction Laboratory has developed the PhotoFinder prototypes as part of its research effort on Personal Photo Libraries.

Our goal was to develop an understanding of user needs, appropriate tasks, and innovative designs for consumer users of digital photos. As digital cameras, scans of existing photos, PhotoCDs, and photos sent by email become more common, users will have to manage hundreds and then thousands of photos. Their goals are to view, explore, locate, reorganize, and then use photos of interest.