26 Virtual Memory I

Dr A. P. Shanthi

The objectives of this module are to discuss the concept of virtual memory and discuss the various implementations of virtual memory.

All of us are aware of the fact that our program needs to be available in main memory for the processor to execute it. Assume that your computer has something like 32 or 64 MB RAM available for the CPU to use. Unfortunately, that amount of RAM is not enough to run all of the programs that most users expect to run at once. For example, if you load the operating system, an e-mail program, a Web browser and a word processor into RAM simultaneously, 32 MB is not enough to hold all of them. If there were no such thing as virtual memory, you would not be able to run your programs unless some program was closed. With virtual memory, we do not view the program as one single piece. We divide it into pieces, and only the part that is currently being referenced by the processor needs to be available in main memory. The entire program is available on the hard disk. As the copying between the hard disk and main memory happens automatically, you don’t even know it is happening, and it makes your computer feel like it has unlimited RAM space even though it only has 32 MB installed. Because hard disk space is so much cheaper than RAM chips, it also has an economic benefit.

Techniques that automatically move program and data blocks into the physical main memory when they are required for execution are called virtual-memory techniques. Programs, and hence the processor, reference an instruction and data space that is independent of the available physical main memory space. The binary addresses that the processor issues for either instructions or data are called virtual or logical addresses. These addresses are translated into physical addresses by a combination of hardware and software components. If a virtual address refers to a part of the program or data space that is currently in the physical memory, then the contents of the appropriate location in the main memory are accessed immediately. On the other hand, if the referenced address is not in the main memory, its contents must be brought into a suitable location in the memory before they can be used.

Therefore, an address used by a programmer will be called a virtual address, and the set of such addresses the address space. An address in main memory is called a location or physical address. The set of such locations is called the memory space, which consists of the actual main memory locations directly addressable for processing.

As an example, consider a computer with a main-memory capacity of 32M words. Twenty-five bits are needed to specify a physical address in memory since 32M = $2^{25}$. Suppose that the computer has auxiliary memory available for storing $2^{35}$, that is, 32G words. Thus, the auxiliary memory has a capacity for storing information equivalent to the capacity of 1024 main memories. Denoting the address space by N and the memory space by M, we then have for this example N = 32 Giga words and M = 32 Mega words.
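The arithmetic in this example can be checked with a few lines of Python. This is only a minimal sketch: the helper name `address_bits` is an illustrative assumption, and it assumes sizes that are exact powers of two, as in the example.

```python
def address_bits(num_words: int) -> int:
    """Return the number of address bits needed for num_words locations.

    Assumes num_words is a positive power of two, as in the example.
    """
    if num_words <= 0 or num_words & (num_words - 1):
        raise ValueError("num_words must be a positive power of two")
    return num_words.bit_length() - 1


M = 32 * 2**20  # memory space: 32M words of main memory
N = 32 * 2**30  # address space: 32G words of auxiliary memory

physical_bits = address_bits(M)  # bits in a physical address
virtual_bits = address_bits(N)   # bits in a virtual address
ratio = N // M                   # how many main memories fit in N

print(physical_bits, virtual_bits, ratio)  # prints: 25 35 1024
```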

The portion of the program that is shifted between main memory and secondary storage can be of fixed size (pages) or of variable size (segments). Virtual memory also permits a program’s memory to be physically noncontiguous, so that every portion can be allocated wherever space is available. This facilitates process relocation. Virtual memory, apart from overcoming the main memory size limitation, allows sharing of main memory among processes. Thus, the virtual memory model decouples the addresses used by the program (virtual) from the memory addresses (physical). Therefore, the definition of virtual memory can be stated as, “The conceptual separation of user logical memory from physical memory in order to have large virtual memory on a small physical memory”. It gives an illusion of infinite storage, though the size of the virtual memory is limited by the size of the virtual address.

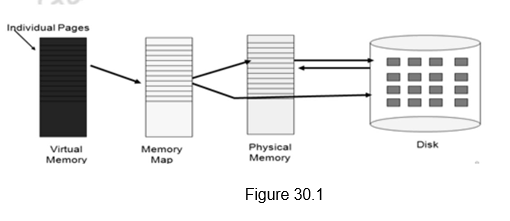

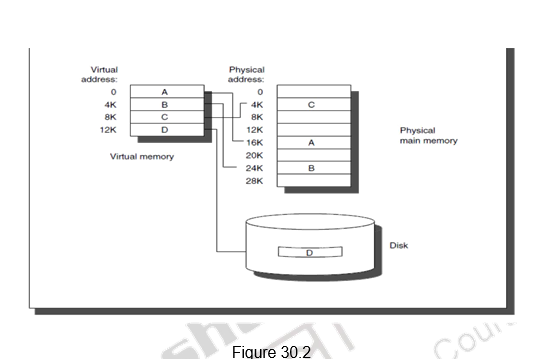

Even though the programs generate virtual addresses, these addresses cannot be used directly to access the physical memory. Therefore, the virtual to physical address translation has to be done. This is done by the memory management unit (MMU). The mapping is a dynamic operation, which means that every address is translated immediately as a word is referenced by the CPU. This concept is depicted diagrammatically in Figures 30.1 and 30.2. Figure 30.1 gives a general overview of the mapping between the logical addresses and physical addresses. Figure 30.2 shows how four different pages A, B, C and D are mapped. Note that, even though they are contiguous pages in the virtual space, they are not so in the physical space. Pages A, B and C are available in physical memory at non-contiguous locations, whereas page D is not available in physical storage.

Address mapping using Paging: The address mapping is simplified if the information in the address space and the memory space are each divided into groups of fixed size. The physical memory is broken down into groups of equal size called page frames and the logical memory is divided into pages of the same size. The programs are also considered to be split into pages. Pages commonly range from 2K to 16K bytes in length. They constitute the basic unit of information that is moved between the main memory and the disk whenever the translation mechanism determines that a move is required. Pages should not be too small, because the access time of a magnetic disk is much longer than the access time of the main memory. The reason for this is that it takes a considerable amount of time to locate the data on the disk, but once located, the data can be transferred at a rate of several megabytes per second. On the other hand, if pages are too large, it is possible that a substantial portion of a page may not be used, yet this unnecessary data will occupy valuable space in the main memory. If you consider a computer with an address space of 1M words and a memory space of 64K words, and if you split each into groups of 2K words, you will obtain $2^{9}$ (512) pages and thirty-two page frames. At any given time, up to thirty-two pages of address space may reside in main memory, in any one of the thirty-two page frames.

In order to do the mapping, the virtual address is represented by two numbers: a page number and an offset or line address within the page. In a computer with $2^{p}$ words per page, p bits are used to specify an offset and the remaining high-order bits of the virtual address specify the page number. In the example above, we considered a virtual address of 20 bits. Since each page consists of $2^{11}$ = 2K words, the high-order nine bits of the virtual address will specify one of the 512 pages and the low-order 11 bits give the offset within the page. Note that the line address in address space and memory space is the same; the only mapping required is from a page number to a block number.
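The split into page number and offset can be illustrated with bit operations. The sketch below uses the sizes from the example (20-bit virtual address, 64K-word memory, 2K-word pages); the function names and the sample address `0x12345` are assumptions chosen only for illustration.

```python
PAGE_OFFSET_BITS = 11        # 2^11 = 2K words per page
VIRTUAL_ADDRESS_BITS = 20    # 1M-word address space
PHYSICAL_ADDRESS_BITS = 16   # 64K-word memory space

NUM_PAGES = 1 << (VIRTUAL_ADDRESS_BITS - PAGE_OFFSET_BITS)    # 512 pages
NUM_FRAMES = 1 << (PHYSICAL_ADDRESS_BITS - PAGE_OFFSET_BITS)  # 32 page frames
OFFSET_MASK = (1 << PAGE_OFFSET_BITS) - 1


def split_virtual_address(virtual_address: int) -> tuple[int, int]:
    """Return (page number, offset) for a 20-bit virtual address."""
    if not 0 <= virtual_address < (1 << VIRTUAL_ADDRESS_BITS):
        raise ValueError("virtual address out of range")
    return virtual_address >> PAGE_OFFSET_BITS, virtual_address & OFFSET_MASK


def join_physical_address(frame_number: int, offset: int) -> int:
    """Combine a page frame number with the unchanged offset."""
    if not 0 <= frame_number < NUM_FRAMES:
        raise ValueError("frame number out of range")
    return (frame_number << PAGE_OFFSET_BITS) | offset


page, offset = split_virtual_address(0x12345)
print(page, offset)                           # prints: 36 837
print(hex(join_physical_address(5, offset)))  # prints: 0x2b45
```

Only the page number is replaced during translation; the offset is copied through unchanged, which is why the mapping needs one table entry per page rather than one per word.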

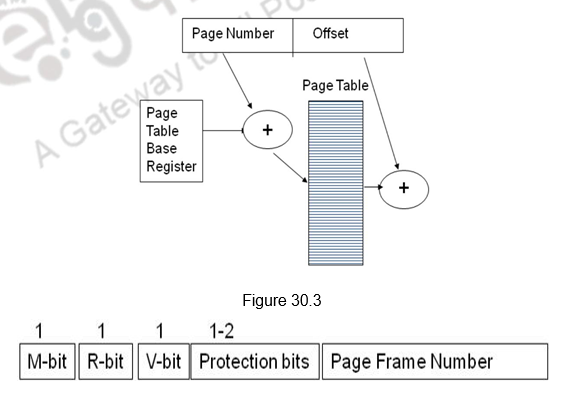

The mapping information between the pages and the page frames is available in a page table. The page table has as many entries as there are pages in the virtual address space. The base address of the page table is stored in a register called the Page Table Base Register (PTBR). Each process can have one or more of its own page tables and the operating system switches from one page table to another on a context switch, by loading a different address into the PTBR. The page number, which is part of the virtual address, is used to index into the appropriate page table entry. The page table entry contains the physical page frame address, if the page is available in main memory. Otherwise, it specifies where in secondary storage the page is available. In that case, the access generates a page fault and the operating system brings the requested page from secondary storage to main storage. Along with this address information, the page table entry also provides information about the privilege level associated with the page and the access rights of the page. This helps in providing protection to the page. The mapping process is indicated in Figure 30.3. Figure 30.4 shows a typical page table entry. The dirty or modified bit indicates whether the page was modified while it was resident in main memory.

Figure 30.4

- M – indicates whether the page has been written (dirty)

- R – indicates whether the page has been referenced (useful for replacement)

- V – Valid bit

- Protection bits – indicate what operations are allowed on this page

- Page Frame Number – indicates where in memory the page is
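To make the role of these fields concrete, here is a minimal sketch of a page table entry and a lookup that uses it. The field names, the single `writable` flag standing in for the protection bits, the `PageFault` exception and the 2K-word page size are all assumptions made for illustration; real entries pack these fields into a machine-specific bit layout.

```python
from dataclasses import dataclass

PAGE_OFFSET_BITS = 11  # assumed page size of 2K words


class PageFault(Exception):
    """Raised when the referenced page is not in main memory."""


@dataclass
class PageTableEntry:
    valid: bool = False       # V: page is present in main memory
    referenced: bool = False  # R: page has been referenced
    modified: bool = False    # M: page has been written (dirty)
    writable: bool = True     # simplified stand-in for the protection bits
    frame_number: int = 0     # page frame number


def translate(page_table, virtual_address, is_write=False):
    """Translate a virtual address, updating the R and M bits."""
    page = virtual_address >> PAGE_OFFSET_BITS
    offset = virtual_address & ((1 << PAGE_OFFSET_BITS) - 1)
    entry = page_table[page]
    if not entry.valid:
        raise PageFault(f"page {page} is not in main memory")
    if is_write and not entry.writable:
        raise PermissionError(f"page {page} is read-only")
    entry.referenced = True
    if is_write:
        entry.modified = True
    return (entry.frame_number << PAGE_OFFSET_BITS) | offset


page_table = [PageTableEntry() for _ in range(512)]
page_table[36] = PageTableEntry(valid=True, frame_number=5)

print(hex(translate(page_table, 0x12345, is_write=True)))  # prints: 0x2b45
print(page_table[36].modified)                             # prints: True
```

In this sketch a reference to any page whose valid bit is clear raises `PageFault`, which corresponds to the point at which the operating system would bring the page in from secondary storage.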

A virtual memory system is thus a combination of hardware and software techniques. The memory management software system handles all the software operations for the efficient utilization of memory space. It must decide the answers to the usual four questions in a hierarchical memory system:

- Q1: Where can a block be placed in the upper level?

- Q2: How is a block found if it is in the upper level?

- Q3: Which block should be replaced on a miss?

- Q4: What happens on a write?

The hardware mapping mechanism and the memory management software together constitute the architecture of a virtual memory and answer all these questions.

When a program starts execution, one or more pages are transferred into main memory and the page table is set to indicate their position. Thus, the page table entries help in identifying a page. The program is executed from main memory until it attempts to reference a page that is still in auxiliary memory. This condition is called a page fault. When a page fault occurs, the execution of the present program is suspended until the required page is brought into main memory. Since loading a page from auxiliary memory to main memory is basically an I/O operation, the operating system assigns this task to the I/O processor. In the meantime, control is transferred to the next program in memory that is waiting to be processed in the CPU. Later, when the memory block has been assigned and the transfer completed, the original program can resume its operation.

It should be noted that a write-back policy is always adopted, because of the long access times associated with the disk. Also, when a page fault is serviced, the memory may already be full. In this case, as we discussed for caches, a replacement has to be done. The replacement policies are again FIFO and LRU. The FIFO replacement policy has the advantage of being easy to implement. It has the disadvantage that under certain circumstances pages are removed from and loaded into memory too frequently. The LRU policy is more difficult to implement but has been more attractive on the assumption that the least recently used page is a better candidate for removal than the least recently loaded page, as in FIFO.

The LRU algorithm can be implemented by associating a counter with every page that is in main memory. When a page is referenced, its associated counter is set to zero. At fixed intervals of time, the counters associated with all pages presently in memory are incremented by 1. The least recently used page is the page with the highest count. The counters are often called aging registers, as their count indicates their age, that is, how long ago their associated pages were referenced.
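The two replacement policies can be compared with a small simulation. This is a sketch under stated assumptions: the function names and the reference string are made up for illustration, and the "fixed interval" at which the aging counters are incremented is taken to be one memory reference, which makes the counter scheme behave as exact LRU.

```python
from collections import deque


def simulate_fifo(references, num_frames):
    """Count page faults when the oldest loaded page is replaced."""
    frames = deque()  # resident pages in order of loading
    faults = 0
    for page in references:
        if page in frames:
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.popleft()  # evict the least recently loaded page
        frames.append(page)
    return faults


def simulate_lru_aging(references, num_frames):
    """Count page faults using one aging counter per resident page."""
    age = {}  # resident page -> aging counter
    faults = 0
    for page in references:
        if page not in age:
            faults += 1
            if len(age) == num_frames:
                victim = max(age, key=age.get)  # highest count = least recently used
                del age[victim]
        for resident in age:
            age[resident] += 1  # assumed interval: one tick per reference
        age[page] = 0           # referenced page: counter set to zero
    return faults


references = [1, 2, 3, 1, 4, 1, 5, 1, 2, 1, 3]
print(simulate_fifo(references, 3))       # prints: 8
print(simulate_lru_aging(references, 3))  # prints: 7
```

On this reference string, page 1 is used repeatedly; FIFO still evicts it because it was loaded first, while the aging counters keep it resident. Other reference strings can favour FIFO, so the result should not be read as a general ranking.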

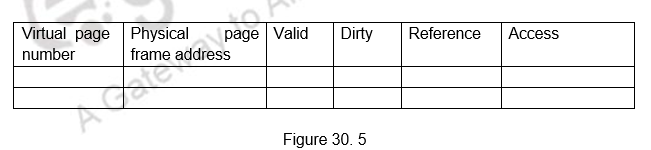

Drawback of Virtual memory: So far we have assumed that the page tables are stored in memory. Since the page table information is used by the MMU, which does the virtual to physical address translation for every read and write access, every memory access by a program can take at least twice as long: one memory access to obtain the physical address and a second access to get the data. So, ideally, the page table should be situated within the MMU. Unfortunately, the page table may be rather large, and since the MMU is normally implemented as part of the processor chip, it is impossible to include a complete page table on this chip. Therefore, the page table is kept in the main memory. However, a copy of a small portion of the page table can be accommodated within the MMU. This portion consists of the page table entries that correspond to the most recently accessed pages. A small cache, usually called the Translation Lookaside Buffer (TLB), is incorporated into the MMU for this purpose. The TLB stores the most recent logical to physical address translations. The operation of the TLB with respect to the page table in the main memory is essentially the same as the operation we have discussed in conjunction with the cache memory. Figure 30.5 shows a possible organization of a TLB where the associative mapping technique is used. Set-associative mapped TLBs are also found in commercial products. Apart from the physical address, the TLB gives information about the validity of the page, whether it is available in physical memory, its protection information, and so on.

An essential requirement is that the contents of the TLB be coherent with the contents of page tables in the memory. When the operating system changes the contents of page tables, it must simultaneously invalidate the corresponding entries in the TLB. The valid bit in the TLB is provided for this purpose. When an entry is invalidated, the TLB will acquire the new information as part of the MMU’s normal response to access misses.

With the introduction of the TLB, the address translation proceeds as follows. Given a virtual address, the MMU looks in the TLB for the referenced page. If the page table entry for this page is found in the TLB, the physical address is obtained immediately. If there is a miss in the TLB, then the required entry is obtained from the page table in the main memory and the TLB is updated.
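The translation flow with a TLB can be sketched as follows. The class name `TLB`, its two-entry capacity in the usage example and the LRU replacement inside the TLB are assumptions for illustration; the page table is modelled as a plain dictionary from page number to frame number, and every page is assumed to be resident so that no page faults occur.

```python
from collections import OrderedDict


class TLB:
    """Small fully associative TLB; LRU replacement is an assumption."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()  # page number -> frame number
        self.hits = 0
        self.misses = 0

    def lookup(self, page, page_table):
        """Return the frame number, consulting the page table on a miss."""
        if page in self.entries:
            self.hits += 1
            self.entries.move_to_end(page)  # mark as most recently used
            return self.entries[page]
        self.misses += 1
        frame = page_table[page]  # extra main-memory access on a miss
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
        self.entries[page] = frame
        return frame

    def invalidate(self, page):
        """Drop a stale entry after the page table has been changed."""
        self.entries.pop(page, None)


page_table = {0: 7, 1: 3, 2: 9}
tlb = TLB(capacity=2)
for page in [0, 1, 0, 1, 2, 0]:
    tlb.lookup(page, page_table)
print(tlb.hits, tlb.misses)  # prints: 2 4

# Keeping the TLB coherent: change the mapping, then invalidate the entry.
page_table[0] = 4
tlb.invalidate(0)
print(tlb.lookup(0, page_table))  # prints: 4
```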

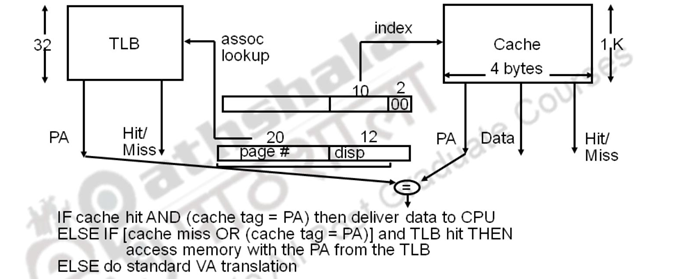

Recall that the caches need a physical address, unless we use virtual caches. As discussed with respect to cache optimizations, machines with TLBs go one step further to reduce the number of cycles per cache access. They overlap the cache access with the TLB access. That is, the high-order bits of the virtual address are used to look in the TLB while the low-order bits are used as an index into the cache. The flow is as shown below.

The overlapped access only works as long as the address bits used to index into the cache do not change as the result of virtual address translation, that is, as long as they all lie within the page offset. This usually limits the design to small caches, large page sizes, or, if you want a large cache, highly set-associative caches.
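The restriction can be stated as a simple check: the cache index and block offset bits fit inside the page offset exactly when the amount of cache covered by one way is no larger than a page. The function name and the example cache and page sizes below are illustrative assumptions, not figures from the text.

```python
def can_overlap_tlb_and_cache(cache_bytes, associativity, page_bytes):
    """Return True if the cache can be indexed using page-offset bits only.

    The index and block-offset bits together address one way of the cache,
    so the condition is: cache size / associativity <= page size.
    """
    bytes_per_way = cache_bytes // associativity
    return bytes_per_way <= page_bytes


KIB = 1024
print(can_overlap_tlb_and_cache(8 * KIB, 1, 4 * KIB))    # prints: False
print(can_overlap_tlb_and_cache(32 * KIB, 8, 4 * KIB))   # prints: True
print(can_overlap_tlb_and_cache(64 * KIB, 8, 4 * KIB))   # prints: False
print(can_overlap_tlb_and_cache(64 * KIB, 8, 16 * KIB))  # prints: True
```

The last two lines show the three options named above: for a fixed page size, a larger cache needs higher associativity, and a larger page size relaxes the limit.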

Finally, we shall have a word on the types of misses that can occur in a hierarchical memory system. This is again similar to the misses that we have already discussed with respect to cache memory. The misses are summarized as follows:

- Compulsory Misses:
  - Pages that have never been paged into memory before
  - How might we remove these misses?
    - Prefetching: loading them into memory before needed
    - Need to predict future somehow!
- Capacity Misses:
  - Not enough memory. Must somehow increase size.
  - Can we do this?
    - One option: Increase amount of DRAM
    - Another option: If multiple processes in memory: adjust percentage of memory allocated to each one!
- Conflict Misses:
  - Technically, conflict misses don’t exist in virtual memory, since it is a “fully-associative” cache
- Policy Misses:
  - Caused when pages were in memory, but kicked out prematurely because of the replacement policy
  - How to fix? Better replacement policy

To summarize, we have looked at the need for the concept of virtual memory. Virtual memory is a concept implemented using hardware and software. The restriction placed on the program size is not based on the RAM size, but on the virtual memory size. There are three different ways of implementing virtual memory. The MMU does the logical to physical address translation. Paging uses fixed-size pages to move between main memory and secondary storage. Paging uses page tables to map the logical addresses to physical addresses. Thus, virtual memory helps in dynamic allocation of the required data, sharing of data and providing protection. The TLB is used to store the most recent logical to physical address translations.