Youngmin Kim

Knight Capital Group, 545 Washington Blvd., Jersey City, NJ 07310

Email:

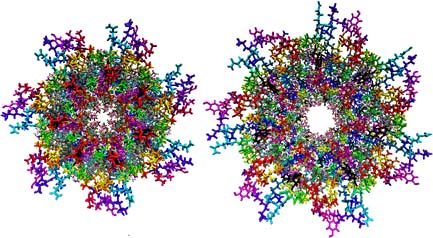

Mesh Saliency and Human Eye Fixations Y. Kim, A. Varshney, D. W. Jacobs, and F. Guimbretiere, ACM TAP, 2010. We present a user study that compares previous mesh saliency approaches with human eye movements. To quantify the correlation between mesh saliency and fixation locations for 3D rendered images, we introduce normalized chance-adjusted saliency, which improves on the previous chance-adjusted saliency measure. Our results show that the current computational model of mesh saliency models human eye movements significantly better than either a purely random model or a curvature-based model.
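As a rough illustration of the kind of measure discussed above, the sketch below computes a plain chance-adjusted score on a 2D saliency image. It assumes that "chance-adjusted saliency" means the mean saliency at fixated pixels minus the mean saliency expected at uniformly random pixels; the normalization introduced in the paper is not reproduced here, and the function name and toy data are hypothetical.

```python
def chance_adjusted_saliency(saliency_map, fixations):
    """Mean saliency at fixated pixels minus the mean over the whole image.

    Assumption: the whole-image mean stands in for the saliency expected
    at uniformly random fixation locations.
    """
    fixated = [saliency_map[row][col] for row, col in fixations]
    all_values = [v for row in saliency_map for v in row]
    mean_fixated = sum(fixated) / len(fixated)
    mean_chance = sum(all_values) / len(all_values)
    return mean_fixated - mean_chance


# Toy 3x3 saliency image with a single salient pixel in the middle
saliency_map = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0],
]
# Hypothetical fixations given as (row, col) pixel coordinates
score = chance_adjusted_saliency(saliency_map, fixations=[(1, 1), (0, 0)])
```

A positive score means the fixated pixels are, on average, more salient than a randomly chosen pixel; a score near zero means the saliency map predicts fixations no better than chance.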


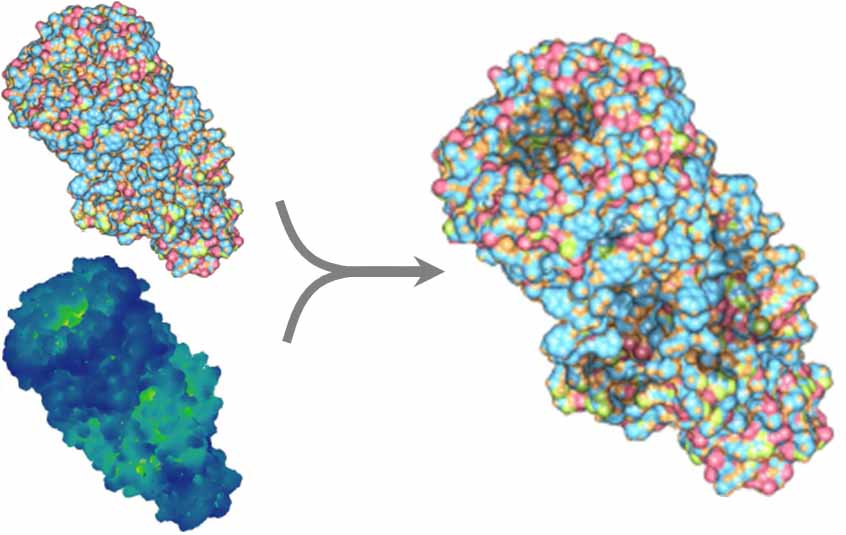

Salient Frame Detection for Molecular Dynamics Simulations R. Patro, A. Varshney, Y. Kim, C. Y. Ip, D. P. O'Leary, A. Anishkin, and S. Sukharev, Schloss Dagstuhl Scientific Visualization Workshop, 2009. Fast previewing of time-series datasets is important for summarization and abstraction in many fields. We represent a time-series sequence, such as a molecular dynamics simulation, as a matrix and analyze that matrix to detect salient time frames automatically. Our approach is especially effective at detecting non-repetitive salient frames.


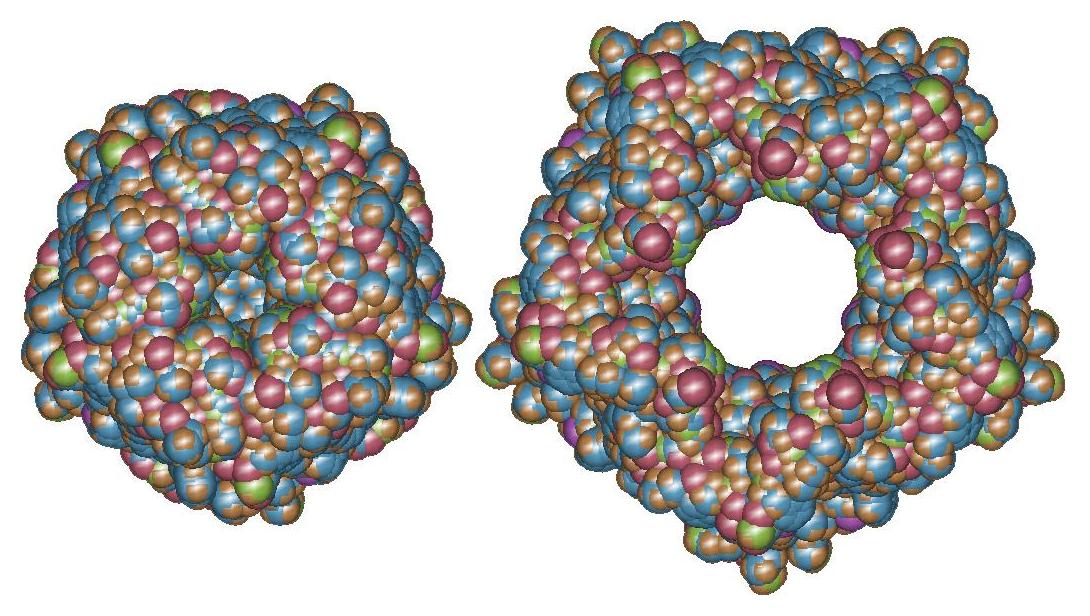

Saliency-guided Lighting for Molecular Graphics C. H. Lee, Y. Kim, and A. Varshney, IEICE TIS, 2009. Visualizing macromolecular surfaces is challenging because the spherical representation of atoms makes the surfaces rough. We explore how the saliency of 3D objects can guide lighting to emphasize important regions and suppress less important ones. Our salient lighting clearly distinguishes the central channel and the oblique clefts on the side of the E. coli membrane channel.


Second Life and the New Generation of Virtual Worlds S. Kumar, J. Chhugani, C. Kim, D. Kim, A. Nguyen, P. Dubey, C. Bienia, and Y. Kim, IEEE Computer, 2008. We present a detailed characterization and analysis of Second Life, one of the most popular virtual worlds today. Our measurements show that this application places significant demands on servers, clients, and the network. Our analysis shows that as virtual worlds evolve to support more complex worlds and phenomena, their requirements will grow considerably. Consequently, these applications will play an important role in the design of future computer systems.


CPU-GPU Cluster [Project Page, Video: 14MB] The Maryland CPU-GPU Cluster is a computational infrastructure that tightly couples CPUs, GPUs, displays, and storage in a single cluster. The GPU programming model approximates the streaming model of data computation. We use the CPU-GPU cluster and an LCD display wall for projects in graphics and scientific computing, including large-scale data visualization, rendering from compressed data, parallel rendering, and highly parallel matrix computations. My role in this project has been to explore multicore rendering from compressed data.


Persuading Visual Attention through Geometry Y. Kim and A. Varshney, IEEE TVCG, June 2008. Artists, illustrators, photographers, and cinematographers have long used the principles of contrast and composition to guide visual attention. We introduce geometry modification as a tool to persuasively direct visual attention. Our geometry-modification operator is based on center-surround mechanisms at multiple scales and inverts the saliency computation at each scale. We validate the effectiveness of our enhancement technique over the previous method through an eye-tracking-based user study.
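To make the center-surround idea concrete, here is a minimal sketch on a 1D signal rather than a mesh. It assumes the center-surround response at one scale is the absolute difference between a Gaussian-weighted average at `sigma` and one at `2 * sigma`, summed over a few scales; the function names, the scale set, and the truncation radius are illustrative choices, and the inversion step that the paper uses to modify geometry is not shown.

```python
import math


def gaussian_smooth(signal, sigma):
    """Gaussian-weighted average of a 1D signal, truncated at 2*sigma."""
    radius = max(1, int(math.ceil(2 * sigma)))
    out = []
    for i in range(len(signal)):
        total = 0.0
        weight_sum = 0.0
        lo = max(0, i - radius)
        hi = min(len(signal), i + radius + 1)
        for j in range(lo, hi):
            w = math.exp(-((i - j) ** 2) / (2 * sigma ** 2))
            total += w * signal[j]
            weight_sum += w
        out.append(total / weight_sum)
    return out


def center_surround(signal, sigma):
    """Absolute difference between a fine (sigma) and coarse (2*sigma) average."""
    center = gaussian_smooth(signal, sigma)
    surround = gaussian_smooth(signal, 2 * sigma)
    return [abs(c - s) for c, s in zip(center, surround)]


def multiscale_saliency(signal, sigmas=(1.0, 2.0, 4.0)):
    """Sum the center-surround responses over several scales (assumed scale set)."""
    total = [0.0] * len(signal)
    for sigma in sigmas:
        response = center_surround(signal, sigma)
        total = [t + r for t, r in zip(total, response)]
    return total


# A flat signal with a single bump at index 20
signal = [0.0] * 20 + [1.0] + [0.0] * 20
saliency = multiscale_saliency(signal)
peak_index = max(range(len(saliency)), key=saliency.__getitem__)
```

In this toy case the response peaks at the bump and is zero far away from it, which is the behavior a center-surround operator is meant to capture: regions that differ from their surroundings stand out.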


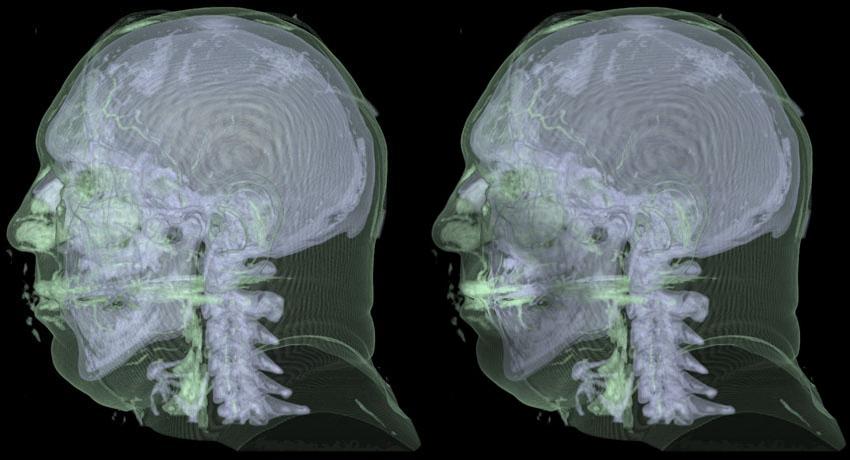

Saliency-guided Enhancement for Volume Visualization [Paper, BibTex, Project Page] Y. Kim and A. Varshney, IEEE TVCG, October 2006 (Proc. IEEE Visualization 2006). We present a visual-saliency-based operator to enhance selected regions of a volume. We show how to apply this operator to a user-specified saliency field to compute an emphasis field. We further discuss how the emphasis field can be integrated into the visualization pipeline by modifying regional luminance and chrominance. We validate our work using an eye-tracking-based user study.


Vertex-Transformation Streams [Paper,

BibTex,

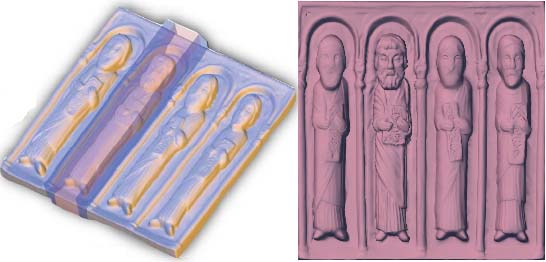

Project Page] Y. Kim, C.H. Lee, and A. Varshney, Graphical Models, July 2006. We introduce the idea of creating vertex and transformation streams that represent large point data sets via their interaction. We discuss how to factor such point datasets into a set of source vertices and transformation streams by identifying the most common translations amongst vertices. We validate our approach by integrating it with a view-dependent point rendering system and show significant improvements in input geometry bandwidth requirements as well as rendering frame rates. |
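The step of finding the most common translations amongst vertices can be sketched with a brute-force histogram of pairwise offsets. This is only an illustration, not the method from the paper: it assumes integer (already quantized) coordinates, treats a translation and its negation as the same offset, and costs quadratic time in the number of points. The function name is hypothetical.

```python
from collections import Counter
from itertools import combinations


def most_common_translations(points, top_k=3):
    """Count translation vectors between all point pairs; return the most frequent."""
    counts = Counter()
    for p, q in combinations(points, 2):
        delta = tuple(b - a for a, b in zip(p, q))
        negated = tuple(-d for d in delta)
        # Canonical direction so that p->q and q->p count as one translation
        if delta < negated:
            delta = negated
        counts[delta] += 1
    return counts.most_common(top_k)


# Points on a 3x3 integer grid in the z = 0 plane
points = [(x, y, 0) for x in range(3) for y in range(3)]
top = most_common_translations(points, top_k=2)
```

On the grid above, the two unit offsets along x and y each occur six times, so they are the natural candidates for translations that regenerate many points from a small set of source vertices.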


Unsupervised Learning Applied to Progressive Compression of Time-Dependent Geometry [Paper, BibTex, Project Page] T. Baby, Y. Kim, and A. Varshney, Computers and Graphics, June 2005. We propose a new approach to progressively compress time-dependent geometry. Our approach exploits correlations in motion vectors to achieve better compression. We use unsupervised learning techniques to detect good clusters of motion vectors. For each detected cluster, we build a hierarchy of motion vectors using pairwise agglomerative clustering, and succinctly encode the hierarchy using entropy encoding. We demonstrate our approach on a client-server system that we have built for downloading time-dependent geometry.
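The hierarchy-building step can be illustrated with a naive pairwise agglomerative clustering routine. The sketch assumes centroid linkage with squared Euclidean distance, which the description above does not specify, and it omits the initial cluster detection and the entropy encoding entirely. The function name and the sample vectors are hypothetical.

```python
def agglomerate(vectors):
    """Naive pairwise agglomerative clustering.

    Returns the merge hierarchy as nested tuples whose leaves are
    indices into `vectors`. Assumes centroid linkage.
    """
    # Each cluster is (tree, centroid, size)
    clusters = [(i, tuple(v), 1) for i, v in enumerate(vectors)]
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                ca, cb = clusters[a][1], clusters[b][1]
                dist = sum((x - y) ** 2 for x, y in zip(ca, cb))
                if best is None or dist < best[0]:
                    best = (dist, a, b)
        _, a, b = best
        (ta, ca, na), (tb, cb, nb) = clusters[a], clusters[b]
        # Size-weighted centroid of the merged cluster
        centroid = tuple((x * na + y * nb) / (na + nb) for x, y in zip(ca, cb))
        merged = ((ta, tb), centroid, na + nb)
        clusters = [c for i, c in enumerate(clusters) if i not in (a, b)] + [merged]
    return clusters[0][0]


# Two groups of similar 2D motion vectors
motion_vectors = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0), (11.0, 10.0)]
tree = agglomerate(motion_vectors)
```

Similar motion vectors end up as siblings low in the tree, so differences between a node and its parent tend to be small, which is what makes a hierarchy like this a reasonable input to an entropy coder.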


Visualization of Richtmyer-Meshkov Instability Simulation [Video: 16MB or 40MB] I have worked on visualizing the Richtmyer-Meshkov instability simulation conducted by Lawrence Livermore National Laboratory. The dataset has 273 time steps, each consisting of 7.5GB of data, for a total of about 2TB. I have implemented iso-surface extraction for this time-varying dataset using the marching cubes algorithm. Even on the 8-to-1 downsampled version, marching cubes returns up to 2.47M triangles. Extracting iso-surfaces from the entire dataset and rendering them at an interactive rate is a real challenge. We are currently exploring the visualization of this dataset and algorithms for parallel rendering using our CPU-GPU cluster.
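The dataset size quoted above follows from simple arithmetic, worked through below. The only assumption is the reading of "8-to-1" as an eightfold reduction in data per time step; the source does not spell out how the downsampling was done.

```python
time_steps = 273
gb_per_step = 7.5

total_gb = time_steps * gb_per_step        # 2047.5 GB
total_tb_decimal = total_gb / 1000         # about 2.05 TB if 1 TB = 1000 GB
total_tb_binary = total_gb / 1024          # about 2.00 TB if 1 TB = 1024 GB

# Assumption: "8-to-1" means each time step shrinks by a factor of 8
downsampled_gb_per_step = gb_per_step / 8  # 0.9375 GB per time step

print(f"{total_gb} GB total, {total_tb_binary:.2f} TB (binary)")
```

Under that assumption, even the downsampled version is close to 1GB per time step, which helps explain why interactive iso-surface extraction over all 273 steps is hard.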


Eyetracker Calibration [Details] Y. Kim, W. Wang, and F. Guimbretiere. We have improved the calibration of the eye-tracker used for my perception studies in graphics. We used the ISCAN ETL-500 eye-tracker, which records eye movements continuously at 60 Hz. The standard calibration of the ETL-500 uses four corner points and one center point. However, this was not sufficiently accurate for our purposes because of non-linearities in the eye-tracker-calibrated screen space. We therefore added a second, more densely sampled calibration step with 13 additional points. Most subjects were able to calibrate successfully to within an accuracy of 30 pixels (about 0.75 degrees).
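The relationship between a pixel error and a visual angle depends on the screen's pixel size and the viewing distance, neither of which is stated above. The sketch below shows the standard conversion using hypothetical values (0.264 mm pixels viewed from 600 mm) chosen only because they give a result close to the quoted figure; they are not the parameters of the actual setup.

```python
import math


def pixels_to_degrees(pixels, pixel_pitch_mm, viewing_distance_mm):
    """Visual angle in degrees subtended by a span of pixels on a flat screen."""
    size_mm = pixels * pixel_pitch_mm
    return math.degrees(2 * math.atan(size_mm / (2 * viewing_distance_mm)))


# Hypothetical setup, NOT taken from the study
error_deg = pixels_to_degrees(30, pixel_pitch_mm=0.264, viewing_distance_mm=600)
```

With these assumed values, a 30-pixel error corresponds to roughly three quarters of a degree, consistent with the accuracy reported above.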


Particle Growing [Presentation] Y. Kim, H. Song, A. Varshney, and I. Bitter. This was a semester-long project for the advanced graphics course in Spring 2005, in which I collaborated with Dr. Ingmar Bitter of NIH. We designed and implemented a new segmentation method named particle growing. It uses a speed image, each value of which determines how far a control point can advance per iteration. Compared to other segmentation methods, it is very fast for segmenting convex regions such as the ventricles of the brain, because the user can specify the step size (usually larger than 1) as an input parameter at each iteration. Because the segmentation results are lines in 2D (and, in a planned extension, triangles in 3D), we can use them directly for rendering.


Fighting Game with 2D and 3D Graphics [Project Page] I designed and implemented a four-stage course project as a TA for the graphics course in Fall 2003. The assignments involved simulating martial arts kicking with 2D and 3D graphics. The final assignment included all the basic elements of computer graphics, such as camera movement, illumination and shading, depth of field, soft shadows, texture mapping, and environment mapping.


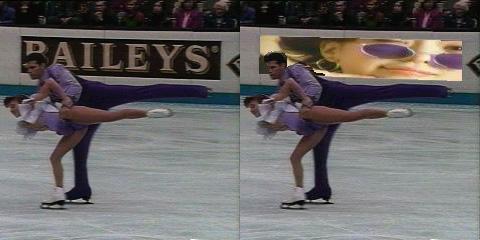

Video Manipulation [Results] This was a project done with Dr. Yiannis Aloimonos in the Computer Processing of Pictorial Information course. The purpose of the project was to alter a video's content by inserting or deleting particular objects. I removed the content of a board (Bailey's banner) in the background of a video sequence and replaced it with another texture (a woman wearing glasses), projecting the picture onto the hockey rink wall with the correct perspective. The replacement involved automatically identifying the board corners using the previous frame and detecting objects moving in front of the board, in order to avoid replacing the wrong pixels.