Kyungjun Lee

Ph.D. Candidate in Computer Science @ University of Maryland, College Park

I am interested in understanding how people interact with computer systems and translating those interactions into inclusive system designs. In particular, I design and develop intelligent systems that augment users' experiences and capabilities by enabling AI to understand people's intentions through their interactions.

I am on the job market and actively looking for industrial research positions in human-centered AI, accessibility, and HCI.

About me

Kyungjun Lee is a 6th-year Ph.D. candidate in Computer Science at the University of Maryland, College Park, and a member of the Human-Computer Interaction Lab and the Intelligent Assistant Machines Lab, advised by Hernisa Kacorri. Kyungjun has been exploring human interactions with AI, AR, and wearable cameras to design systems that better capture users' intent. His Ph.D. dissertation focuses on designing intelligent camera systems that help blind people access their visual surroundings. He has also worked as a research intern on the Lookout team at Google Research and in the HCI group at Snap Research, and has collaborated with the Cognitive Assistance Lab at Carnegie Mellon University.

News

- Oct 2021 Our "From the Lab to People's Home" paper was accepted to W4A 2022!

- Oct 2021 Our "Leveraging Hand-Object Interactions" paper was accepted to TPAMI!

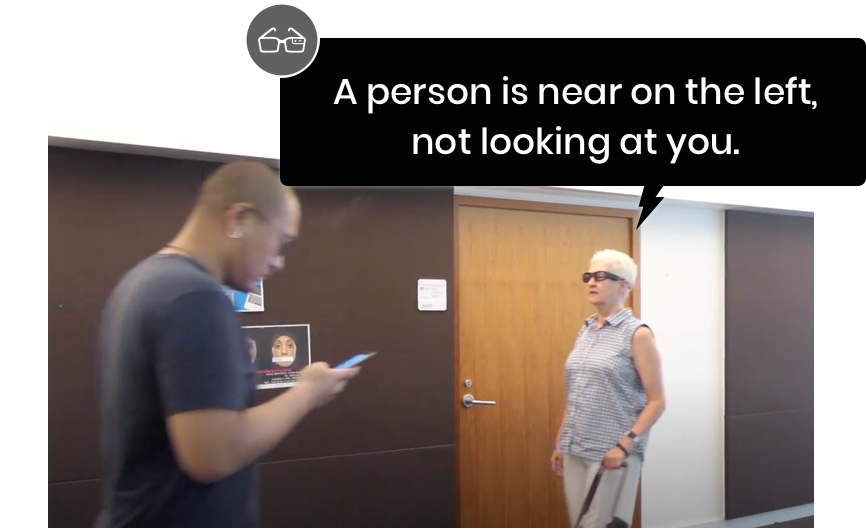

- Jun 2021 Our "Accessing Passersby Proxemic Signals through Smart Glasses" paper was accepted to ASSETS 2021!

- May–Aug 2021 Internship at Google Research with wonderful mentors, Andreina Reyna and Arjun Karpur.

- Jan 2021 Became a Future Faculty Fellow at the University of Maryland, College Park.

- Jan 2021 Successfully proposed my dissertation on egocentric vision in assistive technologies for and by the blind!

- Jun–Aug 2020 Internship at Snap Research with awesome mentors, Rajan Vaish, Brian A. Smith, and Yu Jiang Tham.

- May 2020 Received the HCIL Maryland Way Award for Research Excellence.

Selected publications

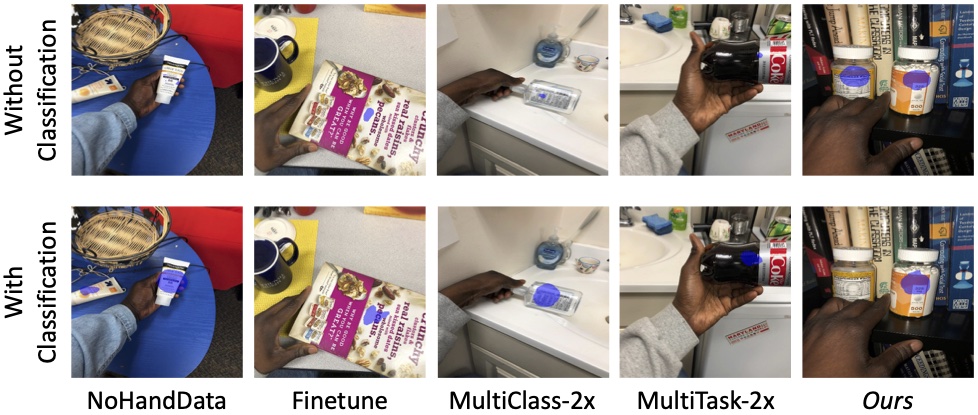

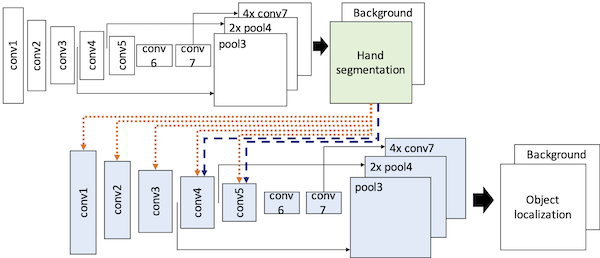

Leveraging Hand-Object Interactions in Assistive Egocentric Vision

Kyungjun Lee, Abhinav Shrivastava, Hernisa Kacorri

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2021

IEEE

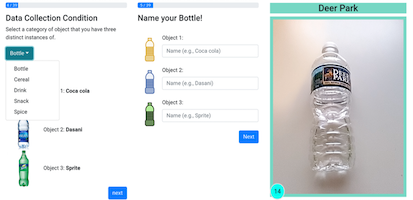

Exploring Machine Teaching for Object Recognition with the Crowd

Jonggi Hong, Kyungjun Lee, June Xu, Hernisa Kacorri

Extended Abstracts of ACM CHI Conference on Human Factors in Computing Systems (CHI), 2019

ACM

© 2022 Kyungjun Lee