Past and Future Developments in Memory Design

The Humble Origins

In the beginning... ENIAC

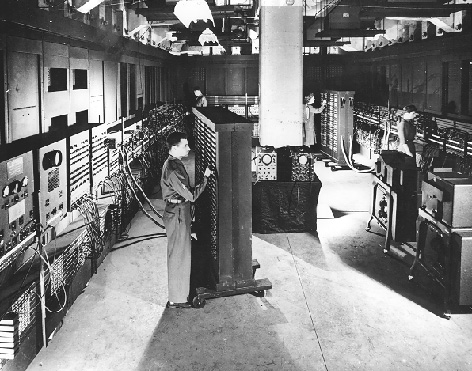

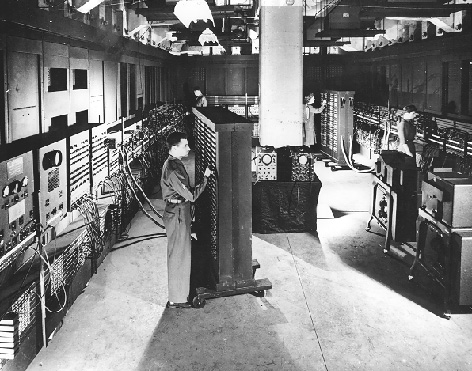

The US Army's ENIAC project was the first computer to have memory storage capacity in any form. Assembled in the fall of 1945, ENIAC was the pinnacle of modern technology (well, at least at the time). It was a 30-ton monster, with thirty separate units, plus a power supply and forced-air cooling. With 19,000 vacuum tubes, 1,500 relays, and hundreds of thousands of resistors, capacitors, and inductors consuming almost 200 kilowatts of electrical power, ENIAC was a glorified calculator, capable of addition, subtraction, multiplication, division, sign differentiation, and square root extraction.

Despite its unfavorable comparison to modern computers, ENIAC was state-of-the-art in its time. ENIAC was the world's first electronic digital computer, and many of its features are still used in computers today. The standard circuitry concepts of gates (logical "and"), buffers (logical "or"), and the use of flip-flops as storage and control devices first appeared in the ENIAC.

ENIAC had no central memory, per se. Rather, it had a series of twenty accumulators, which functioned as computational and storage devices. Each accumulator could store one signed 10-digit decimal number. The accumulator functioned as follows:

ENIAC operated under the control of a series of pulses from a cycling unit, which emitted pulses at 10-microsecond intervals.

ENIAC led the computer field through 1952. In 1953, ENIAC's memory capacity was increased with the addition of a 100-word static magnetic-memory core. Built by the Burroughs Corporation using the binary-coded decimal number system, the memory core was the first of its kind. The core was operational three days after installation and ran until ENIAC was retired, quite a feat given the breakdown-prone ENIAC.

ENIAC: 30 tons of fury

Core Dump

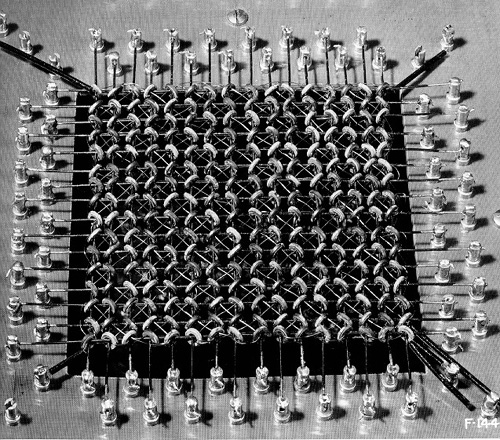

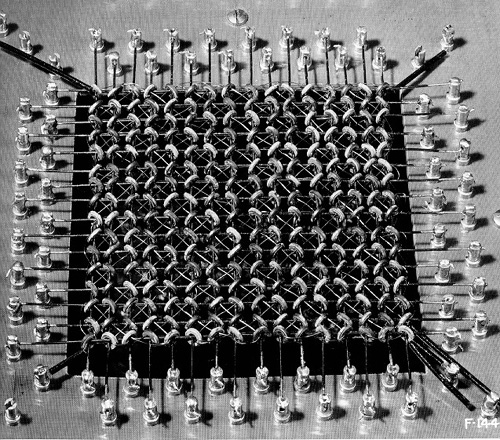

After its creation in 1952, core memory remained the fastest form of memory available until the late 1980s. Core memory (also known as main memory) is composed of a series of donut-shaped magnets, called cores. The cores were treated as binary switches. Each core could be magnetized in either a clockwise or counterclockwise direction, and reversing the direction of magnetization of the "donut" changed its state.

Until fairly recently, core was the dominant form of memory for computing (by 1976, 95% of the world's computers used core memories). It had several features that made it attractive to computer hardware designers. First, it was cheap. Dirt cheap. In 1960, when computers were beginning to be widely used in large commercial enterprises, core memory cost about 20 cents a bit. By 1974, with the widespread use of semiconductors in computers, core memory was running slightly less than a penny per bit. Second, since core memory relied on the magnetic polarity of ferrite cores to retain information, core would not lose the information it contained. A loss of power would not affect the memory, since the states of the magnets wouldn't be changed. In a similar vein, radiation from the machine would have no effect on the state of the memory.

Core memory is organized in two-dimensional matrices, usually in planes of 64 × 64 or 128 × 128 cores. These planes of memory (called "mats") were then stacked to form memory banks, with each individual core barely visible to the human eye. The core read/write wires were split into two wires (column and row), each wire carrying half of the current needed to switch a core. Only the core at the intersection of the selected row and column receives the full switching current, which allows a specific core in the matrix to be addressed for reading and writing.
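The half-current trick is easiest to see in a small simulation. The sketch below is a simplified model, not a circuit description: it assumes currents normalized so that a core switches only at 1.0, gives each drive wire 0.5, and leaves out the sense and inhibit wires of a real plane. The function name `drive` is hypothetical.

```python
# Toy model of coincident-current selection in one core memory plane.
# Assumptions (simplified, not a circuit description): currents are
# normalized so a core switches only at 1.0 or more, each row or column
# drive wire carries half of that (0.5), and the sense and inhibit wires
# of a real plane are left out.

HALF_CURRENT = 0.5
SWITCH_THRESHOLD = 1.0


def drive(plane, row, col, bit):
    """Send half current down one row wire and one column wire.

    Only the core at (row, col) receives both halves and switches to `bit`.
    Cores elsewhere on the same row or column are "half-selected": they see
    0.5, stay below threshold, and keep their state.
    """
    for r, cores in enumerate(plane):
        for c in range(len(cores)):
            current = 0.0
            if r == row:
                current += HALF_CURRENT
            if c == col:
                current += HALF_CURRENT
            if current >= SWITCH_THRESHOLD:
                cores[c] = bit  # 1 = one magnetization direction, 0 = the other
    return plane


# A 4 x 4 stand-in for a 64 x 64 mat, all cores starting at 0.
plane = [[0] * 4 for _ in range(4)]
drive(plane, 2, 1, 1)
for cores in plane:
    print(cores)
```

Every core on row 2 and column 1 receives half current during the call, but only the core where they cross reaches the threshold, so exactly one bit changes.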


A 1951 core memory design. Each core shown here is several millimeters in diameter.

Its Glorious Future

With the rapid changes in computing technology that occur almost daily, it shouldn't be surprising that new paradigms of RAM are being researched. Below are some examples of where the future of RAM technology may be heading.

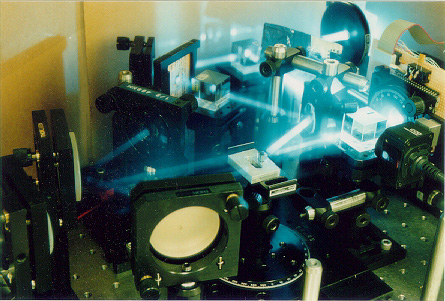

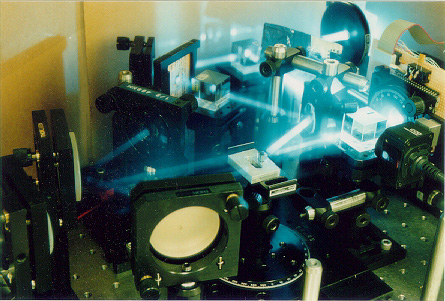

Holographic Memory

We are all familiar with holograms, those cool little optical tricks that create 3D images that seem to follow you around the room. You can get them for a buck at the Dollar Store. But I bet you didn't realize that holograms have the potential to store immense quantities of data in a very compact space. While it's still a few years away from being a commercial product, holographic memory modules have the potential to put gigabytes of memory capacity on your motherboard. Now, I'm not going to jive you: I don't understand this stuff on a very technical level. It's still pretty theoretical. But I'll explain what I do know, and hopefully you'll be able to grasp just how this exciting old technology can be used as a computing medium.

First, what is a hologram? Well, when two coherent beams of light (such as laser beams) intersect in a holographic medium (such as liquid crystal), they leave an interference pattern in the medium at the point of intersection. This interference pattern is what we call a hologram. It has the property that if one of the beams of light is shone through the pattern, it appears as though the second source of light is also illuminating the pattern, making the pattern "jump out at you".

Now, instead of having an image projected, imagine that the source was a page of data. By having a source illuminate a pattern created with the data page, the page of data "comes out". Imagine further that a series of patterns had been recorded in the medium, each with a different page of data. With one laser beam, you could read the data off the holographic crystal. And by simply rotating the crystal a fraction of a degree, the laser would be intersecting a different interference pattern, which would access a whole new page of data! Instead of accessing a series of bits, you would be accessing megabytes of data at a time!

Pretty neat, huh?
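To make the "rotate the crystal, get a new page" idea concrete, here is a toy model in which the crystal angle acts as the address of a whole page. It is a sketch under loudly stated assumptions: the 0.1-degree page spacing, the `HolographicCrystal` class, and pages as plain byte strings are all hypothetical, and none of the actual optics is modeled.

```python
# Toy model of reading pages from a holographic crystal by angle.
# Assumptions (hypothetical, for illustration only): pages are recorded at
# fixed angle steps of 0.1 degree, and a "page" is just a bytes object.
# The optics, noise, and physics of real holographic media are ignored.

ANGLE_STEP_DEG = 0.1  # assumed spacing between recorded pages


class HolographicCrystal:
    def __init__(self):
        self._pages = {}  # angle slot number -> page of data

    def _slot(self, angle_deg):
        # Snap the crystal angle to the nearest recorded slot, so that
        # floating-point noise (e.g. 0.1 + 0.2) still finds the page at 0.3.
        return round(angle_deg / ANGLE_STEP_DEG)

    def record_page(self, angle_deg, page):
        self._pages[self._slot(angle_deg)] = page

    def read_page(self, angle_deg):
        # One read at a given angle returns the whole page, not one bit;
        # an angle with nothing recorded returns None.
        return self._pages.get(self._slot(angle_deg))


crystal = HolographicCrystal()
crystal.record_page(0.0, b"first page of data")
crystal.record_page(0.1, b"second page of data")

# "Rotate the crystal" a fraction of a degree to reach a different page.
print(crystal.read_page(0.0))
print(crystal.read_page(0.1))
```

The design choice to key pages by angle slot mirrors the text: the unit of access is a page, and changing the angle selects which interference pattern the laser hits.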

The implications are pretty obvious. A tremendous amount of data could be stored on a tiny chip. The only limitations would be in the size of the reading and writing optical systems. While this technology is not ready for widespread use, it is exciting to think that computers are approaching this level of capability at... well, at the speed of light.

Here is an example of a holographic memory system. Obviously not ready for PC use.

Molecular Memory

Here is a form of memory under research that is very theoretical.

Conventional wisdom says that although current production methods can cram large amounts of data into the small space of a chip, eventually we will reach a point where we can't cram any more on there. So, what do we do? According to researchers, we look for something smaller to write on: say, about the size of a molecule, or even an atom.

Current research is focused on bacteriorhodopsin, a protein found in the membrane of a microorganism called Halobacterium halobium, which thrives in salt-water marshes. The organism uses these proteins for photosynthesis when oxygen levels in the environment are too low for it to use respiration for energy. The protein also has a feature that attracts researchers: under certain light conditions, the protein changes its physical structure.

The protein is remarkably stable, capable of holding a given state for years at a time (researchers have molecular memory modules which have held their data for two years, and it is theorized that they could remain stable for up to five years). The basis of this memory technology is that in its different physical structures, the bacteriorhodopsin protein absorbs different light spectra. A laser of a certain color is used to flip the proteins from one state to the other. To read the data (and this is the slick part), a red laser is shone on a page of data, with a photosensitive receptor behind the memory module. Protein in the O state (binary 0) absorbs the red light, while protein in the Q state (binary 1) lets the beam pass through onto the photoreceptor, which reads the binary information.
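The read step boils down to a per-site rule: light reaches the receptor means 1, light absorbed means 0. The sketch below assumes each protein site is recorded simply as the letter of its state and ignores absorption spectra, noise, and the write lasers; the function name `photoreceptor_reads` is hypothetical.

```python
# Toy model of reading one page of bacteriorhodopsin memory with a red laser.
# Assumptions (simplified, for illustration only): each protein site is in
# either the "O" state or the "Q" state, and the photoreceptor behind the
# cuvette reports, per site, whether red light reached it.

# O absorbs red light (receptor sees nothing -> 0);
# Q lets red light pass through (receptor sees light -> 1).
RED_LIGHT_PASSES = {"O": False, "Q": True}


def photoreceptor_reads(page_states):
    """Return the bits the photoreceptor sees behind a page of protein states."""
    bits = []
    for state in page_states:
        if state not in RED_LIGHT_PASSES:
            raise ValueError(f"unknown protein state: {state!r}")
        bits.append(1 if RED_LIGHT_PASSES[state] else 0)
    return bits


page = ["Q", "O", "O", "Q", "Q", "O", "Q", "O"]
print(photoreceptor_reads(page))  # [1, 0, 0, 1, 1, 0, 1, 0]
```

Because the whole page is illuminated at once, every site is read in the same step, which is why a single read can return a large block of data.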

The current molecular memory design is a 1" × 1" × 2" transparent cuvette. The proteins are held inside the cuvette by a polymerized gel. Theoretically, this dinky chip can contain upwards of a terabyte of data (roughly one million megabytes). In practice, researchers have only managed to store 800 megs of data. Still, this is an impressive accomplishment, and the designers realistically expect this chip to hold 1.7 gigs of data within the next few years. And while reading is somewhat slow (comparable to some slower forms of today's memory), it returns over a meg of data per read. Not too shabby...

Below is a schematic, taken from Byte online magazine, which shows how molecular memory works:

Q&A

(and you thought you didn't have homework, didn't you?)

Question 1

Your company is switching out the old core memory in its mainframes in favor of solid-state DRAM memory. But is this necessarily a vast improvement? What is an advantage of using core? What aspects will be lost by switching to DRAM?

Question 2

Suppose core memory has a memory access time of 2 microseconds (2 millionths of a second). The new DRAM memory has an average access time of 60 nanoseconds (60 billionths of a second). How much faster is the new memory, given that the CPU of the mainframe runs at 150 MHz?

Question 3

Pretend we just invented a cool new holographic memory system. It holds an immense amount of data, but it's SLOW!!! If a hunk of data isn't in main memory, we have to go to holographic memory (which we will use in place of virtual memory in this instance) and we wait for the access... and wait... and wait...

What kind of memory writing (storage) scheme would best be used to reduce the wait time for writing? (Think: where will a block be placed in the holographic memory? Is there an organizational structure?) What would be the disadvantage of this scheme?

Answers

(No peeking!)

1. While core memory may be outdated, it does have a few things going for it. First, it's cheap. Dirt cheap (ring any bells?). Second, since its state is dependent on the polarity of a ferrite magnet, the memory is non-volatile, and its contents cannot be lost due to power outages or irradiation.

2. The 150 MHz was thrown in there to throw you off: it has no bearing on the question. It's a simple proportion problem. The speedup is the access time of the old memory divided by the access time of the new memory. So...

$$\text{speedup} = \frac{t_{\text{old}}}{t_{\text{new}}} = \frac{2 \times 10^{-6}\ \text{s}}{60 \times 10^{-9}\ \text{s}} = \frac{0.000002}{0.00000006} \approx 33.3$$

You can pound this out on a calculator if you wish. The answer is roughly 33 times faster.

3. We figure a FULLY ASSOCIATIVE organization for the holographic memory would reduce the time it takes to write to it. Just put the block in any free spot. The downside is that it would take longer to find the block once it's in there (we have to search the entire memory for a piece of data).
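The arithmetic in Question 2 can be checked in a few lines using only the numbers given in the question. Computing the ratio both in seconds and in CPU cycles shows why the 150 MHz clock does not matter: multiplying both access times by the same clock rate leaves their ratio unchanged. The constant names are just illustrative.

```python
# Speedup of the new DRAM over core, using the access times from Question 2.
CORE_ACCESS_S = 2e-6   # 2 microseconds
DRAM_ACCESS_S = 60e-9  # 60 nanoseconds
CPU_HZ = 150e6         # 150 MHz mainframe clock

speedup = CORE_ACCESS_S / DRAM_ACCESS_S

# The same ratio, measured in CPU clock cycles instead of seconds.
core_cycles = CORE_ACCESS_S * CPU_HZ  # about 300 cycles
dram_cycles = DRAM_ACCESS_S * CPU_HZ  # about 9 cycles

print(f"speedup: {speedup:.1f}x")
print(f"core: {core_cycles:.0f} cycles, DRAM: {dram_cycles:.0f} cycles")
print(f"speedup in cycles: {core_cycles / dram_cycles:.1f}x")
```

Note that the 9 here is a cycle count, not a time in nanoseconds; dividing 2 microseconds by 9 nanoseconds would mix the two units.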
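Here is a minimal sketch of the fully associative scheme from Answer 3. It assumes a fixed number of slots, integer block IDs, and counts each slot examined ("probe") as a stand-in for one slow holographic access; the class name `FullyAssociativeStore` is hypothetical. Writes take any free slot with no search, while reads may have to scan every slot.

```python
# Toy model of a fully associative placement scheme for a slow store.
# Assumptions (hypothetical, for illustration only): the store has a fixed
# number of block slots, blocks are identified by integer IDs, and each
# slot examined ("probe") stands in for one slow holographic access.


class FullyAssociativeStore:
    """Any block may go in any free slot: cheap writes, expensive lookups."""

    def __init__(self, num_slots):
        self.slots = [None] * num_slots  # each entry: (block_id, data) or None
        self.free = list(range(num_slots))  # indices of unused slots

    def write(self, block_id, data):
        # No placement rule to satisfy: just take whichever slot is free.
        if not self.free:
            raise MemoryError("holographic store is full")
        slot = self.free.pop()
        self.slots[slot] = (block_id, data)

    def read(self, block_id):
        # With no rule saying where a block lives, the search may have to
        # examine every slot. Returns (data, probes); data is None if absent.
        probes = 0
        for entry in self.slots:
            probes += 1
            if entry is not None and entry[0] == block_id:
                return entry[1], probes
        return None, probes


store = FullyAssociativeStore(num_slots=8)
for block_id in range(8):
    store.write(block_id, f"data for block {block_id}")

# Block 0 was written first, into the last free slot, so finding it
# means scanning all 8 slots.
data, probes = store.read(0)
print(data, probes)
```

A real system would usually keep some kind of index to avoid the full scan, but that index is exactly the organizational structure this scheme gives up to make writes fast.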

- This page (c) 1998, Jason Martin -- Last Modified: 12/17/98 -

This page has been created as an academic reference. All sources within have been used without permission for academic purposes.