CMSC216 Project 5: Heaps and Performance Optimization

- Due: 11:59pm Fri 12-Dec-2025

- Approximately 4.0% of total grade

- Submit to Gradescope

- Projects are individual work: no collaboration with other students is allowed. Seek help from course staff if you get stuck for too long.

CODE/TEST DISTRIBUTION: p5-code.zip

Video Overview: https://umd.instructure.com/courses/1388320/pages/week14-videos

CHANGELOG:

- Mon Dec 8 10:42:40 AM EST 2025

- According to Post 709, removed stale references to a "writeup" document in grading criteria for problem 2; this was a leftover from a previous version of the assignment which is not relevant to the current version.

Table of Contents

- 1. Introduction

- 2. Download Code and Setup

- 3. Problem 1 : EL Malloc

- 3.1. EL Malloc Data Structures

- 3.2. Block Header/Footer

- 3.3. Blocks Above/Below

- 3.4. Block Lists and Global Control

- 3.5. Pointers and "Actual" Space

- 3.6. Doubly Linked List Operations

- 3.7. Allocation via Block Splitting

- 3.8. Freeing Blocks and Merging

- 3.9. Expanding the Heap

- 3.10. Overall Code Structure of EL Malloc

- 3.11. Demo Run using EL Malloc

- 3.12. Grading Criteria for Problem 1

- 4. Problem 2: MATATA Operation

- 4.1. Overview

- 4.2. Optimize MATATA

- 4.3. Evaluation on Grace

- 4.4.

matata_print.cTesting Program - 4.5. Optimization Suggestions and Documentation

- 4.6. Hints on Cache-Friendly Memory Traversals

- 4.7. Hints on Multi-threading

- 4.8. Constraints

- 4.9. Additional Optimizations for MAKEUP Credit

- 4.10. Grading Criteria for Problem 1 (55%)

- 5. Assignment Submission

1 Introduction

This project features a two required problems pertaining to the final topics discussed in the course.

- EL Malloc implements a simple, explicit list memory allocator.

This manages heap memory in doubly linked lists of Available and

Used memory blocks to provide

el_malloc() / el_free(). It could be extended with some work to be a drop-in replacement formalloc() / free(). - A baseline implementation for a function operating on a matrix is provided and students will create an optimzied version of it which calculates the same result but in a shorter time. Doing so will involve exploiting knowledge of the memory hierarchy to favor cache and enabling multi-threading to further boost performance.

2 Download Code and Setup

Download the code pack linked at the top of the page. Unzip this which will create a project folder. Create new files in this folder. Ultimately you will re-zip this folder to submit it.

| File | State | Notes |

|---|---|---|

Makefile |

Provided | Problem 1 & 2 Build file |

testy |

Testing | Test running script |

el_malloc.h |

Provided | Problem 1 header file |

el_demo.h |

Provided | Problem 1 demo main() |

el_malloc.c |

COMPLETE | Problem 1 implemented REQUIRED functions |

test_el_malloc.c |

Testing | Problem 1 Testing program for El Malloc |

test_el_malloc.org |

Testing | Problem 1 Testing script for El Malloc |

matata_optm.c |

EDIT | Problem 2 create and fill in optimized function definition |

matata_benchmark.c |

Provided | Problem 2 main benchmark |

matata_print.c |

Provided | Problem 2 testing program |

matata_base.c |

Provided | Problem 2 baseline function to beat |

matata.h |

Provided | Problem 2 header file |

matata_util.c |

Provided | Problem 2 utility functions for matrices/vectors |

test_matata.org |

Testing | Tests to check for memory issues in problem 2 |

3 Problem 1 : EL Malloc

A memory allocator is small system which manages heap memory,

sometimes referred to as the "data" segment of a program. This

portion of program memory is a linear set of addresses that form a

large block which can expand at runtime by making requests to the

operating system. Solving the allocation problem forms backbone of

what malloc()/free() do by keeping track of the space used and

released by a user program. Allocators also see use in garbage

collected languages like Java where there are no explicit free()

calls but the allocator must still find available space for new

objects.

One simple way to implement an allocator is to overlay linked lists on the heap which track at a minimum the available chunks of memory and possibly also the used chunks. This comes with a cost: some of the bytes of memory in the heap are no longer available for the user program but are instead used for the allocator's book-keeping.

In this problem, an explicit list allocator is developed, thus the

name of the system el_malloc. It uses two lists to track memory in

the heap:

- The Available List of blocks of memory that can be used to answer

calls to

malloc() - The Used List of blocks that have been returned by

malloc()and should not be returned again until they arefree()'d

Most operations boil down to manipulating these two lists in some form.

- Allocating with

ptr = el_malloc(size);searches the Available List for a block with sufficient size. That block is split into two blocks. One block answers the request and is given aboutsizebytes; it is moved to the Used List. The second block comprises the remainder of the space and remains on the Available List. - Deallocating with

el_free(ptr);moves the block referenced byptrfrom the Used List to the Available List. To prevent fragmentation of memory, the newly available block is merged with adjacent available blocks if possible.

3.1 EL Malloc Data Structures

Several data structures defined in el_malloc.h should be studied so

that one is acquainted with their intent. The following sections

outline many of these and show diagrams to indicate transformation

the required functions should implement.

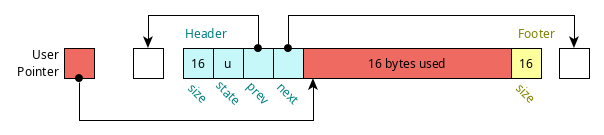

3.2 Block Header/Footer

Each block of memory tracked by EL Malloc is preceded and succeeded by

some bytes of memory for book keeping. These are referred to as the

block "header" and "footer" and are encoded in the el_blockhead_t

and el_blockfoot_t structs.

// type which is a "header" for a block of memory; containts info on

// size, whether the block is available or in use, and links to the

// next/prev blocks in a doubly linked list. This data structure

// appears immediately before a block of memory that is tracked by the

// allocator.

typedef struct block {

size_t size; // number of bytes of memory in this block

char state; // either EL_AVAILABLE or EL_USED

struct block *next; // pointer to next block in same list

struct block *prev; // pointer to previous block in same list

} el_blockhead_t;

// Type for the "footer" of a block; indicates size of the preceding

// block so that its header el_blockhead_t can be found with pointer

// arithmetic. This data appears immediately after an area of memory

// that may be used by a user or is free. Immediately after it is

// either another header (el_blockhead_t) or the end of the heap.

typedef struct {

size_t size;

} el_blockfoot_t;

As indicated, the blocks use doubly linked nodes in the header which will allow easy re-arrangement of the list.

A picture of a block with its header, footer, and user data area is shown below.

Figure 1: Block data preceded by a header (el_blockhead_t) and followed by a footer (el_blockfoot_t)._

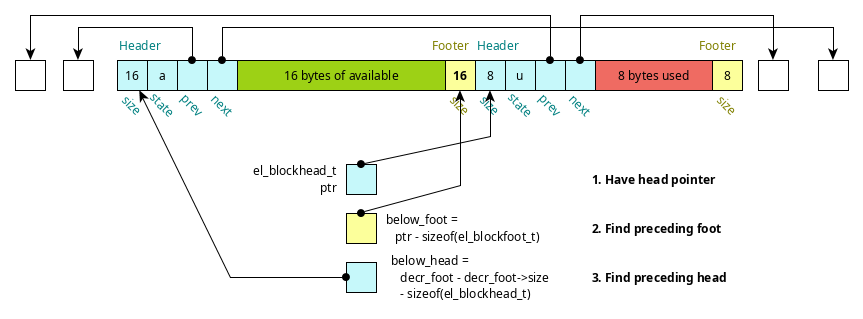

3.3 Blocks Above/Below

One might wonder why the footer appears. In tracking blocks, there will arise the need to work with a block that immediately precedes given block in memory in memory (not the previous in the linked list). The footer enables this by tracking the size of the user block of memory immediately beneath it.

This is illustrated in the diagram below.

Figure 2: Finding preceding block header using footer (el_block_below(header))

This operation is implemented in the function el_block_below(block) and

the similar operation el_block_above(block) finds the next header

immediately following one in memory.

The following functions use pointer arithmetic to determine block locations from a provided pointer.

el_blockfoot_t *el_get_footer(el_blockhead_t *block); el_blockhead_t *el_get_header(el_blockfoot_t *foot); el_blockhead_t *el_block_above(el_blockhead_t *block); el_blockhead_t *el_block_below(el_blockhead_t *block);

These functions benefit from macros defined in el_malloc.h that are

useful for doing pointer operations involving bytes.

// macro to add a byte offset to a pointer, arguments are a pointer // and a # of bytes (usually size_t) #define PTR_PLUS_BYTES(ptr,off) ((void *) (((size_t) (ptr)) + ((size_t) (off)))) // macro to add a byte offset to a pointer, arguments are a pointer // and a # of bytes (usually size_t) #define PTR_MINUS_BYTES(ptr,off) ((void *) (((size_t) (ptr)) - ((size_t) (off)))) // macro to add a byte offset to a pointer, arguments are a pointer // and a # of bytes (usually size_t) #define PTR_MINUS_PTR(ptr,ptq) (((size_t) (ptr)) - ((size_t) (ptq)))

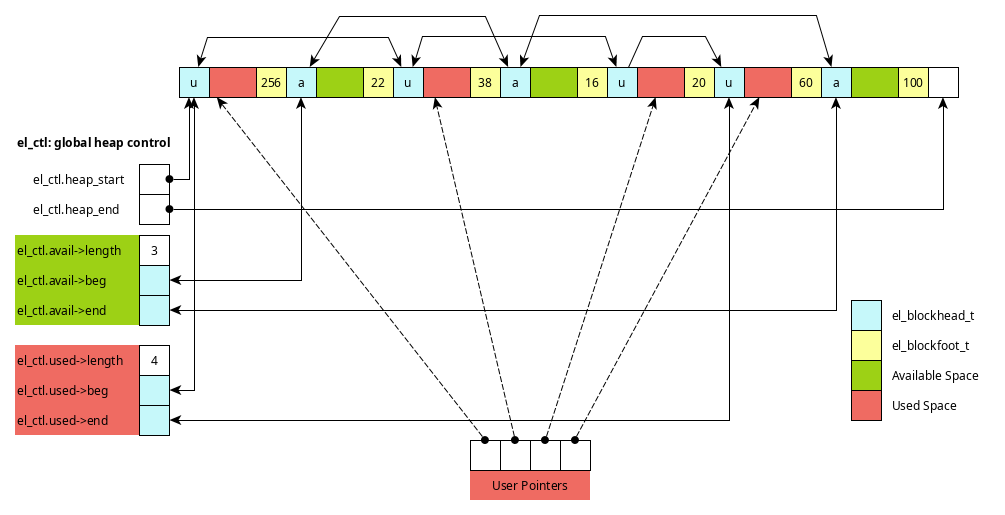

3.4 Block Lists and Global Control

The main purpose of the memory allocator is to track the available and used blocks in explicit linked lists. This allows used and available memory to be distributed throughout the heap. Below are the data structures that track these lists and the global control data structure which houses information for the entire heap.

// Type for a list of blocks; doubly linked with a fixed

// "dummy" node at the beginning and end which do not contain any

// data. List tracks its length and number of bytes in use.

typedef struct {

el_blockhead_t beg_actual; // fixed node at beginning of list; state is EL_BEGIN_BLOCK

el_blockhead_t end_actual; // fixed node at end of list; state is EL_END_BLOCK

el_blockhead_t *beg; // pointer to beg_actual

el_blockhead_t *end; // pointer to end_actual

size_t length; // length of the used block list (not counting beg/end)

size_t bytes; // total bytes in list used including overhead;

} el_blocklist_t;

// NOTE: total available bytes for use/in-use in the list is (bytes - length*EL_BLOCK_OVERHEAD)

// Type for the global control of the allocator. Tracks heap size,

// start and end addresses, total size, and lists of available and

// used blocks.

typedef struct {

void *heap_start; // pointer to where the heap starts

void *heap_end; // pointer to where the heap ends; this memory address is out of bounds

size_t heap_bytes; // number of bytes currently in the heap

el_blocklist_t avail_actual; // space for the available list data

el_blocklist_t used_actual; // space for the used list data

el_blocklist_t *avail; // pointer to avail_actual

el_blocklist_t *used; // pointer to used_actual

} el_ctl_t;

The following diagram shows some of the structure induced by use of a

doubly linked lists overlaid onto the heap. The global control

structure el_ctl has two lists for available and used space.

Figure 3: Structure of heap with several used/available blocks. Pointers from el_ctl lists allow access to these blocks.

The following functions initialize the global control structures, print stats on the heap, and clean up at the end of execution.

int el_init(int max_bytes); void el_print_stats(); void el_cleanup();

3.5 Pointers and "Actual" Space

In several structures, there appear pointers named xxx and structs

named xxx_actual. For example, in el_blocklist_t:

typedef struct {

...

el_blockhead_t beg_actual; // fixed node at beginning of list; state is EL_BEGIN_BLOCK

el_blockhead_t *beg; // pointer to beg_actual

...

} el_blocklist_t;

The intent here is that there will always be a node at the beginning

of the doubly linked list to make the programming easier. It makes

sense to have an actual struct beg_actual present. However, when

working with the list, the address of the beginning node is often

referenced making beg useful. In any case, beg will be initialized

to &beg_actual as appears in el_init_blocklist().

void el_init_blocklist(el_blocklist_t *list){

list->beg = &(list->beg_actual);

list->beg->state = EL_BEGIN_BLOCK;

list->beg->size = EL_UNINITIALIZED;

...

}

Similarly, since there will always be an Available List, el_ctl_t

has both an avail pointer to the list and avail_actual which is

the struct for the list.

3.6 Doubly Linked List Operations

A large number of operations in EL Malloc boil down to doubly linked list operations. This includes

- Unlinking nodes from the middle of list during

el_free() - Adding nodes to the beginning of a headed list (allocation and free)

- Traversing the list to print and search for available blocks

Recall that unlinking a node from a doubly linked list involves modifying the previous and next node as in the following.

node->prev->next = node->next; node->next->prev = node->prev;

while adding a new node to the front is typically accomplished via

node->prev = list->beg; node->next = list->beg->next; node->prev->next = node; node->next->prev = node;

You may wish to review doubly linked list operations and do some reading on lists with "dummy" nodes at the beginning and ending if these concepts are rusty.

The following functions pertain to block list operations.

void el_init_blocklist(el_blocklist_t *list); void el_print_blocklist(el_blocklist_t *list); void el_add_block_front(el_blocklist_t *list, el_blockhead_t *block); void el_remove_block(el_blocklist_t *list, el_blockhead_t *block);

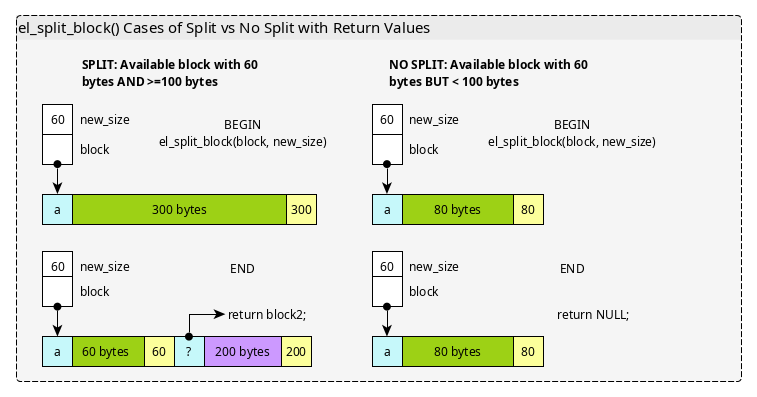

3.7 Allocation via Block Splitting

The basic operation of granting memory on a call to el_malloc(size)

involves finding an Available Block with enough bytes for the

requested amount of memory. In the event that the block is

significantly larger than the request and has enough space for a new

header and footer, it can be split into two blocks with one granting

the user request and another representing the remaining space.

Note that in some cases, blocks cannot be split: below is a diagram

showing case analysis for blocks that have sufficient size to meet

request but cannot be split. The diagram below illustrates the net

result of el_split_block() which may or may not split a block. Keep

in mind that any new block created is not assigned to either the

Available or Used lists but will be in later functions.

Figure 4: Shows the behavior of the el_split_block() function in two cases, a block large enough to split and one that is large enough to meet a request but cannot be split.

The rough steps for the el_malloc() function are shown below for

the case when block splitting occurs.

- A block that is large enough to split is located and removed from the Available list.

- If the block is large enough, it is cut into 2 parts: the requested size and the remainder with a head/foot in between; the original block is set to be part of the Used list and the remainder the Available list

- The blocks are added to the required lists and a pointer to the user data within the now Used block is returned.

Figure 5: Steps for el_malloc() when Splitting a block in an allocation request. The rough progression is shown after finding an appropriately sized block which is split into parts with list membership adjusted for both parts.

The following functions pertain to the location and splitting of blocks in the available list to fulfill allocation requests.

el_blockhead_t *el_find_first_avail(size_t size); el_blockhead_t *el_split_block(el_blockhead_t *block, size_t new_size); void *el_malloc(size_t nbytes);

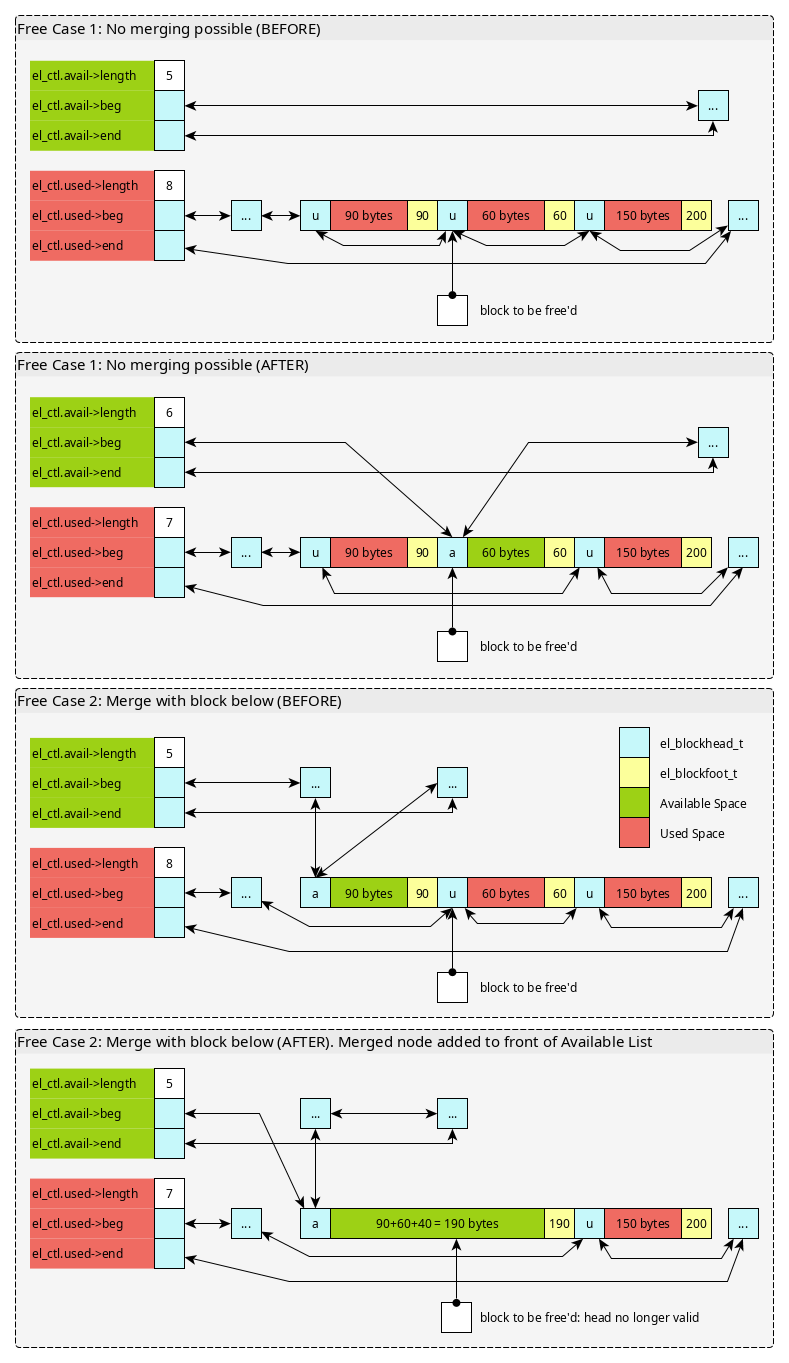

3.8 Freeing Blocks and Merging

Freeing memory passes in a pointer to the user area that was

granted. Immediately preceding this should be a el_blockhead_t and

it can be found with pointer arithmetic.

In order to prevent memory from becoming continually divided into

smaller blocks, on freeing the system checks to see if adjacent blocks

can be merged. Keep in mind that the blocks that can be merged are

adjacent in memory, not next/previous in some linked list. Adjacent

blocks can be located using el_block_above() and el_block_below().

To merge, the adjacent blocks must both be Available (not Used). A free can then have several cases.

- The freed block cannot be merged with any others

- The freed block can be merged with only the block above it

- The freed block can be merged with only the block below it

- The freed block can be merged with both adjacent blocks

The diagrams below show two of these cases.

Figure 6: Two cases of freeing blocks. The 2nd involves merging adjacent nodes with available space.

With careful use of the below functions and handling of NULL

arguments, all 4 cases can be handled with very little code. Keep in

mind that el_block_above()/below() should return NULL if there is

no block above or below due to that are being out of the boundaries of

the heap.

el_blockhead_t *el_block_above(el_blockhead_t *block); el_blockhead_t *el_block_below(el_blockhead_t *block); void el_merge_block_with_above(el_blockhead_t *lower); void el_free(void *ptr);

3.9 Expanding the Heap

El Malloc initializes the heap with just a single page of memory

(EL_PAGE_BYTES = 4096 bytes) during el_init(). While good for

testing, a real application would need more space than this. The

beginnings of heap expansion are provided via the following

function.

int el_append_pages_to_heap(int npages); // REQUIRED // Attempts to append pages of memory to the heap with mmap(). npages // is how many pages are to be appended with total bytes to be // appended as npages * EL_PAGE_BYTES. Calls mmap() with similar // arguments to those used in el_init() however requests the address // of the pages to be at heap_end so that the heap grows // contiguously. If this fails, prints the message // // ERROR: Unable to mmap() additional 3 pages // // and returns 1. Otherwise, adjusts heap size and end for the // expanded heap. Creates a new block for the freshly allocated pages // that is added to the available list. Also attempts to merge this // block with the block below it. Returns 0 on success. // // NOTE ON mmap() USAGE: mmap() returns one of three things if a // specific address is requested (its first argument): // // 1. The address requested indicating the memory mapping succeeded // // 2. A different address than the one requested if the requeste // address is in use // // 3. The constant MAP_FAILED if the address mapping failed. // // #2 and #3 above should trigger this function to immediate print an // #error message and return 1 as the heap cannot be made continuous // #in those cases.

The central idea of the function is to allocate more space for the

heap through mmap() calls. Since it is desirable to treat the heap

a contiguous block of memory, the calls to mmap() should attempt to

map new space for the heap to the el_ctl->heap_end address thereby

"appending" the pages the heap.

Here are a few implementation notes.

- In some cases,

NULLis passed as the first argument tommap()as a user program does not care what virtual address the OS uses for pages of memory. However, El Malloc will have specific addresses that it uses for the heap start and expansion. - Analyze the provided

el_init()function which initially usesmmap()to create the heap. Many aspects of the setup function can be transferred here. - One difference is that while

el_init()allocates the heap atEL_HEAP_START_ADDRESS,el_append_pages_to_heap()should map pages starting at the heap end. This will create a continuous virtual memory space for the heap as it expands. - The Return Value for

mmap()is important and will be one of three things as the docs indicate: the requested address, a different address, orMAP_FAILED. The latter two indicate that heap expansion has failed.

3.10 Overall Code Structure of EL Malloc

Below is the code structure of the EL Malloc library. Some of the

functions have been implemented already while those marked REQUIRED

must be completed for full credit on the problem.

// el_malloc.c: implementation of explicit list malloc functions.

#include "el_malloc.h"

////////////////////////////////////////////////////////////////////////////////

// Global control functions

el_ctl_t *el_ctl = NULL;

// Global control variable for the allocator. Must be initialized in

// el_init().

int el_init(uint64_t initial_heap_size);

// Create an initial block of memory for the heap using

// mmap(). Initialize the el_ctl data structure to point at this

// block. The initializ size/position of the heap for the memory map

// are given in the argument symbol and EL_HEAP_START_ADDRESS.

// Initialize the lists in el_ctl to contain a single large block of

// available memory and no used blocks of memory.

void el_cleanup();

// Clean up the heap area associated with the system which unmaps all

// pages associated with the heap.

////////////////////////////////////////////////////////////////////////////////

// Pointer arithmetic functions to access adjacent headers/footers

el_blockfoot_t *el_get_footer(el_blockhead_t *head);

// Compute the address of the foot for the given head which is at a

// higher address than the head.

el_blockhead_t *el_get_header(el_blockfoot_t *foot);

// REQUIRED

// Compute the address of the head for the given foot which is at a

// lower address than the foot.

el_blockhead_t *el_block_above(el_blockhead_t *block);

// Return a pointer to the block that is one block higher in memory

// from the given block. This should be the size of the block plus

// the EL_BLOCK_OVERHEAD which is the space occupied by the header and

// footer. Returns NULL if the block above would be off the heap.

// DOES NOT follow next pointer, looks in adjacent memory.

el_blockhead_t *el_block_below(el_blockhead_t *block);

// REQUIRED

// Return a pointer to the block that is one block lower in memory

// from the given block. Uses the size of the preceding block found

// in its foot. DOES NOT follow block->next pointer, looks in adjacent

// memory. Returns NULL if the block below would be outside the heap.

//

// WARNING: This function must perform slightly different arithmetic

// than el_block_above(). Take care when implementing it.

////////////////////////////////////////////////////////////////////////////////

// Block list operations

void el_print_blocklist(el_blocklist_t *list);

// Print an entire blocklist. The format appears as follows.

//

// {length: 2 bytes: 3400}

// [ 0] head @ 0x600000000000 {state: a size: 128}

// [ 1] head @ 0x600000000360 {state: a size: 3192}

//

// Note that the '@' column uses the actual address of items which

// relies on a consistent mmap() starting point for the heap.

void el_print_block(el_blockhead_t *block);

// Print a single block during a sequential walk through the heap

void el_print_heap_blocks();

// Print all blocks in the heap in the order that they appear from

// lowest addrses to highest address

void el_print_stats();

// Print out stats on the heap for use in debugging. Shows the

// available and used list along with a linear walk through the heap

// blocks.

void el_init_blocklist(el_blocklist_t *list);

// Initialize the specified list to be empty. Sets the beg/end

// pointers to the actual space and initializes those data to be the

// ends of the list. Initializes length and size to 0.

void el_add_block_front(el_blocklist_t *list, el_blockhead_t *block);

// REQUIRED

// Add to the front of list; links for block are adjusted as are links

// within list. Length is incremented and the bytes for the list are

// updated to include the new block's size and its overhead.

void el_remove_block(el_blocklist_t *list, el_blockhead_t *block);

// REQUIRED

// Unlink block from the list it is in which should be the list

// parameter. Updates the length and bytes for that list including

// the EL_BLOCK_OVERHEAD bytes associated with header/footer.

////////////////////////////////////////////////////////////////////////////////

// Allocation-related functions

el_blockhead_t *el_find_first_avail(size_t size);

// REQUIRED

// Find the first block in the available list with block size of at

// least `size`. Returns a pointer to the found block or NULL if no

// block of sufficient size is available.

el_blockhead_t *el_split_block(el_blockhead_t *block, size_t new_size);

// REQUIRED

// Set the pointed to block to the given size and add a footer to

// it. Creates another block above it by creating a new header and

// assigning it the remaining space. Ensures that the new block has a

// footer with the correct size. Returns a pointer to the newly

// created block while the parameter block has its size altered to

// parameter size. Does not do any linking of blocks nor changes of

// list membership: this is done elsewhere. If the parameter block

// does not have sufficient size for a split (at least new_size +

// EL_BLOCK_OVERHEAD for the new header/footer) makes no changes tot

// the block and returns NULL indicating no new block was created.

void *el_malloc(size_t nbytes);

// REQUIRED

// Return pointer to a block of memory with at least the given size

// for use by the user. The pointer returned is to the usable space,

// not the block header. Makes use of find_first_avail() to find a

// suitable block and el_split_block() to split it. Returns NULL if

// no space is available.

////////////////////////////////////////////////////////////////////////////////

// De-allocation/free() related functions

void el_merge_block_with_above(el_blockhead_t *lower);

// REQUIRED

// Attempt to merge the block lower with the next block in

// memory. Does nothing if lower is null or not EL_AVAILABLE and does

// nothing if the next higher block is null (because lower is the last

// block) or not EL_AVAILABLE. Otherwise, locates the next block with

// el_block_above() and merges these two into a single block. Adjusts

// the fields of lower to incorporate the size of higher block and the

// reclaimed overhead. Adjusts footer of higher to indicate the two

// blocks are merged. Removes both lower and higher from the

// available list and re-adds lower to the front of the available

// list.

void el_free(void *ptr);

// REQUIRED

// Free the block pointed to by the give ptr. The area immediately

// preceding the pointer should contain an el_blockhead_t with information

// on the block size. Attempts to merge the free'd block with adjacent

// blocks using el_merge_block_with_above(). If called on a NULL

// pointer or the block is not in state EL_USED, prints the error

//

// ERROR: el_free() not called on an EL_USED block

//

// and returns immediately without further action.

////////////////////////////////////////////////////////////////////////////////

// HEAP EXPANSION FUNCTIONS

int el_append_pages_to_heap(int npages);

// REQUIRED

// Attempts to append pages of memory to the heap with mmap(). npages

// is how many pages are to be appended with total bytes to be

// appended as npages * EL_PAGE_BYTES. Calls mmap() with similar

// arguments to those used in el_init() however requests the address

// of the pages to be at heap_end so that the heap grows

// contiguously. If this fails, prints the message

//

// ERROR: Unable to mmap() additional 3 pages

//

// and returns 1. Otherwise, adjusts heap size and end for the

// expanded heap. Creates a new block for the freshly allocated pages

// that is added to the available list. Also attempts to merge this

// block with the block below it. Returns 0 on success.

//

// NOTE ON mmap() USAGE: mmap() returns one of three things if a

// specific address is requested (its first argument):

//

// 1. The address requested indicating the memory mapping succeeded

//

// 2. A different address than the one requested if the requeste

// address is in use

//

// 3. The constant MAP_FAILED if the address mapping failed.

//

// #2 and #3 above should trigger this function to immediate print an

// #error message and return 1 as the heap cannot be made continuous

// #in those cases.

3.11 Demo Run using EL Malloc

Below is a run showing the behavior of a series of el_malloc() /

el_free() calls. They are performed in the provided el_demo.c

program.

Source for el_demo.c

// el_demo.c: Shows use cases for el_malloc() and el_free(). This file

// can be used for testing but is not itself a test.

#include <stdio.h>

#include <stdlib.h>

#include <assert.h>

#include "el_malloc.h"

void print_ptr(char *str, void *ptr){

if(ptr == NULL){

printf("%s: (nil)\n", str);

}

else{

printf("%s: %p\n", str, ptr);

}

}

int main(){

printf("EL_BLOCK_OVERHEAD: %lu\n",EL_BLOCK_OVERHEAD);

el_init(EL_HEAP_DEFAULT_SIZE);

printf("INITIAL\n"); el_print_stats(); printf("\n");

void *p1 = el_malloc(128);

void *p2 = el_malloc(48);

void *p3 = el_malloc(156);

printf("MALLOC 3\n"); el_print_stats(); printf("\n");

printf("POINTERS\n");

print_ptr("p3",p3);

print_ptr("p2",p2);

print_ptr("p1",p1);

printf("\n");

void *p4 = el_malloc(22);

void *p5 = el_malloc(64);

printf("MALLOC 5\n"); el_print_stats(); printf("\n");

printf("POINTERS\n");

print_ptr("p5",p5);

print_ptr("p4",p4);

print_ptr("p3",p3);

print_ptr("p2",p2);

print_ptr("p1",p1);

printf("\n");

el_free(p1);

printf("FREE 1\n"); el_print_stats(); printf("\n");

el_free(p3);

printf("FREE 3\n"); el_print_stats(); printf("\n");

p3 = el_malloc(32);

p1 = el_malloc(200);

printf("ALLOC 3,1 AGAIN\n"); el_print_stats(); printf("\n");

printf("POINTERS\n");

print_ptr("p1",p1);

print_ptr("p3",p3);

print_ptr("p5",p5);

print_ptr("p4",p4);

print_ptr("p2",p2);

printf("\n");

el_free(p1);

printf("FREE'D 1\n"); el_print_stats(); printf("\n");

el_free(p2);

printf("FREE'D 2\n"); el_print_stats(); printf("\n");

p1 = el_malloc(3438);

p2 = el_malloc(1024);

printf("P2 FAILS\n");

printf("POINTERS\n");

print_ptr("p1",p1);

print_ptr("p3",p3);

print_ptr("p5",p5);

print_ptr("p4",p4);

print_ptr("p2",p2);

el_print_stats(); printf("\n");

el_append_pages_to_heap(3);

printf("APPENDED PAGES\n"); el_print_stats(); printf("\n");

p2 = el_malloc(1024);

printf("P2 SUCCEEDS\n");

printf("POINTERS\n");

print_ptr("p1",p1);

print_ptr("p3",p3);

print_ptr("p5",p5);

print_ptr("p4",p4);

print_ptr("p2",p2);

el_print_stats(); printf("\n");

el_free(p1);

el_free(p2);

el_free(p3);

el_free(p4);

el_free(p5);

printf("FREE'D 1-5\n"); el_print_stats(); printf("\n");

el_cleanup();

return 0;

}

Output of El Malloc Demo

EL_BLOCK_OVERHEAD: 40

INITIAL

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 1 bytes: 4096}

[ 0] head @ 0x612000000000 {state: a size: 4056}

USED LIST: {length: 0 bytes: 0}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 4056 (total: 0x1000)

prev: 0x610000000018

next: 0x610000000038

user: 0x612000000020

foot: 0x612000000ff8

foot->size: 4056

MALLOC 3

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 1 bytes: 3644}

[ 0] head @ 0x6120000001c4 {state: a size: 3604}

USED LIST: {length: 3 bytes: 452}

[ 0] head @ 0x612000000100 {state: u size: 156}

[ 1] head @ 0x6120000000a8 {state: u size: 48}

[ 2] head @ 0x612000000000 {state: u size: 128}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: u

size: 128 (total: 0xa8)

prev: 0x6120000000a8

next: 0x610000000098

user: 0x612000000020

foot: 0x6120000000a0

foot->size: 128

[ 1] @ 0x6120000000a8

state: u

size: 48 (total: 0x58)

prev: 0x612000000100

next: 0x612000000000

user: 0x6120000000c8

foot: 0x6120000000f8

foot->size: 48

[ 2] @ 0x612000000100

state: u

size: 156 (total: 0xc4)

prev: 0x610000000078

next: 0x6120000000a8

user: 0x612000000120

foot: 0x6120000001bc

foot->size: 156

[ 3] @ 0x6120000001c4

state: a

size: 3604 (total: 0xe3c)

prev: 0x610000000018

next: 0x610000000038

user: 0x6120000001e4

foot: 0x612000000ff8

foot->size: 3604

POINTERS

p3: 0x612000000120

p2: 0x6120000000c8

p1: 0x612000000020

MALLOC 5

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 1 bytes: 3478}

[ 0] head @ 0x61200000026a {state: a size: 3438}

USED LIST: {length: 5 bytes: 618}

[ 0] head @ 0x612000000202 {state: u size: 64}

[ 1] head @ 0x6120000001c4 {state: u size: 22}

[ 2] head @ 0x612000000100 {state: u size: 156}

[ 3] head @ 0x6120000000a8 {state: u size: 48}

[ 4] head @ 0x612000000000 {state: u size: 128}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: u

size: 128 (total: 0xa8)

prev: 0x6120000000a8

next: 0x610000000098

user: 0x612000000020

foot: 0x6120000000a0

foot->size: 128

[ 1] @ 0x6120000000a8

state: u

size: 48 (total: 0x58)

prev: 0x612000000100

next: 0x612000000000

user: 0x6120000000c8

foot: 0x6120000000f8

foot->size: 48

[ 2] @ 0x612000000100

state: u

size: 156 (total: 0xc4)

prev: 0x6120000001c4

next: 0x6120000000a8

user: 0x612000000120

foot: 0x6120000001bc

foot->size: 156

[ 3] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x612000000100

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 4] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x610000000078

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 5] @ 0x61200000026a

state: a

size: 3438 (total: 0xd96)

prev: 0x610000000018

next: 0x610000000038

user: 0x61200000028a

foot: 0x612000000ff8

foot->size: 3438

POINTERS

p5: 0x612000000222

p4: 0x6120000001e4

p3: 0x612000000120

p2: 0x6120000000c8

p1: 0x612000000020

FREE 1

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 2 bytes: 3646}

[ 0] head @ 0x612000000000 {state: a size: 128}

[ 1] head @ 0x61200000026a {state: a size: 3438}

USED LIST: {length: 4 bytes: 450}

[ 0] head @ 0x612000000202 {state: u size: 64}

[ 1] head @ 0x6120000001c4 {state: u size: 22}

[ 2] head @ 0x612000000100 {state: u size: 156}

[ 3] head @ 0x6120000000a8 {state: u size: 48}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 128 (total: 0xa8)

prev: 0x610000000018

next: 0x61200000026a

user: 0x612000000020

foot: 0x6120000000a0

foot->size: 128

[ 1] @ 0x6120000000a8

state: u

size: 48 (total: 0x58)

prev: 0x612000000100

next: 0x610000000098

user: 0x6120000000c8

foot: 0x6120000000f8

foot->size: 48

[ 2] @ 0x612000000100

state: u

size: 156 (total: 0xc4)

prev: 0x6120000001c4

next: 0x6120000000a8

user: 0x612000000120

foot: 0x6120000001bc

foot->size: 156

[ 3] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x612000000100

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 4] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x610000000078

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 5] @ 0x61200000026a

state: a

size: 3438 (total: 0xd96)

prev: 0x612000000000

next: 0x610000000038

user: 0x61200000028a

foot: 0x612000000ff8

foot->size: 3438

FREE 3

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 3 bytes: 3842}

[ 0] head @ 0x612000000100 {state: a size: 156}

[ 1] head @ 0x612000000000 {state: a size: 128}

[ 2] head @ 0x61200000026a {state: a size: 3438}

USED LIST: {length: 3 bytes: 254}

[ 0] head @ 0x612000000202 {state: u size: 64}

[ 1] head @ 0x6120000001c4 {state: u size: 22}

[ 2] head @ 0x6120000000a8 {state: u size: 48}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 128 (total: 0xa8)

prev: 0x612000000100

next: 0x61200000026a

user: 0x612000000020

foot: 0x6120000000a0

foot->size: 128

[ 1] @ 0x6120000000a8

state: u

size: 48 (total: 0x58)

prev: 0x6120000001c4

next: 0x610000000098

user: 0x6120000000c8

foot: 0x6120000000f8

foot->size: 48

[ 2] @ 0x612000000100

state: a

size: 156 (total: 0xc4)

prev: 0x610000000018

next: 0x612000000000

user: 0x612000000120

foot: 0x6120000001bc

foot->size: 156

[ 3] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x6120000000a8

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 4] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x610000000078

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 5] @ 0x61200000026a

state: a

size: 3438 (total: 0xd96)

prev: 0x612000000000

next: 0x610000000038

user: 0x61200000028a

foot: 0x612000000ff8

foot->size: 3438

ALLOC 3,1 AGAIN

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 3 bytes: 3530}

[ 0] head @ 0x61200000035a {state: a size: 3198}

[ 1] head @ 0x612000000148 {state: a size: 84}

[ 2] head @ 0x612000000000 {state: a size: 128}

USED LIST: {length: 5 bytes: 566}

[ 0] head @ 0x61200000026a {state: u size: 200}

[ 1] head @ 0x612000000100 {state: u size: 32}

[ 2] head @ 0x612000000202 {state: u size: 64}

[ 3] head @ 0x6120000001c4 {state: u size: 22}

[ 4] head @ 0x6120000000a8 {state: u size: 48}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 128 (total: 0xa8)

prev: 0x612000000148

next: 0x610000000038

user: 0x612000000020

foot: 0x6120000000a0

foot->size: 128

[ 1] @ 0x6120000000a8

state: u

size: 48 (total: 0x58)

prev: 0x6120000001c4

next: 0x610000000098

user: 0x6120000000c8

foot: 0x6120000000f8

foot->size: 48

[ 2] @ 0x612000000100

state: u

size: 32 (total: 0x48)

prev: 0x61200000026a

next: 0x612000000202

user: 0x612000000120

foot: 0x612000000140

foot->size: 32

[ 3] @ 0x612000000148

state: a

size: 84 (total: 0x7c)

prev: 0x61200000035a

next: 0x612000000000

user: 0x612000000168

foot: 0x6120000001bc

foot->size: 84

[ 4] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x6120000000a8

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 5] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x612000000100

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 6] @ 0x61200000026a

state: u

size: 200 (total: 0xf0)

prev: 0x610000000078

next: 0x612000000100

user: 0x61200000028a

foot: 0x612000000352

foot->size: 200

[ 7] @ 0x61200000035a

state: a

size: 3198 (total: 0xca6)

prev: 0x610000000018

next: 0x612000000148

user: 0x61200000037a

foot: 0x612000000ff8

foot->size: 3198

POINTERS

p1: 0x61200000028a

p3: 0x612000000120

p5: 0x612000000222

p4: 0x6120000001e4

p2: 0x6120000000c8

FREE'D 1

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 3 bytes: 3770}

[ 0] head @ 0x61200000026a {state: a size: 3438}

[ 1] head @ 0x612000000148 {state: a size: 84}

[ 2] head @ 0x612000000000 {state: a size: 128}

USED LIST: {length: 4 bytes: 326}

[ 0] head @ 0x612000000100 {state: u size: 32}

[ 1] head @ 0x612000000202 {state: u size: 64}

[ 2] head @ 0x6120000001c4 {state: u size: 22}

[ 3] head @ 0x6120000000a8 {state: u size: 48}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 128 (total: 0xa8)

prev: 0x612000000148

next: 0x610000000038

user: 0x612000000020

foot: 0x6120000000a0

foot->size: 128

[ 1] @ 0x6120000000a8

state: u

size: 48 (total: 0x58)

prev: 0x6120000001c4

next: 0x610000000098

user: 0x6120000000c8

foot: 0x6120000000f8

foot->size: 48

[ 2] @ 0x612000000100

state: u

size: 32 (total: 0x48)

prev: 0x610000000078

next: 0x612000000202

user: 0x612000000120

foot: 0x612000000140

foot->size: 32

[ 3] @ 0x612000000148

state: a

size: 84 (total: 0x7c)

prev: 0x61200000026a

next: 0x612000000000

user: 0x612000000168

foot: 0x6120000001bc

foot->size: 84

[ 4] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x6120000000a8

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 5] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x612000000100

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 6] @ 0x61200000026a

state: a

size: 3438 (total: 0xd96)

prev: 0x610000000018

next: 0x612000000148

user: 0x61200000028a

foot: 0x612000000ff8

foot->size: 3438

FREE'D 2

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 3 bytes: 3858}

[ 0] head @ 0x612000000000 {state: a size: 216}

[ 1] head @ 0x61200000026a {state: a size: 3438}

[ 2] head @ 0x612000000148 {state: a size: 84}

USED LIST: {length: 3 bytes: 238}

[ 0] head @ 0x612000000100 {state: u size: 32}

[ 1] head @ 0x612000000202 {state: u size: 64}

[ 2] head @ 0x6120000001c4 {state: u size: 22}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 216 (total: 0x100)

prev: 0x610000000018

next: 0x61200000026a

user: 0x612000000020

foot: 0x6120000000f8

foot->size: 216

[ 1] @ 0x612000000100

state: u

size: 32 (total: 0x48)

prev: 0x610000000078

next: 0x612000000202

user: 0x612000000120

foot: 0x612000000140

foot->size: 32

[ 2] @ 0x612000000148

state: a

size: 84 (total: 0x7c)

prev: 0x61200000026a

next: 0x610000000038

user: 0x612000000168

foot: 0x6120000001bc

foot->size: 84

[ 3] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x610000000098

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 4] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x612000000100

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 5] @ 0x61200000026a

state: a

size: 3438 (total: 0xd96)

prev: 0x612000000000

next: 0x612000000148

user: 0x61200000028a

foot: 0x612000000ff8

foot->size: 3438

P2 FAILS

POINTERS

p1: 0x61200000028a

p3: 0x612000000120

p5: 0x612000000222

p4: 0x6120000001e4

p2: (nil)

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000001000

total_bytes: 4096

AVAILABLE LIST: {length: 2 bytes: 380}

[ 0] head @ 0x612000000000 {state: a size: 216}

[ 1] head @ 0x612000000148 {state: a size: 84}

USED LIST: {length: 4 bytes: 3716}

[ 0] head @ 0x61200000026a {state: u size: 3438}

[ 1] head @ 0x612000000100 {state: u size: 32}

[ 2] head @ 0x612000000202 {state: u size: 64}

[ 3] head @ 0x6120000001c4 {state: u size: 22}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 216 (total: 0x100)

prev: 0x610000000018

next: 0x612000000148

user: 0x612000000020

foot: 0x6120000000f8

foot->size: 216

[ 1] @ 0x612000000100

state: u

size: 32 (total: 0x48)

prev: 0x61200000026a

next: 0x612000000202

user: 0x612000000120

foot: 0x612000000140

foot->size: 32

[ 2] @ 0x612000000148

state: a

size: 84 (total: 0x7c)

prev: 0x612000000000

next: 0x610000000038

user: 0x612000000168

foot: 0x6120000001bc

foot->size: 84

[ 3] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x610000000098

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 4] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x612000000100

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 5] @ 0x61200000026a

state: u

size: 3438 (total: 0xd96)

prev: 0x610000000078

next: 0x612000000100

user: 0x61200000028a

foot: 0x612000000ff8

foot->size: 3438

APPENDED PAGES

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000004000

total_bytes: 16384

AVAILABLE LIST: {length: 3 bytes: 12668}

[ 0] head @ 0x612000001000 {state: a size: 12248}

[ 1] head @ 0x612000000000 {state: a size: 216}

[ 2] head @ 0x612000000148 {state: a size: 84}

USED LIST: {length: 4 bytes: 3716}

[ 0] head @ 0x61200000026a {state: u size: 3438}

[ 1] head @ 0x612000000100 {state: u size: 32}

[ 2] head @ 0x612000000202 {state: u size: 64}

[ 3] head @ 0x6120000001c4 {state: u size: 22}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 216 (total: 0x100)

prev: 0x612000001000

next: 0x612000000148

user: 0x612000000020

foot: 0x6120000000f8

foot->size: 216

[ 1] @ 0x612000000100

state: u

size: 32 (total: 0x48)

prev: 0x61200000026a

next: 0x612000000202

user: 0x612000000120

foot: 0x612000000140

foot->size: 32

[ 2] @ 0x612000000148

state: a

size: 84 (total: 0x7c)

prev: 0x612000000000

next: 0x610000000038

user: 0x612000000168

foot: 0x6120000001bc

foot->size: 84

[ 3] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x610000000098

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 4] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x612000000100

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 5] @ 0x61200000026a

state: u

size: 3438 (total: 0xd96)

prev: 0x610000000078

next: 0x612000000100

user: 0x61200000028a

foot: 0x612000000ff8

foot->size: 3438

[ 6] @ 0x612000001000

state: a

size: 12248 (total: 0x3000)

prev: 0x610000000018

next: 0x612000000000

user: 0x612000001020

foot: 0x612000003ff8

foot->size: 12248

P2 SUCCEEDS

POINTERS

p1: 0x61200000028a

p3: 0x612000000120

p5: 0x612000000222

p4: 0x6120000001e4

p2: 0x612000001020

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000004000

total_bytes: 16384

AVAILABLE LIST: {length: 3 bytes: 11604}

[ 0] head @ 0x612000001428 {state: a size: 11184}

[ 1] head @ 0x612000000000 {state: a size: 216}

[ 2] head @ 0x612000000148 {state: a size: 84}

USED LIST: {length: 5 bytes: 4780}

[ 0] head @ 0x612000001000 {state: u size: 1024}

[ 1] head @ 0x61200000026a {state: u size: 3438}

[ 2] head @ 0x612000000100 {state: u size: 32}

[ 3] head @ 0x612000000202 {state: u size: 64}

[ 4] head @ 0x6120000001c4 {state: u size: 22}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 216 (total: 0x100)

prev: 0x612000001428

next: 0x612000000148

user: 0x612000000020

foot: 0x6120000000f8

foot->size: 216

[ 1] @ 0x612000000100

state: u

size: 32 (total: 0x48)

prev: 0x61200000026a

next: 0x612000000202

user: 0x612000000120

foot: 0x612000000140

foot->size: 32

[ 2] @ 0x612000000148

state: a

size: 84 (total: 0x7c)

prev: 0x612000000000

next: 0x610000000038

user: 0x612000000168

foot: 0x6120000001bc

foot->size: 84

[ 3] @ 0x6120000001c4

state: u

size: 22 (total: 0x3e)

prev: 0x612000000202

next: 0x610000000098

user: 0x6120000001e4

foot: 0x6120000001fa

foot->size: 22

[ 4] @ 0x612000000202

state: u

size: 64 (total: 0x68)

prev: 0x612000000100

next: 0x6120000001c4

user: 0x612000000222

foot: 0x612000000262

foot->size: 64

[ 5] @ 0x61200000026a

state: u

size: 3438 (total: 0xd96)

prev: 0x612000001000

next: 0x612000000100

user: 0x61200000028a

foot: 0x612000000ff8

foot->size: 3438

[ 6] @ 0x612000001000

state: u

size: 1024 (total: 0x428)

prev: 0x610000000078

next: 0x61200000026a

user: 0x612000001020

foot: 0x612000001420

foot->size: 1024

[ 7] @ 0x612000001428

state: a

size: 11184 (total: 0x2bd8)

prev: 0x610000000018

next: 0x612000000000

user: 0x612000001448

foot: 0x612000003ff8

foot->size: 11184

FREE'D 1-5

HEAP STATS (overhead per node: 40)

heap_start: 0x612000000000

heap_end: 0x612000004000

total_bytes: 16384

AVAILABLE LIST: {length: 1 bytes: 16384}

[ 0] head @ 0x612000000000 {state: a size: 16344}

USED LIST: {length: 0 bytes: 0}

HEAP BLOCKS:

[ 0] @ 0x612000000000

state: a

size: 16344 (total: 0x4000)

prev: 0x610000000018

next: 0x610000000038

user: 0x612000000020

foot: 0x612000003ff8

foot->size: 16344

3.12 Grading Criteria for Problem 1 grading 45

| Weight | Criteria |

|---|---|

| Automated Tests | |

| 20 | make test-prob1 runs tests for correctness with Valgrind enabled |

| 20 tests, 1 point per test | |

| 25 | Manual Inspection |

| 4 | Code Style: Functions adhere to CMSC 216 C Coding Style Guide which dictates reasonable indentation, commenting, consistency of curly usage, etc. |

| 3 | el_get_header() and el_block_below() |

| Use of provided macros for pointer arithmetic | |

Correct use of sizeof() operator to account for sizes |

|

el_block_below() checks for beginning of heap and returns NULL |

|

| 3 | el_add_block_front() and el_remove_block() |

| Sensible use of pointers prev/next to link/unlink nodes efficiently; no looping used | |

| Correct updating of list length and bytes | |

Accounts for EL_BLOCK_OVERHEAD when updating bytes |

|

| 3 | el_split_block() |

Use of el_get_footer() to obtain footers for updating size |

|

| Checks to determine if block is large enough to be split; returns NULL if not | |

| Clear evidence of placing a new header and footer for new block when splitting | |

Accounting for overhead EL_BLOCK_OVERHEAD when calculating new size |

|

| 3 | el_malloc() |

Use of el_find_first_avail() to locate a node to split |

|

Use of el_split_block() to split block into two |

|

| Clear state change of split blocks to Used and Available | |

| Clear movement of lower split blocks to front of Used List | |

| Clear movement of upper split blocks to front of Available lists | |

| Use of pointer arithmetic macros to computer user address | |

| 3 | el_merge_block_with_above() |

NULL checks for argument and block above which result in no changes |

|

Clear checks of whether both blocks are EL_AVAILABLE |

|

Use of el_block_above() to find block above |

|

| Clear updates to size of lower block and higher foot | |

| Movement of blocks out of available list and merged block to front | |

| 3 | el_free() |

Error checking that block is EL_USED |

|

| Movement of block from used to available list | |

| Attempts to merge block with blocks above and below it | |

| 3 | el_append_pages_to_heap() |

Makes use of mmap() to map in additional pages of virtual memory |

|

Checks mmap() for failures and prints appropriate error messages |

|

| Maps new heap space to current heap end to create a contiguous heap | |

| Creates new available block for new space and attempts to merge with existing available blocks | |

| 45 | Problem Total |

4 Problem 2: MATATA Operation

4.1 Overview

A problem that occasionally arises in numerical computing when working with Matrices (2D Arrays) is to multiply the transpose of a matrix by itself, notated AT × A and referred to here as a the "MATATA" operation (short for MATrix AT times A, "matata" is not widely used to describe this matrix operation and internet searches it are likely to turn up unrelated results).

The MATATA operation is a special case of general matrix multiplication and students unfamiliar with this operation should study it to get some context for the present work. The diagram below illustrates a sample matrix A, its transpose AT that exchanges rows and columns, and the results of computing AT × A. Note that for rectangular matrices with R rows and C columns, the results of

Figure 7: 4 by 3 matrix A with colored elements, its transpose At, and the result of multiplying At × A.

Note that that for each of the elements of the result matrix, the elements are the result of a row in the transpose multiplied by a column in the original matrix:

Ans[i][j] = 0;

for(k = 0 to Ans.cols){

Ans[i][j] += At[i][k] * A[k][j];

}

After commuting the MATATA, it is often further used in numerical operations which are improved by normalizing the matrix to a limited range. There are several choices for this but here we will favor the maximum absolute value of elements in the MATATA and divide all other elements in the MATATA by it to normalize the matrix (the maximum absolute value of a vector or matrix is often referred to as its infinity norm in technical literature).

The file matata_base.c provides a baseline function that performs

the MATATA and normalization in a direct fashion as the above

definition indicates The algorithm uses the most "natural" approach of

multiplying each row of the transpose by each column of the original

to produce the result in the for each part of the squared result. The

transposed matrix is formed via a malloc(), copying elements, then

performing the multiply. As you survey the code, note the use of

various convenience macros such as MGET(mat,i,j) interact with the

matrix type used.

1: #include "matata.h" 2: 3: // exploits symmetry, uses a transpose matrix, does not have a good 4: // memory access pattern and is "serial" - single threaded 5: int matata_BASE(matrix_t mat, matrix_t ans) { 6: matrix_t tra; // allocate space for transpose matrix 7: matrix_init(&tra, mat.cols, mat.rows); 8: 9: for(int i=0; i<mat.rows; i++){ // copy elements into transpose matrix 10: for(int j=0; j<mat.cols; j++){ 11: float mij = MGET(mat, i, j); 12: MSET(tra, j, i, mij); 13: } 14: } 15: 16: for(int i=0; i<mat.cols; i++){ // compute ans = A^T * A 17: for(int j=i; j<mat.cols; j++){ // compute upper triangle elements 18: float sum = 0; 19: for(int k=0; k<mat.rows; k++){ // sum over dot(row, col) 20: sum += MGET(tra, i, k)*MGET(mat, k, j); 21: } 22: MSET(ans, i, j, sum); // exploit symmetry: assign upper 23: MSET(ans, j, i, sum); // trianble element to lower triangle 24: } 25: } 26: 27: float max = -INFINITY; // calculate absolute max in ans 28: for(int i=0; i<mat.cols; i++){ 29: for(int j=0; j<mat.cols; j++){ 30: float mij = fabsf(MGET(ans, i, j)); 31: if(mij > max){ 32: max = mij; 33: } 34: } 35: } 36: for(int i=0; i<mat.cols; i++){ // normalize matrix by dividing 37: for(int j=0; j<mat.cols; j++){ // by absolute maximum value 38: float mij = MGET(ans, i, j); 39: MSET(ans,i,j, mij / max); 40: } 41: } 42: 43: matrix_free_data(&tra); // de-allocate transpose mat 44: 45: return 0; // return success 46: }

Note that the original matrix comes in as the parameter mat and the

normalized MATATA result is stored in the parameter ans.

While this algorithm is a direct translation of how humans would visually calculate the MATATA of small matrices, it is unfortunately fairly slow when executing on most modern computing systems.

4.2 Optimize MATATA

The purpose of this problem is to write matata_OPTM() which is a

faster version of the provided matata_BASE() to calculate the

results.

Write your code in the file matata_optm.c.

Keep the following things in mind.

- You will need to acquaint yourself with the functions and types

related to matrices and vectors provided in the

matata.hand demonstrated in the baseline function. Understanding the layout of the matrix in memory is essential to unlocking performance. - The goal of

matata_OPTM()is to exceed the performance ofmatata_BASE()by as much as possible. - To achieve this goal, several optimizations must be implemented and suggestions are given in a later section.

- There is one additional parameter to the optimized function:

matata_OPTM(mat,avg,std,thread_count). This indicates the number of threads that should be used during the computation: one of the optimizations that is essential is to add multi-threading support. - Part of your grade will be based on the speed of the optimized code

on

grace.umd.edu. The main routinematata_benchmark.cwill be used for this.

Some details are provided in subsequent sections.

4.3 Evaluation on Grace

The file matata_benchmark.c provides a benchmark for the speed of

the algorithms. It will be used by graders to evaluate the submitted

code and should be used during development to gauge performance

improvements. It runs both the Baseline and Optimized versions

(from matata_base.c and matata_optm.c respectively) and compares

their performance.

Students must paste their benchmark results from GRACE into their

matata_optm.c file. During grading, staff will independently

evaluate your code performance on a grace node and take the best score

from 3 runs of the benchmark.

While the benchmark runs on any machine, it is specifically tailored to run on the following machines:

grace3.umd.edu grace4.umd.edu grace5.umd.edu grace6.umd.edu grace7.umd.edu grace8.umd.edu grace9.umd.edu grace10.umd.edu

These Grace nodes have a reasonably large cache and fast processor

while other Grace nodes have slower, older processors. The scoring

present in matata_benchmark.c is "tuned" to these machines and will

likely report incorrect results on other machines. Test your

performance on these nodes so that no unexpected results occur after

submission.

The output of the matata_benchmark is shown below.

ROWS/COLS: the size of the matrix being used.BASE: the time it takes formatata_BASE()to complete.T: number of threads used for runningmatata_OPTM()OPTM: the time it takes formatata_OPTM()to complete.SPDUP: the speedup ofmatata_OPTM()overmatata_BASE()which isBASE / OPTM.POINT: points earned on this run according to the following code:double points = log(speedup_OPTM) / log(2.0) * 2.0;

This scheme means that unless actual optimizations are implemented, 0 points will be scored. Each speedup by a factor of 2X earns 2 point2: finishing in half the time (2X speedup) of the Baseline earns 2.0 points, one quarter of time (4X speedup) earns 4.0 points, 1/8th the time (8X speedup) 6.0 points, and so on.

TOTAL: the running total of points accumulated after running each segment of the benchmark.

Below are several demonstration runs of the benchmark.

# ###############################################################################

# RUN ON INCORRECT MACHINE (NOT grace nodes), NOTE WARNINGS

homputer>> ./matata_benchmark

WARNING: UNEXPECTED HOST 'val'

WARNING: ensure you are on an normal GRACE node

WARNING: timing results / scoring will not reflect actual scoring

WARNING: run on one of the following hosts for accurate results

WARNING: grace3.umd.edu

WARNING: grace4.umd.edu

WARNING: grace5.umd.edu

WARNING: grace6.umd.edu

WARNING: grace7.umd.edu

WARNING: grace8.umd.edu

WARNING: grace9.umd.edu

WARNING: grace10.umd.edu

==== Matrix A^T*A Benchmark Version 2.0 ====

Performing 11 runs with 2 thread_counts (max 2)

ROWS COLS BASE T OPTM SPDUP POINT TOTAL

128 128 0.007 1 0.008 0.96 0.00 0.00

2 0.004 1.70 1.53 1.53

171 151 0.012 1 0.013 0.92 0.00 1.53

2 0.007 1.67 1.49 3.01

196 180 0.019 1 0.021 0.94 0.00 3.01

2 0.011 1.71 1.54 4.56

256 128 0.013 1 0.019 0.70 0.00 4.56

2 0.010 1.30 0.76 5.32

256 256 0.066 1 0.077 0.86 0.00 5.32

2 0.040 1.64 1.42 6.74

512 256 0.179 1 0.181 0.99 0.00 6.74

2 0.099 1.81 1.71 8.45

512 512 0.784 1 0.787 1.00 0.00 8.45

2 0.424 1.85 1.78 10.22

640 512 0.992 1 0.920 1.08 0.22 10.44

2 0.517 1.92 1.88 12.32

640 640 1.175 1 1.437 0.82 0.00 12.32

2 0.685 1.71 1.56 13.88

768 640 1.539 1 1.689 0.91 0.00 13.88

2 0.850 1.81 1.71 15.59

801 800 1.991 1 2.154 0.92 0.00 15.59

2 1.086 1.83 1.75 17.34

RAW POINTS: 17.34

TOTAL POINTS: 17 / 35

WARNING: UNEXPECTED HOST 'val'

WARNING: ensure you are on an normal GRACE node

WARNING: timing results / scoring will not reflect actual scoring

WARNING: run on one of the following hosts for accurate results

WARNING: grace3.umd.edu

WARNING: grace4.umd.edu

WARNING: grace5.umd.edu

WARNING: grace6.umd.edu

WARNING: grace7.umd.edu

WARNING: grace8.umd.edu

WARNING: grace9.umd.edu

WARNING: grace10.umd.edu

# ###############################################################################

# PARTIAL CREDIT RUN

>> ssh grace.umd.edu

...

grace4>> ./mata_benchmark

./matata_benchmark

==== Matrix A^T*A Benchmark Version 2.0 ====

Performing 12 runs with 2 thread_counts (max 2)

ROWS COLS BASE T OPTM SPDUP POINT TOTAL

128 128 0.008 1 0.009 0.88 0.00 0.00

2 0.005 1.64 1.43 1.43

171 151 0.015 1 0.016 0.98 0.00 1.43

2 0.008 1.90 1.85 3.28

196 180 0.025 1 0.027 0.94 0.00 3.28

2 0.018 1.41 1.00 4.28

216 128 0.015 1 0.015 0.98 0.00 4.28

2 0.009 1.70 1.54 5.81

256 128 0.018 1 0.019 0.96 0.00 5.81

2 0.010 1.89 1.83 7.64

256 256 0.093 1 0.078 1.19 0.51 8.16

2 0.052 1.81 1.71 9.86

512 256 0.138 1 0.167 0.83 0.00 9.86

2 0.099 1.39 0.95 10.82

512 512 0.648 1 0.725 0.89 0.00 10.82

2 0.370 1.75 1.62 12.43

640 512 0.847 1 0.959 0.88 0.00 12.43

2 0.475 1.78 1.67 14.10

640 640 1.095 1 1.357 0.81 0.00 14.10

2 0.684 1.60 1.36 15.46

768 640 1.377 1 1.642 0.84 0.00 15.46

2 0.830 1.66 1.46 16.92

801 800 2.211 1 2.953 0.75 0.00 16.92

2 1.981 1.12 0.32 17.23

RAW POINTS: 17.23

TOTAL POINTS: 17 / 35

# ###############################################################################

# FULL CREDIT RUN

>> ssh grace.umd.edu

...

grace3>> ./matata_benchmark

==== Matrix A^T*A Benchmark Version 2.0 ====

Performing 12 runs with 2 thread_counts (max 2)

ROWS COLS BASE T OPTM SPDUP POINT TOTAL

128 128 0.008 1 0.007 1.15 0.39 0.39

2 0.004 1.88 1.82 2.21

171 151 0.016 1 0.013 1.16 0.44 2.65

2 0.007 2.19 2.27 4.92

196 180 0.026 1 0.021 1.23 0.60 5.51

2 0.011 2.27 2.37 7.88

216 128 0.015 1 0.012 1.22 0.56 8.45

2 0.007 2.14 2.20 10.65

256 128 0.017 1 0.014 1.24 0.62 11.27

2 0.007 2.30 2.40 13.67

256 256 0.068 1 0.055 1.24 0.62 14.29

2 0.031 2.16 2.22 16.51

512 256 0.137 1 0.106 1.29 0.74 17.25

2 0.057 2.40 2.52 19.77

512 512 0.596 1 0.429 1.39 0.94 20.72

2 0.225 2.65 2.81 23.53

640 512 0.833 1 0.533 1.56 1.29 24.81

2 0.273 3.05 3.22 28.03

640 640 1.086 1 0.839 1.29 0.75 28.78

2 0.420 2.59 2.74 31.52

768 640 1.314 1 1.002 1.31 0.78 32.30

2 0.548 2.40 2.53 34.83

801 800 2.199 1 2.070 1.06 0.18 35.00

2 0.828 2.66 2.82 37.82

RAW POINTS: 37.82

TOTAL POINTS: 35 / 35

Note that it is possible to exceed the score associated with maximal performance (as seen in the RAW POINTS reported) but no more than the final reported points will be given for the performance portion of the problem.

Significantly exceeding the max score may garner some MAKEUP credit: you'll know you earned this as the benchmark will report as much. See the later section for suggestions of potential additional optimizations.

4.4 matata_print.c Testing Program

As one works on implementing optimizations in matata_OPTM(), bugs

which compute incorrect results are often introduced. To aid in

testing, the matata_print() program runs both the BASE and OPTM

versions on the same matrix and shows all results. The matrix size is

determined from the command line and is printed on the screen to

enable hand verification. Examples are below.

################################################################################ # RUN 1: Single threaded, Correct Results >> ./matata_print 6 4 1 # run on a 6 by 4 matrix, 1 thread ==== Matrix A^T*A Print ==== Original Matrix: # original matrix 6 x 4 matrix 0: 0.00 1.00 2.00 3.00 1: 4.00 5.00 6.00 7.00 2: 8.00 9.00 10.00 11.00 3: 12.00 13.00 14.00 15.00 4: 16.00 17.00 18.00 19.00 5: 20.00 21.00 22.00 23.00 BASE Matrix A^T*A : # results computed by matata_BASE() 4 x 4 matrix 0: 0.680062 0.726430 0.772798 0.819165 1: 0.726430 0.777434 0.828439 0.879444 2: 0.772798 0.828439 0.884080 0.939722 3: 0.819165 0.879444 0.939722 1.000000 OPTM Matrix A^T*A : # results computed by matata_OPTM() 4 x 4 matrix 0: 0.680062 0.726430 0.772798 0.819165 1: 0.726430 0.777434 0.828439 0.879444 2: 0.772798 0.828439 0.884080 0.939722 3: 0.819165 0.879444 0.939722 1.000000 BASE/OPTM Element Comparison: # comparison of elements [ i][ j]: BASE OPTM [ 0][ 0]: 0.680062 0.680062 # all match - CORRECT RESULTS [ 0][ 1]: 0.726430 0.726430 [ 0][ 2]: 0.772798 0.772798 [ 0][ 3]: 0.819165 0.819165 [ 1][ 0]: 0.726430 0.726430 [ 1][ 1]: 0.777434 0.777434 [ 1][ 2]: 0.828439 0.828439 [ 1][ 3]: 0.879444 0.879444 [ 2][ 0]: 0.772798 0.772798 [ 2][ 1]: 0.828439 0.828439 [ 2][ 2]: 0.884080 0.884080 [ 2][ 3]: 0.939722 0.939722 [ 3][ 0]: 0.819165 0.819165 [ 3][ 1]: 0.879444 0.879444 [ 3][ 2]: 0.939722 0.939722 [ 3][ 3]: 1.000000 1.000000 ################################################################################ # RUN 2: Two threads, Incorrect Results >> ./matata_print 6 4 2 # run on a 6 by 4 matrix with 2 threads ==== Matrix A^T*A Print ==== Original Matrix: 6 x 4 matrix 0: 0.00 1.00 2.00 3.00 1: 4.00 5.00 6.00 7.00 2: 8.00 9.00 10.00 11.00 3: 12.00 13.00 14.00 15.00 4: 16.00 17.00 18.00 19.00 5: 20.00 21.00 22.00 23.00 BASE Matrix A^T*A : 4 x 4 matrix 0: 0.680062 0.726430 0.772798 0.819165 1: 0.726430 0.777434 0.828439 0.879444 2: 0.772798 0.828439 0.884080 0.939722 3: 0.819165 0.879444 0.939722 1.000000 OPTM Matrix A^T*A : 4 x 4 matrix 0: 0.510029 0.540115 0.570201 0.600287 1: 0.604585 0.647564 0.690544 0.733524 2: 0.699140 0.755014 0.810888 0.866762 3: 0.793696 0.862464 0.931232 1.000000 BASE/OPTM Element Comparison: [ i][ j]: BASE OPTM [ 0][ 0]: 0.680062 0.510029 *** # most elements mismatch [ 0][ 1]: 0.726430 0.540115 *** # likely bugs in thread implementation [ 0][ 2]: 0.772798 0.570201 *** [ 0][ 3]: 0.819165 0.600287 *** [ 1][ 0]: 0.726430 0.604585 *** [ 1][ 1]: 0.777434 0.647564 *** [ 1][ 2]: 0.828439 0.690544 *** [ 1][ 3]: 0.879444 0.733524 *** [ 2][ 0]: 0.772798 0.699140 *** [ 2][ 1]: 0.828439 0.755014 *** [ 2][ 2]: 0.884080 0.810888 *** [ 2][ 3]: 0.939722 0.866762 *** [ 3][ 0]: 0.819165 0.793696 *** [ 3][ 1]: 0.879444 0.862464 *** [ 3][ 2]: 0.939722 0.931232 *** [ 3][ 3]: 1.000000 1.000000

4.5 Optimization Suggestions and Documentation

Labs and lectures have covered several kinds of optimizations which

are useful to improve the speed of matata_OPTM(). These techniques

include:

- Re-ordering memory accesses to be as sequential as possible which favors cache. Unless memory accesses favor cache, it is unlikely that any other optimizations will provide much speedup. There are several possibilities to optimizing cache so make sure to read the section on alternatives.

- Use threads to split up the work of computation. The problem has a high degree of parallelism in it with many portions that can be computed in worker threads independently from others. Assume that the number of threads to use will be small compared to the size of the matrix. For example, the benchmark uses at most 2 threads and the smallest matrix is 128 rows by 128 columns.

In most cases, implementing these two optimizations correctly will yield full performance points. Look for examples in Lecture and Lab to assist you along with more discussion in this document.

4.6 Hints on Cache-Friendly Memory Traversals

There are two approaches to getting more out of cache in this setting.

- Attempt to use the Transpose matrix which inverts rows and columns. This is a quick and easy way to get more speed as it allows traversing rows instead of columns which tends to favor cache. However, the transpose matrix must be created which takes a fair amount of time and is extremely hard to make cache friendly. For the ultimate speed, a different approach is necessary.

- Utilize a completely different iteration pattern that still allows the answer matrix to be computed but favors cache while doing so.

Relying on the creation of the Transpose matrix will likely not earn full credit. Instead, study the diagram below which suggests a way to compute a row the answer matrix in a cache friendly fashion. It favors repeatedly traversing the matrix in a row-wise fashion to avoid the stride required for column-wise access. Consider implementing the approach illustrated before implementing any threading. Threads that exhibit poor cache behavior tend to slow each other down; more benefit comes from adding multi-threading to a cache-friendly serial solution.

Figure 8: Illustration of how to favor row-wise access to compute squared matrix. Computation of first row of the answer matrix is shown with natural extensions to rows beyond that.

4.7 Hints on Multi-threading

Division of Matrix Among Threads

The matrix must be divided among worker threads. The bulk of the work that is done by BASE algorithm is computing the "upper triangle" of the output values in this part of the code:

int matata_BASE(matrix_t mat, matrix_t ans) {

...;

for(int i=0; i<mat.cols; i++){ // compute ans = A^T * A

for(int j=i; j<mat.cols; j++){ // compute upper triangle elements

float sum = 0;

for(int k=0; k<mat.rows; k++){ // sum over dot(row, col)

sum += MGET(tra, i, k)*MGET(mat, k, j);

}

MSET(ans, i, j, sum); // exploit symmetry: assign upper

MSET(ans, j, i, sum); // trianble element to lower triangle

}

}

...

}

It is natural to then subdivide this computation among threads,

possibly dividing the i, j, or k loops among multiple

threads.

Students are encouraged to divide the outer i loop among

threads. The reasons for this are worth discussion but the most

efficient explanation is that this is how the solution code that gets

full points is constructed. Note also that the cache-friendly

computation technique described in another section also an outer i

loop and it is wise to take the same approach of dividing the outer

loop among threads.

Use caution when assign threads to outer loop iterations. The structure of the code computes a "triangle":

- The

i=0outer iteration loops fromj=i=0tomat.cols - The

i=1outer iteration loops fromj=i=1tomat.cols, one fewer than ati=0 - The

i=mat.cols-1iteration loops fromj=i=mat.cols-1tomat.cols, only a single iteration.

That means if Thread 0 is assigned rows 0 to 49, it has a LARGE part of the triangle while Thread 1 assigned to rows 50-99 has a SMALL part of the triangle.

Ideally an equal division of work among threads is used. An easy way to arrange this is with the following code pattern:

int matata_OPTM(matrix_t mat, matrix_t ans, int tc){

...;

for(int i=thread_id; i<mat.cols; i+=thread_count){

...

}

...

}

Here threads will alternate rows; with 2 threads

- Thread 0 will take rows 0,2,4,6,…

- Thread 1 will take rows 1,3,5,7,…

which will lead to an even amount of work and may actually help cache performance as threads will be more likely to work on adjacent rows.

Note as well that the number of threads will be small so that it is likely several hundred or several thousands rows/cols will be assigned dot each thread. This will allow well-written code the have each thread work independently on portions of the matrix in parallel with other threads.

Use of Mutexes

Whenever a thread must access a memory element that other threads may reference, protect the access to that memory with a Mutex. Each thread should lock the mutex, make changes to the data, then unlock the mutex. Failure to do this when altering shared data may result in incorrect results. Try to keep the code between lock/unlock as short and fast as possible as only one thread can execute it at a time.

For this problem, it is likely that a single lock/unlock will be required.

Use of Barriers

Later parts of the computation may require all threads to complete earlier parts. For example, without having the maximum element across the entire MATATA answer matrix, the answer matrix cannot be normalized correctly. If multiple threads are cooperating to compute a shared result, all threads must finish their task before any should move ahead. In these cases, a Barrier is useful.

Below is a basic code synopsis of how to use a barrier. Most solutions

to MATATA will use 1-3 pthread_barrier_wait() calls and can utilize

the same barrier for all of them.

// global variable, may also be a local variable in a struct

pthread_barrier_t barrier;

void main_thread(int thread_count) {

// main thread sets up the barrier

pthread_barrier_init(&barrier, NULL, thread_count);

// main thread creates threads

for(int i=0; i<thread_count; i++){

...;

pthread_create(..., worker, ...);

}

...;

}

void *worker(void *){

// worker threads compute PART A of the computation

...;

// before continuing to PART B, all threads must finish PART A

// use the barrier to wait until

pthread_barrier_wait(barrier);

// all threads now finished with PART A, safe to proceed to part B

// worker threads compute PART B of the computation

...;

// before continuing to PART C, all threads must finish PART B

// use the barrier to wait until

pthread_barrier_wait(barrier);

// all threads now finished with PART B, safe to proceed to part C