We are seeing an increasing trend towards the generation of, and interaction with, very large time-varying datasets in scientific simulation. These datasets are used to study the dynamics and evolution of phenomena in a variety of application domains, ranging from the evolution of planets and stars to the understanding of life itself. For instance, a cardiologist may be interested in understanding the deformations, strain, and variations of the electric field across a beating heart; a meteorologist may use time-varying atmospheric simulation and observation datasets to validate weather prediction models and study atmospheric instabilities; a biochemist may use molecular dynamics simulations to better understand the relationships between biomacromolecular form and function; an aeronautical engineer may use the streamlines of air flow over an airplane for better aircraft design; and a physicist may use time-varying datasets to study solar wind turbulence and microgravity fluid mechanics. These datasets are characterized by their very large sizes, ranging from hundreds of gigabytes to tens of terabytes, by multiple superposed scalar and vector fields, and by an imperative need for interactive exploratory visualization capabilities. Our research deals with how visualization can help build better scientist-computer interfaces to facilitate knowledge discovery and transformative understanding of problems in science, engineering, and medicine.


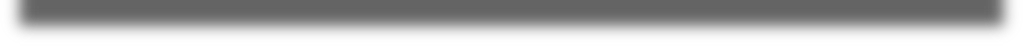

Our interest in multi-core processing focuses on designing highly parallel algorithms for grand challenge problems in science and engineering. Our high-performance computing and visualization effort takes advantage of the synergies afforded by coupling central processing units (CPUs), graphics processing units (GPUs), displays, and storage. The infrastructure is being used to support a broad program of computing research that revolves around understanding, augmenting, and leveraging the power of heterogeneous vector computing enabled by GPU co-processors. The driving force here is the availability of cheap, powerful, and programmable GPUs as well as other multi-core architectures such as the Cell processor. The CPU-GPU coupled cluster is enabling the pursuit of several new research directions in computing, as well as a better understanding of, and fast solutions to, several existing interdisciplinary problems through a visualization-assisted computational steering environment. The research groups that are using this cluster fall into several broad interdisciplinary computing areas. We are exploring visualization of large datasets and algorithms for parallel rendering. In high-performance computing, we are developing and analyzing efficient algorithms for querying large scientific datasets as well as for modeling complex systems when uncertainty is included in the models. We are using the cluster for several applications in computational biology, including computational modeling and visualization of proteins, conformational steering in protein structure prediction, and sequence alignment. We are also using the cluster for applications in real-time computer vision, real-time 3D virtual audio, large-scale modeling of neural networks, and high-energy physics.
The coupled cluster with a large-area high-resolution display screen is serving as a valuable resource to present, interactively explore, evaluate, and validate the ongoing research in visualization, vision, scientific computing, human-computer interfaces, and computational biology with active participation of graduate as well as undergraduate students.


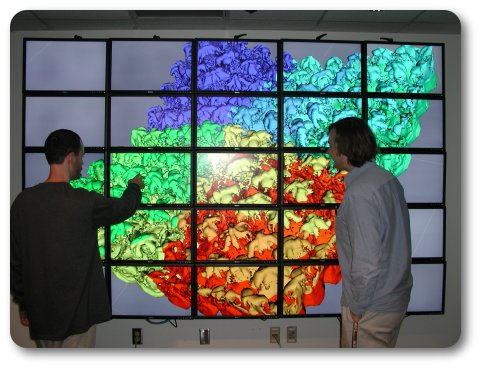

Progressive Estimation of Molecular Surface Area for PDB 1HTO on NVIDIA GeForce 8800 GPU (8x speedup)

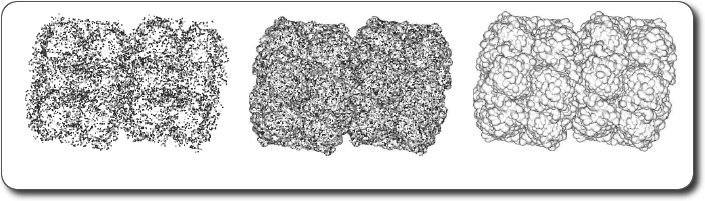

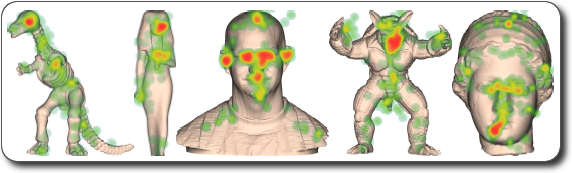

Even as simulation datasets have been growing at an exponential rate, the capabilities of the human visual system have remained unchanged. Furthermore, the bandwidth into the human cognitive machinery remains constant. As a result, we have now reached a stage where current-generation simulation datasets can easily overwhelm the limits of human comprehension. The human visual system deals with these limitations by focusing retinal hardware and attention on what is most important. The challenge of visual presentation and analysis of very large datasets compels us to re-examine not just how to present data, but what data to present. Visual scalability is thus rapidly emerging as one of the grand challenges. This has informed much of our recent research in developing saliency-guided techniques for large data visualization and analysis. Most of the time, most of the data is innocuous and unimportant, and even considering it wastes precious time and resources. However, current visualization systems effectively assume by default that every piece of data is equally important. Our research in perceptual graphics focuses on devising superior visualization systems that leverage the principles of perception of visual salience and illumination. We regularly validate our results through eye-tracking-based user studies.


Lambertian rendering, observed visual hotspots (from eye-tracking-based user studies), and computed mesh saliency maps for some commonly used 3D graphics models


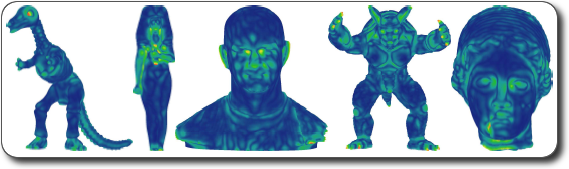

Our research into representation and analysis of geometry deals with 3D point-based graphics, triangle and tetrahedral meshes, volumes and isosurfaces, multiresolution hierarchies, and stochastic representations of geometry. We have developed a variety of multiresolution data structures to represent continuous and discrete levels of detail for geometry. Our work lends itself to view-dependent and view-independent processing of geometry. We have also worked on generation of triangle strips, skip strips, and a variety of other techniques for compression of connectivity and geometry. Our group has a number of results in nearest-neighbor finding for triangle and tetrahedral meshes, as well as point clouds. We have also developed novel algorithms for efficient extraction of isosurfaces from volume data as well as representation of volumes using radial basis functions. We have explored representations of geometry that map especially well to the streaming model of computation on some of the modern multi-core processors, as well as algorithms to efficiently detect self-similar patterns in point cloud datasets.

Illumination models are important for photo-realistic image synthesis. Accurately modeling the physical interaction of light with objects is an exciting but challenging task. We have shown how low-dimensional (nine-dimensional) spherical harmonic representations of lighting can be used to model the lighting variation on Lambertian (non-shiny) objects. We have also integrated bidirectional surface scattering reflectance distribution function (BSSRDF) effects for translucent meshes into a run-time, single-pass local illumination model with efficient (linear) run-time complexity by precomputing scattering integrals and using a multiresolution spherical harmonic representation. We have also developed a new method for accelerating ray tracing of complex reflective and refractive objects by substituting accurate-but-slow intersection calculations with approximate-but-fast interpolation computations. Our approach is based on modeling the reflective/refractive object as a function that maps input rays entering the object to output rays exiting the object.
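As a rough illustration of the nine-dimensional spherical harmonic idea mentioned above, the sketch below evaluates the irradiance arriving at a Lambertian surface point from nine lighting coefficients. It uses the standard textbook formulation (real spherical harmonics up to degree 2, scaled by the per-band cosine-lobe factors π, 2π/3, and π/4) and is not necessarily how our own systems are implemented. The names `sh9_basis`, `LAMBERT_BAND`, and `irradiance` are hypothetical and chosen only for this example.

```python
import math

def sh9_basis(n):
    """Real spherical harmonics of degree 0..2 at unit direction n = (x, y, z)."""
    x, y, z = n
    c0 = 0.5 * math.sqrt(1.0 / math.pi)          # degree 0
    c1 = math.sqrt(3.0 / (4.0 * math.pi))        # degree 1
    c2 = 0.5 * math.sqrt(15.0 / math.pi)         # degree 2: xy, yz, xz terms
    c3 = 0.25 * math.sqrt(5.0 / math.pi)         # degree 2: 3z^2 - 1 term
    c4 = 0.25 * math.sqrt(15.0 / math.pi)        # degree 2: x^2 - y^2 term
    return [
        c0,
        c1 * y, c1 * z, c1 * x,
        c2 * x * y, c2 * y * z, c3 * (3.0 * z * z - 1.0),
        c2 * x * z, c4 * (x * x - y * y),
    ]

# Per-band scale factors for convolving lighting with the clamped-cosine
# (Lambertian) kernel: one value for degree 0, three for degree 1, five for degree 2.
LAMBERT_BAND = [math.pi] + [2.0 * math.pi / 3.0] * 3 + [math.pi / 4.0] * 5

def irradiance(light_coeffs, normal):
    """Approximate irradiance at a surface point with the given normal.

    light_coeffs: nine spherical harmonic coefficients of the incident lighting
                  (assumed to be in the same ordering as sh9_basis).
    normal:       surface normal; normalized here for safety.
    """
    length = math.sqrt(sum(c * c for c in normal))
    unit = tuple(c / length for c in normal)
    basis = sh9_basis(unit)
    return sum(a * l * b for a, l, b in zip(LAMBERT_BAND, light_coeffs, basis))
```

For example, uniform lighting of unit radiance has a single non-zero coefficient, `math.sqrt(4 * math.pi)`, in the first slot, and `irradiance` then returns π for every normal, which matches the analytic irradiance under a uniform unit-radiance environment. Multiplying the result by albedo/π would give the outgoing Lambertian radiance.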

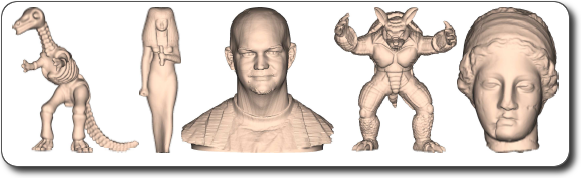

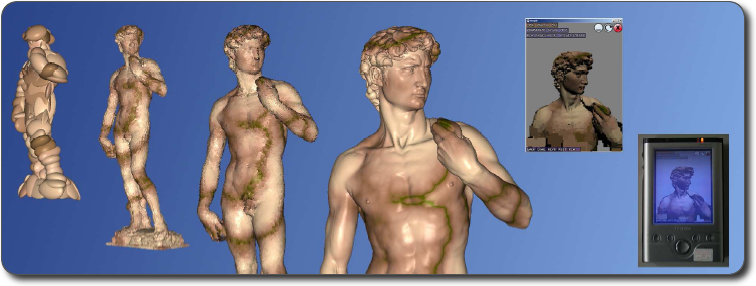

We have expertise in acquisition of 3D objects and scenes using a variety of techniques. We have worked on acquisition of 3D objects from structured light patterns as well as laser scanners. We have also developed new algorithms, based on recent advances in computer vision and graphics, for 3D modeling of buildings as well as tracking of people from multiple cameras, both stationary and mobile.


Scene Reconstruction, People Tracking, and Rendering from Video (joint work with SET Corporation)