An Interactive Ray Tracer

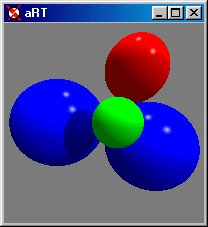

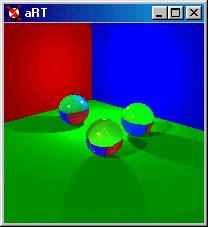

In order to design an adaptive or interactive ray tracer (aRT), it is necessary to understand ray tracing. Ray tracing is a technique for rendering images of three-dimensional scenes that simulates photons of light. In a ray tracer, each pixel of the rendered picture is treated as an individual photon or ray of light that is traced through a three-dimensional scene as it hits and bounces off of various objects in the scene. With each item that the ray interacts with, part of the light is reflected and part of the light is refracted. The degree of realism that can be achieved is directly proportional to the accuracy of the descriptive model of the scene and the number of times that a ray is allowed to hit something and bounce. The more complex the model and the more times a ray is allowed to bounce directly increases the realism of the image that will be achieved. With this formula, comes a cost. With every complexity and every level of recursive bouncing comes an increase in the computational time that will be required to generate an image. Thus, ray tracing is considered to be inherently slow.- Immediate Feedback - Providing the user with

immediate feedback is required if interaction is to be added.

By rendering at extremely low resolutions first and

incrementally re-rendering at increasing resolutions, the user has

the opportunity to 'see' the image before it is completed. The

user may then make adjustments in camera placement and orientation

prior to substantial, possibly wasted, computations being

performed. The following images show, in detail, how aRT

displays the image as it is rendered.

Click on an image to see how aRT renders incrementally

- Pixel Memory - In the traditional ray tracing variant,

each pixel of the final image is calculated and drawn to a file or

screen. Moving this to incrementally increasing the resolution of

the image, naively implies that the image is rendered at one

resolution and then re-rendered at a higher resolution. While this

works, it repeatedly retraces rays that have already been traced

at lower-levels of resolution. The following table displays the

number of times each ray in a 16x16 pixel region would be traced

following this naive approach:

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 4 1 2 1 2 1 2 1 4 1 2 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 2 1 2 1 4 1 2 1 2 1 2 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 4 1 2 1 2 1 2 1 4 1 2 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 2 1 2 1 2 1 2 1 2 1 2 1 2 16x16 pixel information and the number of times

a ray will be retraced using the naive approach

Looking at this mathmatically, (330-256)/256 = 29% of rays are retraced at least once in this naive model. To eliminate this duplication of work, aRT needs to store pixel information as it works thus eliminating the need to retrace rays and thereby gaining a memory of what it has done before.

- 'Beyond the Bounds' Memory - Extending the Pixel Memory

beyond the bounds of the image is a very important step in

allowing aRT to remember what it has done in relation to

repositioning the camera. It allows the camera to accidentally be

moved beyond a particular positon and back again without aRT

forgetting everything it has done in the process. The following

pictures shows the relationship between aRT's memory and the

actual image.

aRT's Memory Regions

Camera Rotation

Camera rotation is like turning your head to look at something slightly to the right of what you are currently looking at. Nothing in what you see has changed, just the 'center' of your view has moved. Thus as the camera rotates around a single point, the perspective between objects in the scene remains the same. Simply rotating the camera does not change the information that has already been computed, it would simply relocates it's position in the image or move it out of the bounds of the image altogether. Thus, the greatest amount of information already computed can be reused.

Camera rotation is implemented in two steps. First, the current

image is shifted in the direction of movement and then rendering

resumes at a lower resolution, incrementally increasing over

time. Portions of the image that have been rendered at a higher

resolution are not re-rendered until that higher resolution is reached

by all areas of the image.

Click on an image to see aRT implements camera rotation

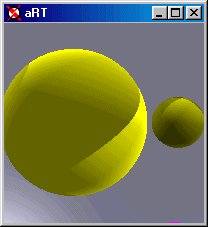

Zooming In and Out

Zooming in or out is the other motion that was added to aRT. As the camera moves forward (or backwards), the relationship between objects can change dramatically. Objects or parts of objects that were previously hidden can suddenly become visible. This change in the relationships between objects makes it much harder to provide instant feedback to the user.

As the distance from the center of the image increases, so does the

probability that the relationship between objects have changed. aRT

tries to take this into consideration when it zooms in (or out) by

first reducing (increasing) the current image and displaying it to the

user. This provides the immediate feedback to the user, then the

resolution of the image that is rendered is decreased as the distance

from the center increases. In this case, no actual information is

remembered and all rays are re-rendered since all rays are suspect.

Click on the right image to see aRT zoom in

and the left image to see aRT zoom out

Many Thanks

I would like to extend thanks to the following people for their assistance through the completion of this project:- The Senior Summer Scholars Program in conjuction with the Undergraduate Dean's Office for financial support.

- My advisor, Dr. David Mount, for his ever encouraging direction and advice.

- My husband, Theodore A. Jump, for his moral and emotional support.

- Nancy L. Debnam, Dr. Michelle Hugue, and Britt McAlister for all of their encouragement and their red pens.

Useful Web Pages

Bibliography

- Foley, James D., van Dam, Andries, Feiner, Steven K., & Hughes, John F. Computer Graphics: Principles and Practice (Second Edition in C). Addison-Wesley Publishing Company. 1996.

- Glassner, Andrew S. An Introduction to Ray Tracing. Academic Press Limited, San Diego, CA. 1989.

- Hearn, Donald, & Baker, M. Pauline. Computer Graphics. Prentice Halls, Englewood Cliffs, NJ. 1994

- Mount, David. Lecture Notes from CMSC427/828M. Spring 1997.

- Samet, Hanan. The Design and Analysis of Spatial Data Structures. Addison-Wesley, Reading, MA. 1990.

- Silicon Graphics, Inc. OpenGL WWW Center (http://www.sgi.com/Technology/OpenGL/). 1998.

- Wilt, Nicholas P. Object-Oriented Ray Tracing in C++. John Wiley & Sons, Inc. 1994.