Memory for dummies like me!

When I was young I used to play with my parents' computer. It was state of the art! We had the best games available. Our computer had more memory and was faster than any other on our block. That good old Commodore 64 could not be beat. The only problem was that everything had to be on disks because we didn't have enough memory for even a simple program. With 64 kilobytes of memory we could do what was needed, but today you need 4 megabytes just to turn your computer on! For most people there is a simple answer to any question about memory: more is better, less is not. But for those of us who really want to get it, with no more stupid car salesman telling us what is good, this will let us know what is up. I will help you understand all the great key words, so that there won't be a car salesman out there who can sucker you into buying a lousy computer (or memory).

When most people think about main memory they think of RAM, random access memory. The most common type is DRAM, dynamic random access memory. RAM is used to hold the temporary instructions and data needed to complete tasks. When you think of memory, try to visualize an office with a desk, chairs, a filing cabinet, and a potted plant.

The part of the office you would consider your main memory is the desktop, which offers fast and easy access to the files and information you are currently working on. The filing cabinet represents the hard disk or any other high-capacity storage unit (such as a Zip disk or DVD). And lastly, the potted plant represents a plant that is in a pot.

Physically, memory is built from integrated circuits, or chips. Each of these chips is DRAM, dynamic random access memory, which is by far the most common type of the lot. On each chip there are many "cells", each of which contains one transistor and one capacitor. Each cell represents just one bit. To go back up a level, a memory module carries a number of these DRAM chips. One of the common products is called a SIMM (single in-line memory module), although there are other types like DIMMs (dual in-line memory modules). A typical SIMM holds many of these DRAM chips on a small printed circuit board, which is held in place by a socket on the motherboard.

This is a small representation of a memory chip

SIMMs and DIMMs and the differences.

The main difference between SIMMs and DIMMs (besides the S and D) is in how each one handles the data path from the CPU, central processing unit. First, SIMMs come in two different configurations, which depend on the number of pins along the bottom of the module. The two types have either 30 or 72 pins, of either tin or gold, and the number of pins determines how much data the module can handle at once. It is this data width that makes SIMMs more difficult to use than DIMMs. A 30 pin SIMM can only handle an 8-bit data path, although most of today's CPUs need 32 bits. One strategy was to make the 30 pin SIMMs work in groups. If you have four 30 pin SIMMs, each handling 8 bits, together they give a 32-bit data path (8 x 4 = 32). Because the practicality of upgrading four 30 pin SIMMs at a time is non-existent, the designers came up with a 72 pin SIMM that handles the 32-bit data path on its own. The only problem was that these are larger than the 30 pin ones, because of the number of pins. Well, good is never good enough, so they thought of a new solution. You guessed it... DIMMs.

DIMMs look similar, but the difference is that they use both sides of the module to hold pins, which means they can hold at least twice as many pins per module. Using the same ideas as above, picture two 72 pin SIMMs back to back. This would create a 144 pin DIMM (72 on each side). There is also a 168 pin DIMM, which has 84 pins per side. Most of the same properties hold: if you have a 64-bit data path, one of these 32-bit 144 pin DIMMs is not quite enough. So you can group them just like the SIMMs. Two 144 pin DIMMs can handle a 64-bit data path (2 x 32 = 64).
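As a quick check on the grouping arithmetic, here is a minimal Python sketch. The function name `modules_per_bank` is my own invention, and the widths are simply the ones quoted above, so treat it as an illustration rather than a sizing tool.

```python
def modules_per_bank(bus_width_bits, module_width_bits):
    """Return how many identical modules must be grouped to fill the data path."""
    if bus_width_bits % module_width_bits != 0:
        raise ValueError("the data path must be a whole multiple of the module width")
    return bus_width_bits // module_width_bits


# Widths quoted in the text above.
print(modules_per_bank(32, 8))   # 30 pin SIMMs on a 32-bit data path
print(modules_per_bank(32, 32))  # 72 pin SIMM on a 32-bit data path
print(modules_per_bank(64, 32))  # 32-bit modules on a 64-bit data path
```

The point of the sketch is only that modules have to be added a whole group at a time, which is why the narrow 30 pin SIMMs were such a pain to upgrade.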

animated GIF by Donnie Heath

So what is it about DRAM that makes it ... it?

To recap from above and add a little bit more, DRAM is the most popular type of RAM used in main memory (as compared to SRAM, which is used in caches). A DRAM chip arranges its bits in a grid, with a fixed number of bits at each position in the grid. One example is a 4M x 4 DRAM, which has about four million positions with four bits at each. Picture the internals of the chip as a spreadsheet. It doesn't have to be Excel if you don't like Microsoft; any one will do. Each cell of this spreadsheet is reached by a row address and a column address.
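The spreadsheet picture can be sketched in a few lines of Python. The grid size here (2,048 rows by 2,048 columns, about four million entries of four bits each) is my assumption for illustration, and `split_address` and `capacity_in_bits` are made-up names; a real chip may be laid out differently.

```python
ROWS = 2048        # assumed number of rows in the grid
COLS = 2048        # assumed number of columns; 2048 * 2048 is about four million
BITS_PER_CELL = 4  # four bits at each row/column position, as in the example


def split_address(cell_number):
    """Split a flat cell number into the (row, column) pair used to reach it."""
    if not 0 <= cell_number < ROWS * COLS:
        raise ValueError("cell number is outside the chip")
    return divmod(cell_number, COLS)


def capacity_in_bits():
    """Total number of bits held by the whole grid."""
    return ROWS * COLS * BITS_PER_CELL


print(split_address(2049))
print(capacity_in_bits())
```

Splitting the address this way is what lets the chip be asked for a row first and a column second, which matters for the page modes described later.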

So how many types of DRAM are there? Golly Heck!!

Well, there is just one... Just kidding! There are many types of DRAM. In this section I will only go into a few of them. Just to throw some names at you, there are FPM (fast page mode), EDO (extended data out), SDRAM (synchronous DRAM), JEDEC SDRAM, DDR SDRAM, and many ... many more. In the following subsections I will describe several of the types from the little list I made.

Fast page mode is a modification of an older idea called page mode. To begin, I will explain what page mode is and then how fast page mode improved on it. Page mode is a special access mode in which an entire "page" (a row) of data is held open at one time. Column addresses can then read from the held page without waiting for the row address setup each time. Fast page mode improved on this by removing the column address setup time during the page cycle. This mode is still used in many systems because it reduces power consumption in memory. Because of several drawbacks, though, FPM is not in much demand and is the least desirable of the available DRAM types.
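To see why holding a page open helps, here is a rough timing sketch. The cycle counts (`ROW_SETUP`, `COL_ACCESS`) are invented numbers, not taken from any data sheet, and the model ignores everything except row setup and column access.

```python
ROW_SETUP = 3   # hypothetical cycles to set up a row address
COL_ACCESS = 2  # hypothetical cycles to read one column from an open row


def cycles_without_page_mode(accesses):
    """Every access pays for both the row setup and the column access."""
    return len(accesses) * (ROW_SETUP + COL_ACCESS)


def cycles_with_page_mode(accesses):
    """Row setup is paid only when the access moves to a different row (page)."""
    total = 0
    open_row = None
    for row, _column in accesses:
        if row != open_row:
            total += ROW_SETUP
            open_row = row
        total += COL_ACCESS
    return total


# Three reads from row 5, then one read from row 9.
reads = [(5, 0), (5, 1), (5, 2), (9, 0)]
print(cycles_without_page_mode(reads))  # 20
print(cycles_with_page_mode(reads))     # 14
```

The saving comes entirely from the reads that stay on the same page, so a program that jumps around between rows gets little benefit.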

Extended data out DRAMs work very similarly to FPM DRAMs, because both handle a "page" of data in the same way. The primary advantage of EDO DRAM over FPM DRAM is that EDO holds the output data valid for a longer period of time, rather than letting it go invalid as soon as the signal ends. This lets a faster microprocessor handle more tasks by managing its time more effectively.

Synchronous DRAM (SDRAM) is the most recent of the types explained here. SDRAM provides a significant increase in performance over both FPM and EDO. It uses a clock to synchronize signal input and output on the memory chip. The clock on the CPU and the clock on the memory chip are synchronized, which increases the overall performance of the computer. Performance increases because the synchronized clocks make the time at which data becomes available completely predictable, so the CPU can be doing other jobs while waiting for the information to arrive.

Just remember that these are not the only ones out there. There are a large number of different types of memory to choose from, so don't get suckered into buying something that is not right for you or your computer. Hopefully this small section helps you find what you need and not what someone else says you need.

A little bit about buffered and non-buffered

When purchasing any sort of hardware for your computer, most of the requirements related to memory depend on the motherboard design. This is the case with buffered and non-buffered DIMMs. Some motherboards have buffers for the control signals that the chips use, and others rely on the modules themselves to have the buffers. The catch is that there must be one and only one buffer: a motherboard with buffers will only work with modules that do not have them, and a motherboard without buffers needs modules that do. When you are looking to buy a new module or board, make sure you know what you already have so you don't get the wrong type.

Everyone enjoys a parity - an extra bit won't hurt

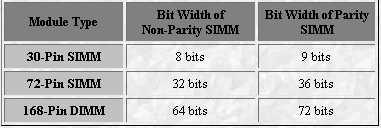

Memory modules come in two basic flavors of error detection, parity and non-parity, although there are several variations of them. Non-parity is the easiest to describe because it is exactly what you would normally expect a chip to be: it contains exactly one bit of memory for every bit of data to be stored. In parity memory, each byte has an extra bit which is used for error detection but not correction. Later I will explain error checking and correction (ECC), but for now here is a small table to explain the difference between the bits of a non-parity and a parity SIMM:

There are two types of parity, odd and even, and they both function in similar ways. Here is another table to explain how odd and even parity work.

by Kingston Technology Company

Parity is useful in detecting errors, but it does have several drawbacks. One of them is that it cannot correct errors, just tell you that they exist. Another is that it can miss errors. For example, what if two bits were invalid? Say one of the bits was a 1 and is now a 0, whereas the other bit was a 0 and is now a 1. Both types of parity would fail to detect the error, but that doesn't happen very often.
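Here is a small Python sketch of how a parity bit is worked out and checked, including the two-bit case that slips through. The function names and the sample byte are mine, chosen only for illustration.

```python
def parity_bit(data_bits, even=True):
    """Return the extra bit stored alongside the data bits."""
    ones = sum(data_bits)
    bit_for_even = ones % 2  # makes the total count of 1s even
    return bit_for_even if even else 1 - bit_for_even


def looks_valid(data_bits, stored_parity, even=True):
    """Recompute the parity bit and compare it with the stored one."""
    return parity_bit(data_bits, even) == stored_parity


byte = [1, 0, 1, 1, 0, 0, 1, 0]
stored = parity_bit(byte)  # even parity for the original byte

one_flip = [0, 0, 1, 1, 0, 0, 1, 0]   # first bit went from 1 to 0
two_flips = [0, 1, 1, 1, 0, 0, 1, 0]  # first bit 1 -> 0, second bit 0 -> 1

print(looks_valid(byte, stored))       # True
print(looks_valid(one_flip, stored))   # False: the error is caught
print(looks_valid(two_flips, stored))  # True: the error is missed
```

The same thing happens with odd parity, because the two flips leave the count of 1s unchanged either way.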

But don't forget about the ECC (Error Checking and Correction)

Parity is also the basis of a more advanced form of error detection and correction called ECC. Because both parity memory and ECC memory can support error checking and correction, there is a great deal of confusion about the differences between the two... especially since they are often quoted as having the same specifications. Although they appear to be the same, the biggest difference between the two types of memory is that ECC memory does not work in parity-only mode. Both types (ECC and parity) can work in ECC mode, but only parity memory can also work in plain parity mode. ECC is capable of detecting memory errors of up to 4 bits, but it can only correct a 1-bit error; it reports multiple-bit errors as parity errors.
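To make "detect versus correct" concrete, here is a small sketch of a Hamming(7,4) code, a classic textbook scheme that uses several parity bits so that a single flipped bit can be located and fixed. This is my stand-in for illustration only: the text above does not say which code ECC memory uses, and real ECC memory works on much wider words. All function names are made up.

```python
def hamming74_encode(data):
    """Turn four data bits into a 7-bit codeword (list index 0 is position 1)."""
    d0, d1, d2, d3 = data
    p1 = d0 ^ d1 ^ d3  # covers positions 1, 3, 5, 7
    p2 = d0 ^ d2 ^ d3  # covers positions 2, 3, 6, 7
    p4 = d1 ^ d2 ^ d3  # covers positions 4, 5, 6, 7
    return [p1, p2, d0, p4, d1, d2, d3]


def syndrome(codeword):
    """XOR together the positions that hold a 1; zero means no error was seen."""
    result = 0
    for position, bit in enumerate(codeword, start=1):
        if bit:
            result ^= position
    return result


def hamming74_correct(codeword):
    """Return a copy with a single-bit error repaired, plus the error position."""
    position = syndrome(codeword)
    fixed = list(codeword)
    if position:
        fixed[position - 1] ^= 1
    return fixed, position


good = hamming74_encode([1, 0, 1, 1])
damaged = list(good)
damaged[4] ^= 1  # flip the bit at position 5
repaired, where = hamming74_correct(damaged)
print(where, repaired == good)
```

Plain parity would only have said "something is wrong" here; the extra parity bits are what point at the exact position. Note that this small code on its own cannot tell a two-bit error from a one-bit error, which is one reason real ECC schemes add more check bits.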

It's not fast enough... well let's make it faster!

So the memory is too slow. People have ignored what Amdahl suggested, that memory capacity should grow linearly with CPU speed. With this balance a system would run smoothly. For this linear growth with CPU speed to happen, the designers of RAM had better get off their butts. Memory designers are far behind the demanded improvement of 60% per year; they only manage 7% per year. With this slow improvement rate, there have been a couple of ideas to improve bandwidth, which would in turn improve the speed of main memory. Here are the different ideas on improving main memory performance:
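Before getting to those ideas, it helps to see how quickly a 60% and a 7% yearly improvement drift apart. This is a back-of-the-envelope sketch: the two rates come from the paragraph above, while the function name and the choice of years are mine.

```python
CPU_DEMAND_GROWTH = 0.60  # 60% per year, from the text
MEMORY_GROWTH = 0.07      # 7% per year, from the text


def gap_after(years):
    """Ratio of demanded speed to delivered memory speed, starting from 1.0."""
    demanded = (1 + CPU_DEMAND_GROWTH) ** years
    delivered = (1 + MEMORY_GROWTH) ** years
    return demanded / delivered


for years in (1, 5, 10):
    print(years, round(gap_after(years), 1))
```

With these rates the gap widens by roughly half again every year (1.60 / 1.07 is about 1.5), so after a decade the shortfall is a factor of more than fifty.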

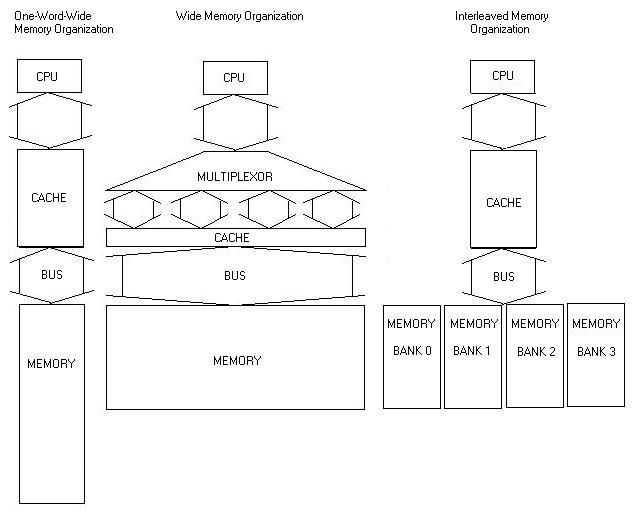

The first idea is to make main memory wider. To understand it, you must first understand a little bit about how cache is organized and works. Supposing you already understand cache, you would know that it is often organized so that its physical width is the same as the width of one word. You can look at the pictures for help in visualizing the sizes. Suppose you doubled or even quadrupled the width of the cache and main memory; this would produce a larger bandwidth (either doubled or quadrupled). Of course there are drawbacks, as with pretty much any improvement, and widening the memory and cache produces two problems. First, you would need to add a multiplexer on the data path before it reaches the CPU, because the CPU still reads just one word at a time and has to be given part of the data at a time. Second, after doing all of this (it might take a while), you might want to upgrade your memory, but to keep the higher bandwidth you would have to double or even quadruple your memory size.

Another way to increase memory bandwidth is to use the potential bandwidth of all the DRAMs in your computer, an arrangement known as interleaved memory. Instead of using just one at a time, like in the first picture, you could organize the memory into banks and read or write multiple words at a time. Each bank is usually one word wide, so the bus and cache do not need to change, but now you can send addresses to all the banks and read from them simultaneously. The banks are useful for writes as well. If you are writing back-to-back, you would normally have to wait for the previous write to finish, but with banks each write can take one clock cycle (as long as they go to different banks). This is especially useful if you have a write-through strategy. The problems with this technique are that a system like this is very expensive, and once again you have to double your memory if you want to upgrade.
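One way to picture the banks is as a rule for deciding which bank owns each word. The sketch below assumes the simplest rule I know of, where neighboring word addresses go to neighboring banks (address modulo the number of banks); the text does not say which rule a given system uses, and the bank count and function names are made up.

```python
NUM_BANKS = 4  # assumed for illustration


def locate(word_address, num_banks=NUM_BANKS):
    """Return (bank, row inside that bank) for a word address."""
    return word_address % num_banks, word_address // num_banks


def can_overlap(addresses, num_banks=NUM_BANKS):
    """True if every address in the group lands in a different bank."""
    banks = [locate(address, num_banks)[0] for address in addresses]
    return len(set(banks)) == len(banks)


print([locate(address)[0] for address in range(8)])  # banks used by words 0..7
print(can_overlap([0, 1, 2, 3]))  # four neighboring words
print(can_overlap([0, 4]))        # two words that share a bank
```

Under this rule, four words in a row can be fetched side by side, while two words exactly four apart have to take turns on the same bank.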

Independent memory banks are a continuation of interleaved memory. The difference is in the added hardware and, obviously, in the resulting enhancement. The idea is to allow multiple independent accesses to multiple independent banks, which are controlled by separate memory controllers. This means that each bank needs separate address lines and possibly a separate data bus to be completely independent. Multiprocessors are motivated to use this architecture because they share common memory.

Avoiding memory bank conflicts

When using memory banks there will be no contention for a bank if the accesses are sequential, because they all go to different banks. But if the accesses step through memory with an even stride, there is a problem, because several of them land on the same bank. One way to reduce the problem is to statistically lower the chances of hitting the same bank by having a large number of banks. This does not fix the problem, but it does reduce the chances of accesses going to one bank. A further solution is to have a prime number of memory banks. The problem with a prime number of banks is that you have to change the way divide and modulo work, since it is not as easy as just looking at the bits anymore. The proofs for all of these are based on the Chinese Remainder Theorem, which I will not go into.
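A short experiment shows the effect. This sketch assumes that a word's bank is its address modulo the number of banks, and it simply counts how many different banks a run of strided accesses touches; the function name and the bank counts (8 versus 7) are my own choices for illustration.

```python
def banks_touched(stride, num_banks, accesses=None):
    """Return the set of banks hit by accesses at addresses 0, stride, 2*stride, ..."""
    if accesses is None:
        accesses = num_banks
    return {(i * stride) % num_banks for i in range(accesses)}


for stride in (1, 2, 8):
    print(
        "stride", stride,
        "| 8 banks:", len(banks_touched(stride, 8)),
        "| 7 banks:", len(banks_touched(stride, 7)),
    )
```

With 8 banks, a stride of 2 uses only half the banks and a stride of 8 hammers a single bank, while with 7 banks all three strides spread across every bank. A prime count is not magic, though: a stride that is a multiple of 7 would still pile onto one bank.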

So far, none of the techniques that I have talked about is specific to a memory type; they can work on all types and were in use before DRAM was around. Because DRAM has timing signals, the chip itself can also be modified, which gives you the page mode, extended data out, and synchronous DRAMs that I talked about above.

Well, now you can brag that you know how to make the fastest RAM in your neighborhood. The only problem is that tomorrow everything will be different: there will be faster, smaller, more expensive RAM which you will have to read about and understand (don't let the car salesmen get a jump on you).

© 1998, Neil Anstey

Permission is granted for use of unmodified page for academic purpose