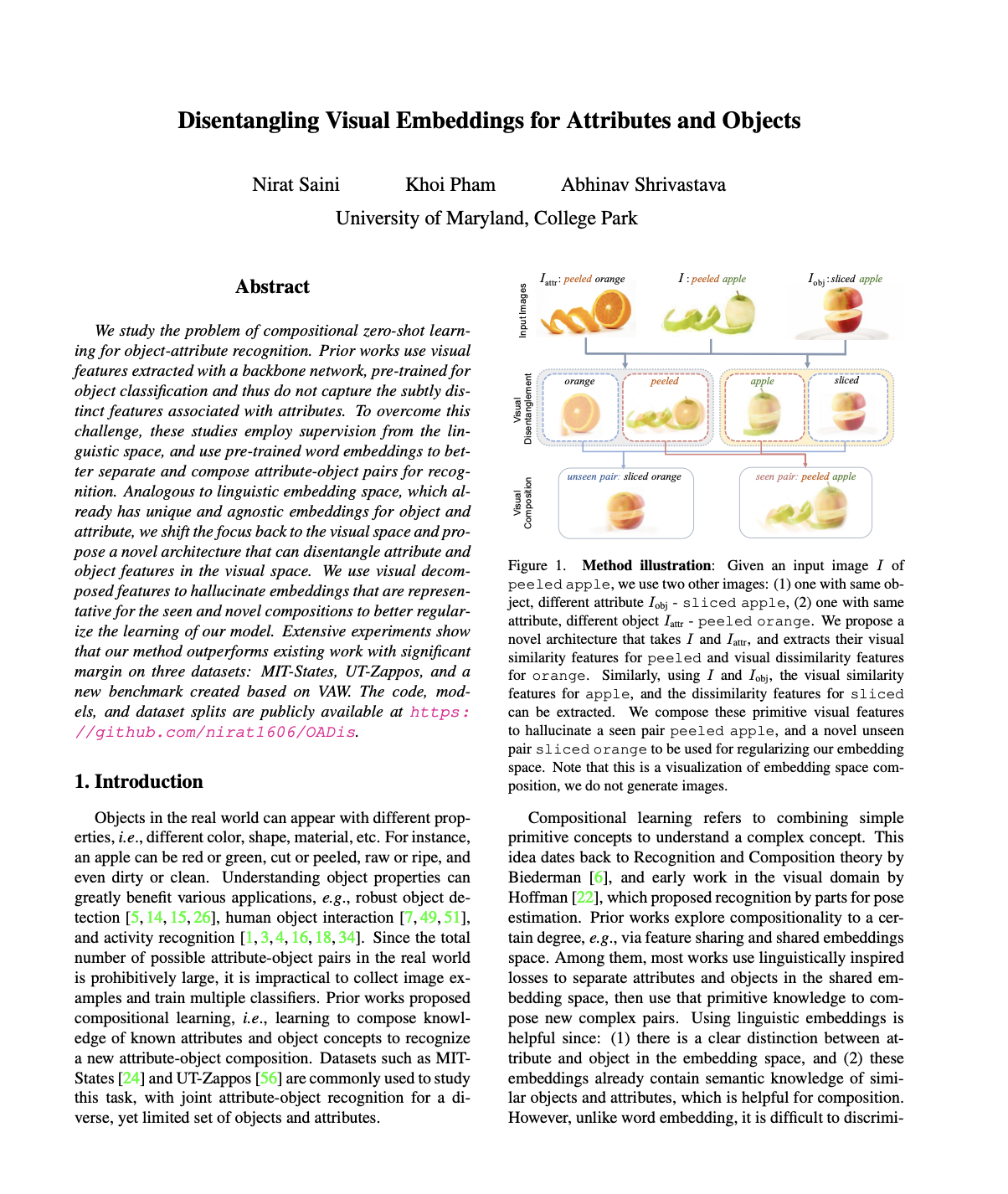

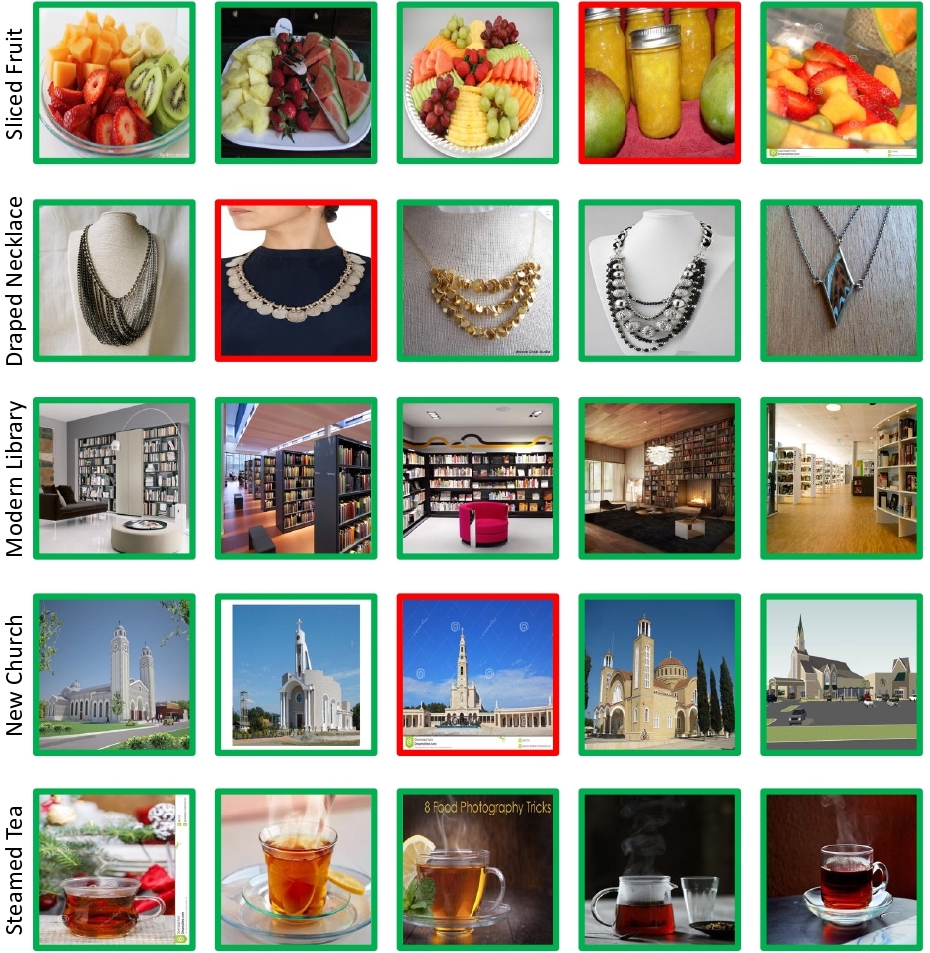

Qualitative Results

Retrieval of nearest 5 images using hallucinated compositions of unseen attributes and objects

MIT-States Results

Using the hallucinated composition of unseen attribute and object embeddings, we retrieve 5 nearest neighbors. For example, in first row, images are shown for sliced fruit. Images with incorrect labels are in red.

UT-Zappos Results

Using the hallucinated composition of unseen attribute and object embeddings, we retrieve 5 nearest images. Similar to left, images with incorrect labels are in red.