What are poisoning attacks?

Before deep learning algorithms can be deployed in security-critical applications, their robustness against adversarial attacks must be put to the test. The existence of adversarial examples in deep neural networks (DNNs) has triggered debates on how secure these classifiers are. Adversarial examples fall within a category of attacks called evasion attacks. Evasion attacks happen at test time – a clean target instance is modified to avoid detection by a classifier, or spur misclassification.

Poison attacks work in cases where adversarial perturbations don’t. Consider the case of fooling a face recognition system. Staff at a security gate can ask people to remove their hats/sunglasses, preventing a would-be attacker from modifying her appearance at test time. Similarly, an attacker might want to change the identity of an unsuspecting person (to get them flagged by airport security) without their cooperation/knowledge. Once again, the attacker can’t make adversarial perturbations to the test-time input.

Such systems are still susceptible to data poisoning attacks. These attacks happen at training time; they aim to manipulate the performance of a system by inserting carefully constructed poison instances into the training data. Sometimes, a system can be poisoned with just one single poison image, and this image won’t look suspicious, even to a trained observer.

A complete description of these attacks can be found in our paper below.

Poison Frogs! Targeted Clean-Label Poisoning Attacks on Neural Networks.

Why clean-label attacks?

In “clean label” attacks, the poison image looks totally innocuous, and is labelled properly according to a human observer. This makes it possible to poison a machine learning dataset without having any inside access to the dataset creating process. Clean label attacks are a threat when…

- An attacker leaves an image on the web, and waits for it to be picked up by a bot that scrapes data. The image is then labelled by an expert, and placed into the dataset.

- A malicious insider wants to execute an attack that will not be detected by an auditor or supervisor.

- Unverified users can submit training data: this is the case for numerous malware databases, spam filters, smart cameras, etc.

For example, suppose a company asks employees to submit a photo ID for its facial recognition control system; an employee provides a poisoned photo, and this gives her back-door control of the face recognition system.

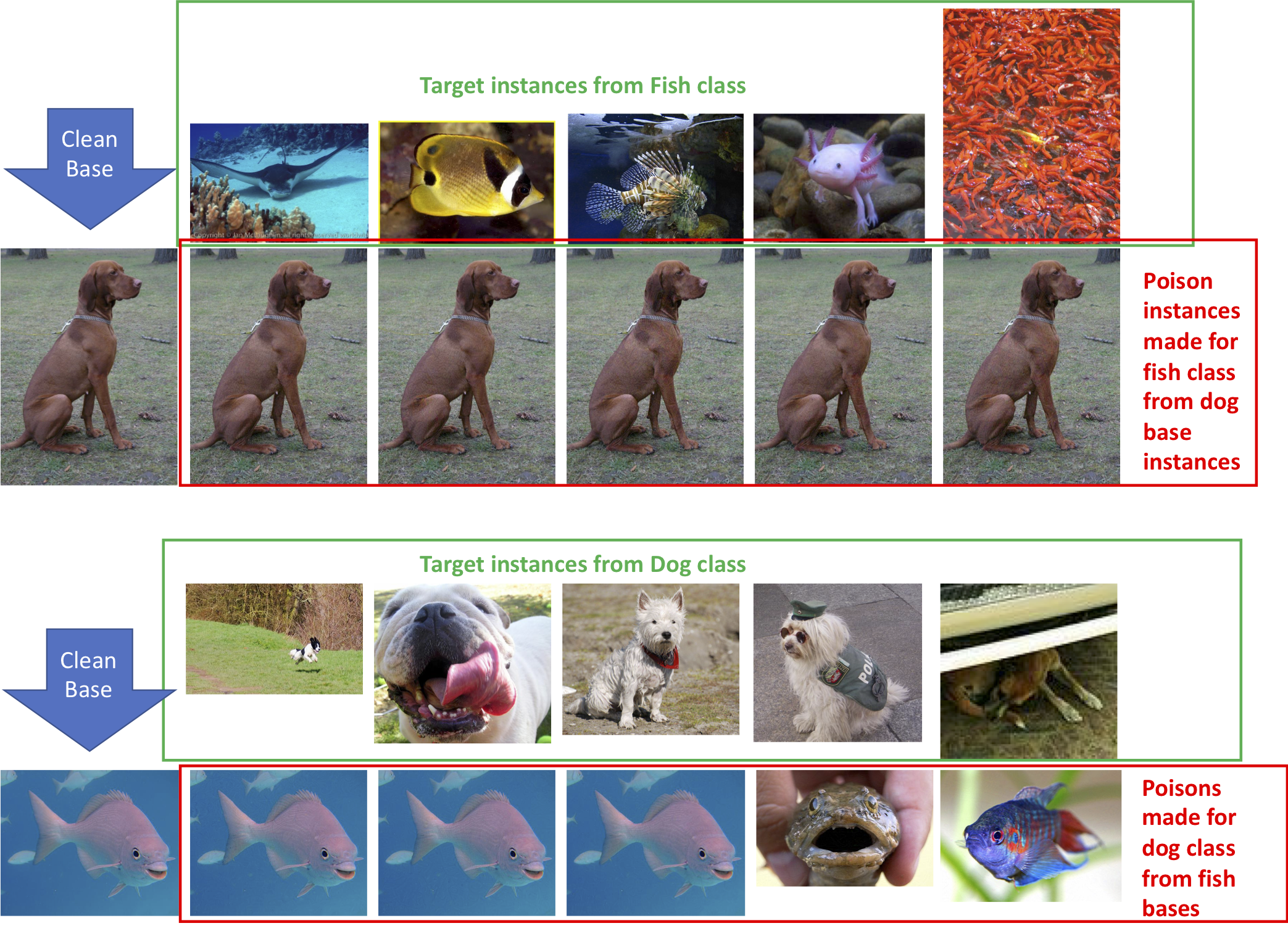

An example

At the top of this example, we attack fish images with poison dogs. Each of the fish images in the top row are correctly classified by an untainted neural classifier. We then poison this classified to control the classification of each fish. To do this, we create a poison dog image for every fish, each of which is shown in the second row of images. When each poison dog is added to the training set (and correctly labelled), the resulting classifier mistakes the corresponding fish for a dog. Note that the poison dogs look innocuous - to a human eye they cannot be discerned from the original non-poisonous dog.

The bottom two rows of images show the reverse experiment; we attack dogs with poison fish. Each of the dogs shown is mistaken for a fish when the corresponding poison fish image appears in the traning data.