Research in the Graphics and Visual Informatics Laboratory addresses issues in visual computing ranging from the theoretical to the applied. Our research is driven by applications in computer-aided proteomics and computer-aided engineering design. Here we give an overview of our ongoing and recent research in software systems and algorithms.

This paper presents an immersive geo-spatial social media system for virtual and augmented reality environments. With the rapid growth of photo-sharing social media sites such as Flickr, Pinterest, and Instagram, geo-tagged photographs are now ubiquitous. However, current systems for navigating them are unsatisfyingly one- or two-dimensional. In this paper, we present our prototype system, Social Street View, which renders geo-tagged social media in its natural geo-spatial context provided by immersive maps such as Google Street View. We present new algorithms for fusing and laying out the social media with geo-spatial renderings in an aesthetically pleasing manner, validate them with respect to visual saliency metrics, suggest spatio-temporal filters, and present a system architecture that streams geo-tagged social media and renders it across display platforms ranging from tablets, desktops, and head-mounted displays to large-area, room-sized curved tiled displays. The paper concludes by exploring several potential use cases, including immersive social storytelling, learning about culture, and crowd-sourced tourism.
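The paper's specific spatio-temporal filters are not described here. One plausible form is sketched below: keep only photos taken within a time window and within a radius of the viewer. The `Photo` record, its field names, and the use of the haversine great-circle distance are our assumptions for illustration, not the system's actual data model.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class Photo:
    """Hypothetical geo-tagged photo record (illustrative fields only)."""
    photo_id: str
    lat: float      # latitude in degrees
    lon: float      # longitude in degrees
    taken: datetime


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def spatio_temporal_filter(photos, lat, lon, radius_m, start, end):
    """Keep photos taken in [start, end] within radius_m meters of (lat, lon)."""
    return [
        p for p in photos
        if start <= p.taken <= end
        and haversine_m(lat, lon, p.lat, p.lon) <= radius_m
    ]


# Usage: photos from the last day within 200 m of a viewer's position.
now = datetime(2016, 7, 1, 12, 0)
photos = [
    Photo("near-recent", 38.9897, -76.9378, now),
    Photo("near-old", 38.9897, -76.9378, now - timedelta(days=30)),
    Photo("far", 40.7128, -74.0060, now),
]
kept = spatio_temporal_filter(photos, 38.9897, -76.9378, 200.0,
                              now - timedelta(days=1), now)
```

In a streaming system, a spatial index would replace the linear scan. The list comprehension only shows the predicate.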

Further details of this work are available here.

- Social Street View: Blending Immersive Street Views with Geo-Tagged Social Media, R. Du, S. Bista, and A. Varshney, ACM SIGGRAPH Conference on Web3D, 2016, pp 77-85.

We present PixelPie, a highly parallel geometric formulation of the Poisson-disk sampling problem on the graphics pipeline. Traditionally, generating a distribution by throwing darts and removing conflicts has been viewed as an inherently sequential process. In this paper, we present an efficient Poisson-disk sampling algorithm that uses rasterization in a highly parallel manner. Our technique is an iterative two-step process. The first step of each iteration rasterizes random darts at varying depths. The second step culls conflicting darts. Successive iterations identify and fill in the empty regions to obtain maximal distributions. Our approach maps well to the parallel and optimized graphics functions on the GPU and can easily be extended to perform importance sampling. Our implementation generates Poisson-disk samples at a rate of nearly 7 million samples per second on a GeForce GTX 580 and is significantly faster than state-of-the-art maximal Poisson-disk sampling techniques.
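PixelPie performs both steps with GPU rasterization and depth testing. The loop below is only a plain-Python CPU sketch of the two-step logic, assuming a unit square and a simple conflict rule of our own choosing. A dart is culled if it lies within r of a sample from an earlier iteration or of any shallower dart in the same batch. Each dart's test depends only on that fixed input, so a whole batch could be decided in parallel. The sketch does not check maximality.

```python
import math
import random


def poisson_disk_batches(r, darts_per_iter=200, iters=20, seed=0):
    """CPU sketch of batched dart throwing in the unit square.

    Each iteration (1) throws a batch of random darts, each with a random
    depth, then (2) culls every dart that lies within r of a sample accepted
    in an earlier iteration or of a shallower dart in the same batch.
    Because each dart's test uses only that fixed input, all darts in a
    batch could be decided independently (in parallel).
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(iters):
        # Step 1: random darts (depth, x, y), sorted shallowest first.
        darts = sorted((rng.random(), rng.random(), rng.random())
                       for _ in range(darts_per_iter))
        survivors = []
        for k, (_, x, y) in enumerate(darts):
            # Step 2a: conflict with a previously accepted sample?
            if any(math.hypot(x - sx, y - sy) < r for sx, sy in samples):
                continue
            # Step 2b: conflict with any shallower dart in this batch?
            if any(math.hypot(x - dx, y - dy) < r for _, dx, dy in darts[:k]):
                continue
            survivors.append((x, y))
        samples.extend(survivors)  # later iterations fill the remaining gaps
    return samples
```

Culling against shallower darts that are themselves culled is conservative. It leaves gaps, and the successive iterations then fill them.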

Further details of this work are available here.

- PixelPie: Maximal Poisson-disk Sampling with Rasterization, C.Y. Ip, M.A. Yalçın, D. Luebke, and A. Varshney, High-Performance Graphics, 2013, pp 17-26.

Visual exploration of volumetric datasets to discover the embedded features and spatial structures is a challenging and tedious task. In this paper we present a semi-automatic approach to this problem that works by visually segmenting the intensity-gradient 2D histogram of a volumetric dataset into an exploration hierarchy. Our approach mimics user exploration behavior by analyzing the histogram with the normalized-cut multilevel segmentation technique. Unlike previous work in this area, our technique segments the histogram into a reasonable set of intuitive components that are mutually exclusive and collectively exhaustive. We use information-theoretic measures of the volumetric data segments to guide the exploration. This provides a data-driven coarse-to-fine hierarchy for a user to interactively navigate the volume in a meaningful manner.
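The input to the segmentation is the intensity-gradient 2D histogram. A minimal way to build one for a small volume is sketched below, together with Shannon entropy as one example of an information-theoretic measure. Several details are our assumptions: nested-list storage `vol[z][y][x]`, central differences, uniform bins, and entropy as the measure. The normalized-cut segmentation itself is not shown, and the paper's exact measures may differ.

```python
import math
from collections import Counter


def intensity_gradient_histogram(vol, bins=8, vmax=1.0, gmax=1.0):
    """2D histogram over (intensity bin, gradient-magnitude bin).

    vol is a nested list indexed vol[z][y][x] with values in [0, vmax].
    Only interior voxels are used, so central differences stay in bounds;
    gradient magnitudes are clamped to gmax.
    """
    nz, ny, nx = len(vol), len(vol[0]), len(vol[0][0])
    hist = Counter()
    for z in range(1, nz - 1):
        for y in range(1, ny - 1):
            for x in range(1, nx - 1):
                gx = (vol[z][y][x + 1] - vol[z][y][x - 1]) / 2
                gy = (vol[z][y + 1][x] - vol[z][y - 1][x]) / 2
                gz = (vol[z + 1][y][x] - vol[z - 1][y][x]) / 2
                g = min(math.sqrt(gx * gx + gy * gy + gz * gz), gmax)
                i_bin = min(int(vol[z][y][x] / vmax * bins), bins - 1)
                g_bin = min(int(g / gmax * bins), bins - 1)
                hist[(i_bin, g_bin)] += 1
    return hist


def entropy_bits(counts):
    """Shannon entropy (in bits) of a histogram given as an iterable of counts."""
    counts = [c for c in counts if c > 0]
    total = sum(counts)
    return -sum(c / total * math.log2(c / total) for c in counts)
```

A homogeneous region collapses into a single histogram bin with zero entropy. A material boundary spreads voxels across bins at higher gradient magnitudes.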

Further details of this work are available here.

- Hierarchical Exploration of Volumes Using Multilevel Segmentation of the Intensity-Gradient Histograms, C.Y. Ip, A. Varshney, and J. JaJa, IEEE Transactions on Visualization and Computer Graphics, Vol. 18, No. 12, 2012, pp 2355-2363.

The field of visualization has addressed navigation of very large datasets, usually meshes and volumes. Significantly less attention has been devoted to the issues surrounding navigation of very large images. In the last few years the explosive growth in the resolution of camera sensors and robotic image acquisition techniques has widened the gap between the display and image resolutions to three orders of magnitude or more. This paper presents the first steps towards navigation of very large images, particularly landscape images, from an interactive visualization perspective. The grand challenge in navigation of very large images is identifying regions of potential interest. In this paper we outline a three-step approach. In the first step we use multi-scale saliency to narrow down the potential areas of interest. In the second step we outline a method based on statistical signatures to further cull out regions of high conformity. In the final step we allow a user to interactively identify the exceptional regions of high interest that merit further attention. We show that our approach of progressive elicitation is fast and allows rapid identification of regions of interest. Unlike previous work in this area, our approach is scalable and computationally reasonable on very large images. We validate the results of our approach by comparing them to user-tagged regions of interest on several very large landscape images from the Internet.

Further details of this work are available here.

- Saliency-Assisted Navigation of Very Large Landscape Images, C.Y. Ip and A. Varshney, IEEE Transactions on Visualization and Computer Graphics, Vol. 17, No. 12, 2011, pp 1737-1746.

Recent research in visual saliency has established a computational measure of perceptual importance. In this paper we present a visual-saliency-based operator to enhance selected regions of a volume. We show how we use such an operator on a user-specified saliency field to compute an emphasis field. We further discuss how the emphasis field can be integrated into the visualization pipeline through its modifications of regional luminance and chrominance. Finally, we validate our work using an eye-tracking-based user study and show that our new saliency enhancement operator is more effective at eliciting viewer attention than the traditional Gaussian enhancement operator.

Further details of this work are available here.

- Saliency-guided Enhancement for Volume Visualization, Y. Kim and A. Varshney, IEEE Visualization 2006, Baltimore, MD, October - November 2006.

Recent trends in parallel computer architecture strongly suggest the need to improve arithmetic intensity (the compute-to-bandwidth ratio) for greater performance in time-critical applications such as interactive 3D graphics. At the same time, advances in stream programming abstractions for graphics processors (GPUs) have enabled us to apply parallel algorithm design methods to GPU programming. Inspired by these developments, this paper explores the interactions between multiple data streams to improve arithmetic intensity and to address the input geometry bandwidth bottleneck in interactive 3D graphics applications. We introduce the idea of creating vertex and transformation streams that represent large point datasets through their interaction. We discuss how to factor such point datasets into a set of source vertices and transformation streams by identifying the most common translations among vertices. We accomplish this by identifying peaks in the cross-power spectrum of the dataset in the Fourier domain. We validate our approach by integrating it with a view-dependent point rendering system and show significant improvements in input geometry bandwidth requirements as well as rendering frame rates.
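The factoring idea can be sketched on a tiny integer 2D point set. First find the most frequent translation between vertex pairs, then split the points into source vertices S such that S + t is also present. The sketch counts pairwise differences directly. That count equals the autocorrelation of the point occupancy, which the paper locates via peaks in the Fourier domain instead. The integer coordinates, the 2D setting, and the helper names are assumptions for illustration only.

```python
from collections import Counter
from itertools import combinations


def canonical(d):
    """Map a translation and its negation to one representative."""
    dx, dy = d
    return d if dx > 0 or (dx == 0 and dy > 0) else (-dx, -dy)


def most_common_translation(points):
    """Return (translation, count) for the most frequent pairwise translation.

    Counting pairwise differences computes the autocorrelation of the point
    occupancy directly, in O(n^2); the paper instead finds its peaks in the
    Fourier domain.
    """
    counts = Counter(
        canonical((q[0] - p[0], q[1] - p[1])) for p, q in combinations(points, 2)
    )
    return counts.most_common(1)[0]


def factor(points, t):
    """Split points into sources S (with S + t also present) and a residual."""
    pts = set(points)
    sources = sorted(p for p in pts if (p[0] + t[0], p[1] + t[1]) in pts)
    covered = set(sources) | {(p[0] + t[0], p[1] + t[1]) for p in sources}
    return sources, sorted(pts - covered)


# Usage: a small pattern plus a copy shifted by (3, 1).
base = [(0, 0), (7, 2), (1, 9), (12, 5)]
pts = base + [(x + 3, y + 1) for x, y in base]
t, n = most_common_translation(pts)   # the shift (3, 1), shared by 4 pairs
sources, residual = factor(pts, t)    # the 4 base points, nothing left over
```

Here eight input vertices are represented as four source vertices plus one translation, which is the kind of bandwidth saving the paragraph describes.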

Further details of this work are available here.

- Vertex-Transformation Streams, Y. Kim, C.H. Lee, and A. Varshney, Graphical Models, Vol. 68, No. 4, July 2006, pp 371-383.

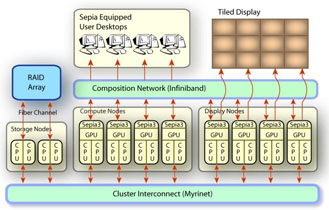

We are building a high-performance computing and visualization cluster that takes advantage of the synergies afforded by coupling central processing units (CPUs), graphics processing units (GPUs), displays, and storage. The infrastructure supports a broad program of computing research on understanding, augmenting, and leveraging the power of heterogeneous vector computing enabled by GPU co-processors. The driving force is the availability of cheap, powerful, and programmable GPUs, made possible by their commercialization for interactive 3D graphics applications such as games. The CPU-GPU coupled cluster is enabling several new research directions in computing. Through a visualization-assisted computational steering environment, it is also fostering a better understanding of, and faster solutions to, several existing interdisciplinary problems. It also has the potential to move several high-value problems to a better position on the price-performance curve.

Our ongoing research on this cluster falls into several broad interdisciplinary computing areas. We are exploring the visualization of very large (terabyte-sized) datasets and algorithms for parallel rendering. In high-performance computing we are developing and analyzing efficient algorithms for querying very large scientific simulation datasets as well as modeling complex systems when uncertainty is included in models. We plan to use our infrastructure for several applications in computational biology including computational modeling and visualization of proteins, conformational steering in protein structure determination, protein folding, drug design, large-scale phylogeny visualization, and sequence alignment. We are also using the cluster for applications in real-time 3D virtual audio, real-time computer vision, and for efficient compilation of signal processing algorithms.

Further details of this work are available here.

Research over the last decade has built a solid mathematical foundation for the representation and analysis of 3D meshes in graphics and geometric modeling. Much of this work, however, does not explicitly incorporate models of low-level human visual attention. In this research we introduce mesh saliency as a measure of regional importance for graphics meshes. Our notion of saliency is inspired by low-level cues of the human visual system. We define mesh saliency in a scale-dependent manner using a center-surround operator on Gaussian-weighted mean curvatures. We observe that this definition captures what most people would classify as visually interesting regions on a mesh. Our mesh saliency operator computes a perception-inspired importance measure. Compared with a purely geometric measure of shape such as curvature, it yields more visually pleasing results when processing and viewing 3D meshes. We discuss how mesh saliency can be incorporated into graphics applications such as mesh simplification and viewpoint selection, and present examples of the visually appealing results it produces.
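The center-surround definition can be sketched as follows, assuming per-vertex mean curvatures are already computed. G(v, σ) is the Gaussian-weighted average of curvature over the vertices within distance 2σ of v, with weights exp(-d²/(2σ²)). The single-scale saliency is |G(v, σ) - G(v, 2σ)|. Only one scale is shown. The multi-scale combination and normalization described in the paper are omitted, and the brute-force neighbor search with Euclidean distances is a simplification.

```python
import math


def gaussian_weighted_curvature(points, curv, i, sigma):
    """Gaussian-weighted mean of curvature around vertex i, using the
    vertices within Euclidean distance 2*sigma of it."""
    vx, vy, vz = points[i]
    num = den = 0.0
    for (x, y, z), c in zip(points, curv):
        d2 = (x - vx) ** 2 + (y - vy) ** 2 + (z - vz) ** 2
        if d2 < (2.0 * sigma) ** 2:
            w = math.exp(-d2 / (2.0 * sigma * sigma))
            num += c * w
            den += w
    return num / den  # den > 0: vertex i itself always contributes weight 1


def mesh_saliency(points, curv, sigma):
    """Single-scale center-surround saliency |G(v, sigma) - G(v, 2*sigma)|."""
    return [
        abs(gaussian_weighted_curvature(points, curv, i, sigma)
            - gaussian_weighted_curvature(points, curv, i, 2.0 * sigma))
        for i in range(len(points))
    ]
```

Uniform curvature produces zero saliency everywhere, even where curvature is high. A vertex whose curvature differs from its surroundings stands out, which is the distinction from using curvature alone.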

Further details of this work are available here.

- Mesh Saliency, C. H. Lee, A. Varshney, and D. Jacobs, ACM SIGGRAPH 2005 (Accepted).

We propose a new approach to progressively compress time-dependent geometry. Our approach exploits correlations in motion vectors to achieve better compression. We use unsupervised learning techniques to detect good clusters of motion vectors. For each detected cluster, we build a hierarchy of motion vectors using pairwise agglomerative clustering, and succinctly encode the hierarchy using entropy encoding. We demonstrate our approach on a client-server system that we have built for downloading time-dependent geometry.
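The hierarchy-building step can be sketched as pairwise agglomerative clustering. Repeatedly merge the two closest clusters of motion vectors until one root remains, recording each merge. We assume centroid linkage with Euclidean distance, which the text does not specify. The preceding unsupervised cluster detection and the entropy encoding of the hierarchy are not shown.

```python
import math


def agglomerate(vectors):
    """Pairwise agglomerative clustering with centroid linkage.

    Returns the merge sequence as (cluster_a, cluster_b) pairs, where each
    cluster is a frozenset of input indices; the last merge forms the root.
    """
    clusters = {frozenset([i]): tuple(v) for i, v in enumerate(vectors)}  # members -> centroid
    merges = []
    while len(clusters) > 1:
        keys = list(clusters)
        # Closest pair of current clusters by centroid distance.
        a, b = min(
            ((p, q) for i, p in enumerate(keys) for q in keys[i + 1:]),
            key=lambda pq: math.dist(clusters[pq[0]], clusters[pq[1]]),
        )
        na, nb = len(a), len(b)
        # Size-weighted centroid of the merged cluster.
        centroid = tuple((na * x + nb * y) / (na + nb)
                         for x, y in zip(clusters[a], clusters[b]))
        del clusters[a], clusters[b]
        clusters[a | b] = centroid
        merges.append((a, b))
    return merges
```

The merge sequence defines a binary tree. Each internal node could store its centroid, and each child could store a small residual, which is what makes the hierarchy compressible.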

Further details of this work are available here.

- Unsupervised Learning Applied to Progressive Compression of Time-Dependent Geometry, T. Baby, Y. Kim, and A. Varshney, Computers and Graphics, Vol. 29, No. 3, June 2005, pp 451-461.

Protein docking is a Grand Challenge problem that is crucial to our understanding of biochemical processes. Several protein docking algorithms use shape complementarity as the primary criterion for evaluating docking candidates. The intermolecular volume and area between docked molecules are useful measures of shape complementarity. In this research we discuss an algorithm that uses graphics hardware to interactively compute the intermolecular negative volume and the area of the docking site. Our method leverages recent advances in 3D graphics hardware to achieve interactive performance. Using this method, scientists can interactively study various possible docking conformations and visualize the quality of the steric fit.

Further details of this work are available here.

- Computing and Displaying Intermolecular Negative Volume for Docking, C. H. Lee and A. Varshney, Scientific Visualization: Extracting Information and Knowledge from Scientific Datasets, editors G.-P. Bonneau, T. Ertl, and G. M. Nielson, Springer-Verlag.

We introduce Light Collages, a lighting design system for effective visualization based on principles of human perception. Artists and illustrators enhance the perception of features with lighting that is locally consistent and globally inconsistent. Inspired by these techniques, we place light sources so that globally inconsistent lighting conveys a greater sense of realism and a better perception of shape. Our algorithm segments the input model into a set of patches using a curvature-based watershed method. The light placement function models how appropriate each light direction is for illuminating the model, using the curvature-based segmentation as well as the diffuse and specular illumination at every vertex. Lights are placed and assigned to patches based on the light placement function. Silhouette lighting and proximity shadows are added for feature enhancement.

Further details of this work are available here.

- Light Collages: Lighting Design for Effective Visualization, C. H. Lee, X. Hao, and A. Varshney, IEEE Visualization 2004, Austin, TX, October 2004.

We propose a scheme for modeling point sample geometry with statistical analysis. Our scheme departs from current schemes that deterministically represent the attributes of each point sample. We show how statistical analysis of a densely sampled point model can alleviate the geometry bandwidth bottleneck and enable randomized rendering without sacrificing visual realism. We first carry out a hierarchical principal component analysis (PCA) of the model. This stage partitions the model into compact local geometries by exploiting local coherence. Our scheme handles vertex coordinates, normals, and color. The input model is reconstructed and rendered using a probability distribution derived from the PCA. We demonstrate the benefits of this approach in all stages of the graphics pipeline: (1) orders-of-magnitude improvement in the storage and transmission complexity of point geometry, (2) direct rendering from compressed data, and (3) view-dependent randomized rendering.
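The idea of replacing explicit points with a distribution can be sketched for one local cluster of positions: fit a mean and covariance, then draw new points from the corresponding Gaussian. As a simplification, we sample through a Cholesky factor of the covariance rather than the PCA eigenbasis. Both describe the same Gaussian. We also assume a single cluster of 3D positions; the hierarchical partitioning and the handling of normals and colors are omitted.

```python
import math
import random


def mean_cov(points):
    """Mean (list of 3) and population covariance (3x3 nested list) of 3D points."""
    n = len(points)
    mu = [sum(p[k] for p in points) / n for k in range(3)]
    cov = [[sum((p[i] - mu[i]) * (p[j] - mu[j]) for p in points) / n
            for j in range(3)] for i in range(3)]
    return mu, cov


def cholesky3(a, jitter=1e-12):
    """Lower-triangular L with L L^T ~= a; jitter keeps flat (planar) clusters valid."""
    L = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(max(a[i][i] - s, 0.0) + jitter)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


def resample(mu, cov, n, seed=0):
    """Draw n points from N(mu, cov) as x = mu + L z with z ~ N(0, I)."""
    rng = random.Random(seed)
    L = cholesky3(cov)
    out = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(3)]
        out.append(tuple(mu[i] + sum(L[i][k] * z[k] for k in range(i + 1))
                         for i in range(3)))
    return out
```

Only the 3 mean values and 6 distinct covariance entries need to be stored or transmitted per cluster, regardless of how many points are regenerated at render time.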

Further details of this work are available here.

- Statistical Point Geometry, A. Kalaiah and A. Varshney, Eurographics Symposium on Geometry Processing, June 2003, pp 113-122.

- Statistical Geometry Representation for Efficient Transmission and Rendering, A. Kalaiah and A. Varshney, ACM Transactions on Graphics, Vol. 24, No. 2, April 2005, pp 348-373.

We have proposed a simple lighting model to incorporate subsurface scattering effects within the local illumination framework. Subsurface scattering is relatively local due to its exponential falloff and has little effect on the appearance of neighboring objects. These observations have motivated us to approximate the BSSRDF model and to model subsurface scattering effects by using only local illumination.

In our approach we build the neighborhood information as a preprocess and turn the traditional local illumination model into a two-stage run-time process. In the first stage we compute the reflection and transmission of light on the surface. In the second stage we bleed the scattering effects from each vertex's neighborhood to produce the final result. We then show how to merge the two run-time stages into a single stage using a precomputed integral. The run-time complexity of our algorithm is O(N), where N is the number of vertices. Using this approach, we achieve interactive frame rates with one to two orders of magnitude speedup compared with state-of-the-art methods.
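The two-stage structure can be sketched per vertex. A preprocess stores each vertex's neighbors and falloff weights. At run time, stage 1 computes local reflection and transmission, and stage 2 adds transmitted light bled in from the neighborhood. The exponential weight exp(-σ_tr·d) and the coefficients `kd`, `kt` are hypothetical stand-ins, not the paper's BSSRDF approximation. The merged single-stage form with a precomputed integral is not shown.

```python
import math


def precompute_neighborhoods(positions, radius, sigma_tr):
    """Preprocess: for each vertex, the neighbors within `radius` and an
    exponential falloff weight exp(-sigma_tr * distance) for each one."""
    nbrs = []
    for i, p in enumerate(positions):
        row = []
        for j, q in enumerate(positions):
            d = math.dist(p, q)
            if i != j and d <= radius:
                row.append((j, math.exp(-sigma_tr * d)))
        nbrs.append(row)
    return nbrs


def shade(normals, light_dir, nbrs, kd=0.8, kt=0.2):
    """Two-stage per-vertex shading with a hypothetical scattering kernel."""
    # Stage 1: local reflection and transmission at every vertex.
    ndotl = [max(0.0, sum(a * b for a, b in zip(n, light_dir))) for n in normals]
    reflected = [kd * v for v in ndotl]
    transmitted = [kt * v for v in ndotl]
    # Stage 2: bleed transmitted light in from each vertex's neighborhood.
    return [
        reflected[i] + sum(w * transmitted[j] for j, w in nbrs[i])
        for i in range(len(normals))
    ]
```

The naive preprocess is O(N²). Because the falloff makes neighborhoods small and bounded, the run-time `shade` pass costs O(N·k) for at most k neighbors per vertex, which is linear in N. This matches the locality argument in the text.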

Further details of this work are available here.

- Interactive Subsurface Scattering for Translucent Meshes, X. Hao, T. Baby, and A. Varshney, ACM Symposium on Interactive 3D Graphics 2003, pp 75-82.

- Real-Time Rendering of Translucent Meshes, X. Hao and A. Varshney, ACM Transactions on Graphics, Vol. 23, No. 2, April 2004, pp 120-142.

We have recently proposed variable-precision geometry transformations and lighting to accelerate 3D graphics rendering. Multiresolution approaches reduce the number of primitives to be rendered; our approach complements them by reducing the precision of each graphics primitive. Our method relates the minimum number of bits of accuracy required in the input data to the desired accuracy in the display output. We achieve speedup by exploiting SIMD parallelism for arithmetic operations, which is increasingly common on modern processors. In our research we have derived the mathematical groundwork for performing variable-precision geometry transformations and lighting for 3D graphics. In particular, we explore how the distance of a given sample from the viewpoint and its location in the view frustum determine the accuracy with which it must be transformed and lit to stay within a given screen-space error bound.
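The distance-to-precision relationship can be illustrated with a deliberately simple model, which is not the paper's derivation. Assume a pinhole camera with focal length f in pixels and fixed-point coordinates with b fractional bits. Rounding then moves a coordinate by at most 2^-(b+1) units, which projects to about f·2^-(b+1)/z pixels at depth z. Requiring this to stay within ε pixels gives b ≥ log2(f / (2εz)). The frustum-position and lighting terms the paper considers are ignored.

```python
import math


def fractional_bits_needed(z, focal_px, eps_px):
    """Fewest fractional bits b so that fixed-point rounding error keeps the
    projected error within eps_px pixels, under a simple pinhole model.

    Rounding to b fractional bits moves a coordinate by at most 2**-(b+1)
    object-space units; at depth z that error projects to roughly
    focal_px * 2**-(b+1) / z pixels.
    """
    ratio = focal_px / (2.0 * eps_px * z)
    return max(0, math.ceil(math.log2(ratio)))


def quantize(value, bits):
    """Round value to a fixed-point grid with the given number of fractional bits."""
    scale = 1 << bits
    return round(value * scale) / scale


# Usage: with f = 1000 px and a half-pixel budget, a sample at depth 10
# needs 7 fractional bits, while one at depth 1000 needs none.
near_bits = fractional_bits_needed(10.0, 1000.0, 0.5)
far_bits = fractional_bits_needed(1000.0, 1000.0, 0.5)
```

Under this model, each doubling of distance saves one bit. That is why distant geometry can be processed at lower precision and packed more densely into SIMD lanes.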

The left images of the Chateau Azay le Rideau (123K triangles) and Cyberware Venus (268K triangles) show rendering by conventional floating-point transformation and lighting, whereas the right images show variable-precision rendering. The latter are a factor of three faster (Pentium III, 933 MHz, 512MB RDRAM).

Further details and a sample implementation of this work are available here.

- Variable-Precision Rendering, X. Hao and A. Varshney, Proceedings, ACM Symposium on Interactive 3D Graphics, March 19-21, 2001, Research Triangle Park, NC, pp 149-158.

We have developed an algorithm for rapid computation of the smooth molecular surface. Our algorithm is analytical, easily parallelizable, and generates a triangulated molecular surface. In protein-substrate docking, one important factor that influences the position and orientation of the protein with respect to the substrate is the geometric fit, or surface complementarity. Traditionally, the interface has been studied by moving a clipping plane along the z-axis in screen space. This does not readily convey the three-dimensional structure of the interface to the biochemist. We have defined the molecular interface surfaces between two molecular units as a family of surfaces parametrized by the probe radius α and the interface radius β. The molecular interface surfaces are derived from approximations to the power diagrams over the participating molecular units. Molecular surfaces provide biochemists with a powerful tool to study surface complementarity and to efficiently characterize the interactions during protein-substrate docking.

The top images show Crambin and its molecular surface (probe radius 1.4 Angstroms), and the bottom images show Transthyretin domains and their molecular interface surface (α = 1.0 Angstrom, β = 2.4 Angstroms).

- Defining, Computing, and Visualizing Molecular Interfaces, A. Varshney, F. P. Brooks, Jr., D. C. Richardson, W. V. Wright, and D. Manocha, Proceedings of the IEEE Visualization '95, Oct 29 - Nov 3, 1995, Atlanta, GA, pp 36-43.

- Linearly Scalable Computation of Smooth Molecular Surfaces, A. Varshney, F. P. Brooks, Jr., and W. V. Wright, IEEE Computer Graphics and Applications, Sept 1994, Vol. 14, No. 5, pp 19-25.

- Interactive Visualization of Weighted Three-dimensional Alpha Hulls, A. Varshney, F. P. Brooks, Jr., and W. V. Wright, Third Annual Video Review of Computational Geometry, in Proceedings of the Tenth Annual Symposium on Computational Geometry, Stony Brook, NY, June 6-8, 1994, pp 395-396.

- Fast Analytical Computation of Richards's Smooth Molecular Surface, A. Varshney and F. P. Brooks, Jr., Proceedings of the IEEE Visualization '93, Oct 25-29, 1993, San Jose, CA, pp 300-307.

Previous methods for computing the smooth molecular surface assumed that each atom in a molecule has a fixed position, without thermal motion or uncertainty. In the real world, the position of an atom in a molecule is fuzzy because of uncertainty in protein structure determination and the thermal energy of the atom.

To represent thermal vibrations and uncertainty of atoms, we have proposed a method to compute fuzzy molecular surfaces. We have also implemented a program for interactively visualizing three-dimensional molecular structures, including the fuzzy molecular surface. The fuzzy surface is drawn as multi-layered transparent surfaces: each layer corresponds to a different confidence level, and its transparency is associated with that confidence level.

Further details of our work on fuzzy molecular surfaces can be found here.

- Representing Thermal Vibrations and Uncertainty in Molecular Surfaces, C.H. Lee and A. Varshney, SPIE Conference on Visualization and Data Analysis 2002, San Jose, CA.

We have developed a novel point rendering primitive, the Differential Point, that captures the local differential geometry in the vicinity of a sampled point. This representation uses the principal curvature directions and values, along with the local normal, to efficiently render illuminated and shaded samples. Before this work, point-based rendering approaches could not efficiently vary the shading across a single primitive. Our approach shows how this can be achieved on a per-pixel basis using commercially available hardware (NVIDIA GeForce2 and GeForce3 cards). In our approach each differential point is approximated by the closest of 256 quantized differential point representations and is rendered as a normal-mapped rectangle. Per-pixel shading is computed in the register combiners. It uses the per-pixel normal from the normal map, together with the half vector and light vector interpolated via a cube vector map. The images show a teapot rendered under diffuse (left), specular (middle), and combined diffuse and specular (right) illumination with 25K samples at about 12 frames per second on an NVIDIA GeForce2 card.
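The quantization step can be sketched as a nearest-neighbor lookup into a 256-entry codebook. The layout below is entirely hypothetical: a uniform 16 × 16 grid over the principal curvature pair (k1, k2) in [-kmax, kmax]. The text does not say how the 256 representations are parameterized. Each entry would index one precomputed normal map.

```python
import math

LEVELS = 16  # 16 x 16 = 256 codebook entries (hypothetical layout)


def codebook(kmax=1.0, levels=LEVELS):
    """Hypothetical codebook: a uniform grid over principal curvatures (k1, k2).

    Entry index n = i * levels + j holds (k1 level i, k2 level j).
    """
    step = 2.0 * kmax / (levels - 1)
    return [(-kmax + i * step, -kmax + j * step)
            for i in range(levels) for j in range(levels)]


def nearest_entry(k1, k2, book):
    """Index of the codebook entry closest to (k1, k2) in Euclidean distance."""
    return min(range(len(book)), key=lambda n: math.dist(book[n], (k1, k2)))


book = codebook()
idx = nearest_entry(0.5, -0.2, book)  # index of the normal map to apply
```

A real system would quantize with direct index arithmetic rather than a linear scan. The scan keeps the "closest of 256" rule explicit.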

Further details can be found here.

- Modeling and Rendering of Points with Local Geometry, A. Kalaiah and A. Varshney, IEEE Transactions on Visualization and Computer Graphics, Vol. 9, No. 1, January 2003, pp 30-42.

- Differential Point Rendering, A. Kalaiah and A. Varshney, Rendering Techniques '01, editors S. J. Gortler and K. Myszkowski, Springer-Verlag, August 2001.

The recent growth of interest in virtual environments has been accompanied by a corresponding increase in the types of devices for sensory feedback, especially visual feedback. Our target application is collaborative visualization-assisted computational steering for problems in computational biology and computer-aided mechanical design. Head-coupled displays are ill-suited to applications whose goal is interaction with other people, and to tasks that take several hours (because of eye and neck fatigue). Spatially immersive displays, such as wall-sized tiled displays, allow long work periods, offer a wide field of view and high resolution, and afford a strong sense of self-presence. Wall-sized tiled displays are more supportive of collaboration and learning than regular monitors. Users prefer to stay longer at such displays; they move around and discuss the datasets more, and repeatedly inspect, walk around, and view the displayed datasets from different viewpoints. We are currently exploring the design and construction of high-resolution, wall-sized displays that can be quickly assembled, aligned using software and algorithms, and allow direct user interaction.

- A Real-time Seamless Tiled Display System for 3D Graphics, Z. Li and A. Varshney, Proceedings of the Seventh Annual Symposium on Immersive Projection Technology (IPT 2002), March 24-25, 2002, Orlando, FL.

Haptic display with force feedback is often necessary in virtual environments. To enable haptic rendering of large datasets, we have introduced a novel approach that reduces the complexity of the rendered dataset. We construct a continuous, multiresolution hierarchy of the model during preprocessing. At run time we use a high-detail representation for regions around the haptic probe pointer and coarser representations farther away. Using our algorithm we can haptically render datasets one to two orders of magnitude larger than otherwise possible. Our approach is orthogonal to previous work on accelerating haptic rendering and can therefore be used in combination with it.

We have also developed a multisensory virtual environment with visual, haptic, and aural feedback for simulating the five-axis CNC milling process. Haptic rendering provides the user with kinesthetic and tactile information. Kinesthetic information is conveyed through the cutting force of the milling machine, and tactile information through haptic texturing. Aural rendering simulates the machine sound and provides aural feedback to the user. Borrowing concepts from image-based rendering, we accelerate haptic and aural rendering by pre-sampling the relevant environment parameters in a perception-dependent way.

- Haptic and Aural Rendering of a Virtual Milling Process, C. Chang, A. Varshney, and Q. J. Ge, Proceedings, ASME Sixth Design for Manufacturing Conference, Sept 9-12, 2001, Pittsburgh, PA.

- Continuously-Adaptive Haptic Rendering, J. El-Sana and A. Varshney, Virtual Environments 2000, editors J. Mulder and R. van Liere, Springer-Verlag, 2000, pp 135-144.

We have pioneered view-dependent geometry and topology simplifications for level-of-detail-based rendering of large models. Our approaches preprocess the input dataset into a binary tree of general vertex-pair collapses, the view-dependence tree. A subset of the Delaunay edges is used to limit the number of vertex pairs considered for topology simplification. Dependencies that prevent mesh foldovers in manifold regions of the input object are stored implicitly in the view-dependence tree. We have observed that making the mesh dependencies implicit not only halves the space requirements but also highly localizes memory accesses at run time. At run time, the view-dependence tree is used to generate the triangles for display. We have also developed a cubic-spline-based distance metric that unifies geometry and topology simplification by considering vertex positions and normals in an integrated manner.
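Run-time use of a view-dependence tree can be sketched as maintaining an active front of nodes. Starting from the root, a node is expanded into its two children while its error, as seen from the eye, is too large. The node fields and the refinement test (object-space error divided by distance, compared against a threshold `tau`) are hypothetical simplifications. The implicit foldover dependencies and triangle generation are not shown.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical view-dependence tree node: a vertex produced by collapsing
    its two children; `error` is the object-space error of using it."""
    name: str
    pos: tuple
    error: float
    children: list = field(default_factory=list)


def active_front(root, eye, tau):
    """Names of the nodes on the active front, sorted.

    A node is expanded into its children while error / distance-to-eye > tau;
    otherwise (or at a leaf) it stays on the front.
    """
    front, stack = [], [root]
    while stack:
        node = stack.pop()
        d = max(math.dist(node.pos, eye), 1e-9)  # avoid division by zero
        if node.children and node.error / d > tau:
            stack.extend(node.children)
        else:
            front.append(node.name)
    return sorted(front)
```

For example, with a viewer close to one half of the model, that half is refined down to its leaves while the distant half stays collapsed into a single node.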

- Generalized View-Dependent Simplification, J. El-Sana and A. Varshney, Computer Graphics Forum (Eurographics '99), Vol. 18, No. 3, 1999, pp 83-94.

- View-Dependent Topology Simplification, J. El-Sana and A. Varshney, Virtual Environments '99, editors M. Gervautz, A. Hildebrand, and D. Schmalstieg, Springer-Verlag, 1999, pp 11-22.

- Parallel Construction and Navigation of View-Dependent Virtual Environments, J. El-Sana and A. Varshney, SPIE '99 Conference on Visual Data Exploration and Analysis, Jan 23-29, 1999, San Jose, CA.

- Adaptive Real-Time Level-of-detail-based Rendering for Polygonal Models, J. Xia, J. El-Sana, and A. Varshney, IEEE Transactions on Visualization and Computer Graphics, Vol. 3, No. 2, June 1997, pp 171-183.

- Dynamic View-Dependent Simplification for Polygonal Models, J. Xia and A. Varshney, Proceedings of the IEEE Visualization '96, Oct 27 - Nov 1, 1996, San Francisco, CA, pp 327-334.

Triangle strips are a widely used, hardware-supported data structure for compactly representing and efficiently rendering polygonal meshes. We have explored the efficient generation of triangle strips and their variants, and have developed efficient algorithms for partitioning polygonal meshes into triangle strips. Triangle strips have traditionally used a buffer of two vertices; we have also studied the impact of larger buffer sizes and various queuing disciplines on their effectiveness. View-dependent simplification has emerged as a powerful tool for graphics acceleration in the visualization of complex environments. However, in a view-dependent framework the triangle mesh connectivity changes every frame, making it difficult to use triangle strips. To address this we have developed a novel data structure, the Skip Strip, that efficiently maintains triangle strips during such view-dependent changes. A Skip Strip stores the vertex hierarchy nodes in a skip-list-like manner with path compression. We anticipate that Skip Strips will provide a road map for combining rendering acceleration techniques for static datasets, typical of retained-mode graphics applications, with those for dynamic datasets found in immediate-mode applications.
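The two-vertex buffer can be illustrated by expanding a strip back into triangles. Each new vertex forms a triangle with the two vertices held in the buffer, and every other triangle swaps its first two vertices so that all triangles keep the same orientation. This is the conventional decoding. The handling of degenerate triangles, which are often used to stitch strips together, is a common practice rather than something stated in the text.

```python
def strip_to_triangles(strip):
    """Expand a triangle strip into triangles with consistent winding.

    Vertex i + 2 forms a triangle with vertices i and i + 1 (the two-vertex
    buffer); odd-numbered triangles swap their first two vertices so every
    triangle keeps the same orientation. Degenerate triangles (a repeated
    vertex) are skipped.
    """
    tris = []
    for i in range(len(strip) - 2):
        a, b, c = strip[i], strip[i + 1], strip[i + 2]
        if i % 2 == 1:
            a, b = b, a
        if a != b and b != c and a != c:
            tris.append((a, b, c))
    return tris


# Usage: 5 strip vertices encode 3 triangles instead of 9 separate indices.
tris = strip_to_triangles([0, 1, 2, 3, 4])
```

A strip of n vertices encodes n - 2 triangles, so each triangle after the first costs one vertex instead of three. This is the compactness the paragraph refers to.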

- Efficiently Computing and Updating Triangle Strips for Real-Time Rendering, J. El-Sana, F. Evans, A. Kalaiah, A. Varshney, S. Skiena, and E. Azanli, Computer-Aided Design, Vol. 32, No. 13, Nov 2000, pp 753-772.

- Skip Strips: Maintaining Triangle Strips for View Dependent Rendering, J. El-Sana and A. Varshney, IEEE Visualization '99, Oct 24-29, 1999, San Francisco, CA, pp 131-138.

- Optimizing Triangle Strips for Fast Rendering, F. Evans, S. Skiena, and A. Varshney, Proceedings of the IEEE Visualization '96, Oct 27 - Nov 1, 1996, San Francisco, CA, pp 319-326.

- Stripe: A Software Tool For Efficient Triangle Strips, F. Evans, S. Skiena, and A. Varshney, Visual Proceedings, ACM SIGGRAPH, Aug 4-9, 1996, New Orleans, LA, p 153.

As the complexity of the 3D object-space has increased beyond the bounded image-space resolution, image-based rendering has emerged as a viable alternative to conventional rendering schemes. Given a collection of depth-buffered images representing an environment from fixed viewpoints and view directions, our approach first constructs an image-space simplification of the scene as a pre-process and then reconstructs a view of this scene for arbitrary viewpoints and directions in real-time. We use the commonly available texture-mapping hardware for speed and partially rectify the disocclusion errors in image-based rendering. We are currently working on hybrid rendering techniques that integrate polygon- and image-based rendering.

- Integrating Polygon- and Image-based Rendering, C. Chang, A. Varshney, and Q. J. Ge, International Journal of Image and Graphics (under review).

- Hierarchical Image-based and Polygon-based Rendering for Large-Scale Visualizations, C. Chang, A. Varshney, and Q. J. Ge, Scientific Visualization 2001, editors H. Hagen, G. Farin, and B. Hamann, Springer-Verlag, 2001 (under review).

- Walkthroughs of Complex Environments using Image-Based Simplification, L. Darsa, B. Costa, and A. Varshney, Computers and Graphics, Vol. 22, No. 1, January 1998, pp 25-34.

- Navigating Static Environments Using Image-Space Simplification and Morphing, L. Darsa, B. Costa, and A. Varshney, Proceedings of the Symposium on 3D Interactive Graphics, April 27-30, 1997, Providence, RI, pp 25-34.

We have developed algorithms to simplify the genus of an object for virtual environments where interactivity is more important than topological fidelity. Genus simplification allows more aggressive simplification and consequently faster rendering than possible with just geometry simplification. We have proposed genus-simplification algorithms for polygonal meshes as well as volumetric datasets.

Our approach for polygonal datasets identifies holes, tunnels, and cavities by extending the concept of alpha-shapes to polygonal meshes under the L∞ distance metric. We then generate valid triangulations that reduce the genus, using the intuitive notion of sweeping an L∞ cube over the identified regions. Within the same unified framework, our approach can also remove small protuberances and repair cracks in polygonal models. For volumetric datasets, we take a signal-processing approach to eliminating object detail: we low-pass filter the object, then threshold and reconstruct it.
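The volumetric pipeline of low-pass filtering followed by thresholding can be sketched on a binary voxel grid. This sketch assumes a simple 3x3x3 box (mean) filter that treats out-of-range voxels as empty, with a threshold of 0.5. The filter, the threshold, and the helper names are illustrative choices and not necessarily those of the published method. The final surface-reconstruction step (for example, marching cubes) is omitted.

```python
from typing import List

Volume = List[List[List[float]]]


def box_filter(vol: Volume, r: int = 1) -> Volume:
    """Average each voxel over a (2r+1)^3 neighborhood; outside voxels count as 0."""
    nx, ny, nz = len(vol), len(vol[0]), len(vol[0][0])
    n = (2 * r + 1) ** 3
    out = [[[0.0] * nz for _ in range(ny)] for _ in range(nx)]
    for x in range(nx):
        for y in range(ny):
            for z in range(nz):
                s = 0.0
                for i in range(x - r, x + r + 1):
                    for j in range(y - r, y + r + 1):
                        for k in range(z - r, z + r + 1):
                            if 0 <= i < nx and 0 <= j < ny and 0 <= k < nz:
                                s += vol[i][j][k]
                out[x][y][z] = s / n
    return out


def eliminate_detail(vol: Volume, r: int = 1, threshold: float = 0.5) -> Volume:
    """Low-pass filter, then re-binarize: small holes fill, thin features vanish."""
    smoothed = box_filter(vol, r)
    return [[[1.0 if v >= threshold else 0.0 for v in row] for row in plane]
            for plane in smoothed]


def cube_with_tunnel(n: int = 7) -> Volume:
    """Hypothetical test object: a solid cube (indices 1..n-2) pierced by a
    one-voxel tunnel along z through its center, i.e. a genus-1 object."""
    c = n // 2
    return [[[1.0 if (0 < x < n - 1 and 0 < y < n - 1 and 0 < z < n - 1
                      and not (x == c and y == c)) else 0.0
              for z in range(n)] for y in range(n)] for x in range(n)]
```

On this genus-1 test object, the filter closes the one-voxel tunnel. It also rounds off the cube's edges and corners, so a larger radius `r` removes larger features at the cost of more shape distortion.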

- Topology Simplification for Polygonal Virtual Environments, J. El-Sana and A. Varshney, IEEE Transactions on Visualization and Computer Graphics, Vol. 4, No. 2, June 1998, pp 133-144

- Controlled Simplification of Genus for Polygonal Models, J. El-Sana and A. Varshney, Proceedings of IEEE Visualization '97, Oct 19-24, 1997, Phoenix, AZ, pp 403-412

- Controlled Simplification of Object Topology, T. He, L. Hong, A. Varshney, and S. Wang, IEEE Transactions on Visualization and Computer Graphics, Vol. 2, No. 2, June 1996, pp 171-184

- Voxel-Based Object Simplification, T. He, L. Hong, A. Kaufman, A. Varshney, and S. Wang, Proceedings of IEEE Visualization '95, Oct 29-Nov 3, 1995, Atlanta, GA, pp 296-303

Preserving the topology of the input dataset is an important criterion for some graphics applications, such as tolerancing, drug design, and geological and medical volume visualization. For such applications, we have developed Simplification Envelopes. This approach generates a multiresolution hierarchy that preserves the input topology and maintains global error bounds. It guarantees that all points of an approximation are within a user-specifiable distance ε of the original model and that all points of the original model are within a distance ε of the approximation. Simplification envelopes provide a general framework within which a large collection of existing simplification algorithms can run. We demonstrate this technique with two algorithms, one local and the other global. The local algorithm provides a fast method for generating approximations to large input meshes (hundreds of thousands of triangles or more). The global algorithm provides the opportunity to avoid local minima and thus possibly achieve better simplifications.
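The guarantee above is a symmetric bound: every point of the approximation lies within ε of the original, and every point of the original lies within ε of the approximation. A brute-force check on sampled points makes the role of each direction concrete. This is only a sketch. It assumes both models are represented by hypothetical point samples and tests only those samples. Simplification envelopes, by contrast, enforce the bound on the surfaces themselves by construction.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def directed_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Largest distance from a point of `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def within_two_sided_bound(original: Sequence[Point],
                           approx: Sequence[Point], eps: float) -> bool:
    """True if the sampled sets are within eps of each other in both directions."""
    return (directed_distance(approx, original) <= eps
            and directed_distance(original, approx) <= eps)
```

Take `original = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]` and `approx = [(0, 0, 0), (2, 0, 0)]`. Every approximation sample lies exactly on an original sample, yet the original's middle sample is 1.0 away from the approximation. The check therefore passes for `eps = 1.0` and fails for `eps = 0.5`, a difference that a one-sided test would miss.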

- Simplification Envelopes, J. Cohen, A. Varshney, D. Manocha, G. Turk, H. Weber, P. Agarwal, F. P. Brooks, Jr., and W. V. Wright, SIGGRAPH 96 Proceedings, Annual Conference Series, ACM SIGGRAPH, Aug 4-9, 1996, New Orleans, LA, pp 119-128

Fine Gesture Recognition

We have developed VType, an interface that lets a user wearing virtual reality gloves enter text while immersed in a virtual world. Engineering VType required new techniques for two distinct problems: (1) extracting clean signals from the gloves' noisy finger-movement data, and (2) algorithmically resolving ambiguity on an inherently overloaded keyboard. Our motivation is to advance the recognition of fine gestures, such as the small finger movements made while typing, for virtual-environment applications. Preliminary user studies suggest that accurate text entry is possible with low-cost, low-resolution data gloves. Our work on overloaded keyboard design also suggests new directions for text-entry systems for people with disabilities.
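Resolving ambiguity on an overloaded keyboard can be illustrated with dictionary-based disambiguation, in the spirit of predictive phone keypads. Because each finger covers several letters, a sequence of finger presses can match several words, which are then ranked by frequency. This sketch assumes a standard touch-typing assignment of letters to eight fingers (thumbs excluded) and a small, hypothetical word-frequency table. It is not necessarily VType's layout or algorithm.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# Assumed touch-typing layout: L4 = left pinky ... R4 = right pinky.
FINGER_KEYS = {
    "L4": "qaz", "L3": "wsx", "L2": "edc", "L1": "rfvtgb",
    "R1": "yhnujm", "R2": "ik", "R3": "ol", "R4": "p",
}
LETTER_TO_FINGER = {c: f for f, keys in FINGER_KEYS.items() for c in keys}


def finger_sequence(word: str) -> Tuple[str, ...]:
    """Finger presses needed to type a lowercase a-z word."""
    return tuple(LETTER_TO_FINGER[c] for c in word.lower())


def build_index(freqs: Dict[str, int]) -> Dict[Tuple[str, ...], List[str]]:
    """Group dictionary words by finger sequence, most frequent first."""
    index: Dict[Tuple[str, ...], List[str]] = defaultdict(list)
    for word in freqs:
        index[finger_sequence(word)].append(word)
    for words in index.values():
        words.sort(key=lambda w: -freqs[w])
    return dict(index)


def disambiguate(presses: Sequence[str],
                 index: Dict[Tuple[str, ...], List[str]]) -> List[str]:
    """Ranked candidate words for an observed sequence of finger presses."""
    return index.get(tuple(presses), [])
```

With hypothetical frequencies `{"run": 120, "fun": 80, "gun": 30, "cat": 50}`, the three presses of "fun" (left index, right index, right index) yield the candidates `["run", "fun", "gun"]`. A real system would combine such rankings with context and with the cleaned glove signals.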

© Copyright 2013, Graphics and Visual Informatics Laboratory, University of Maryland, All rights reserved.
