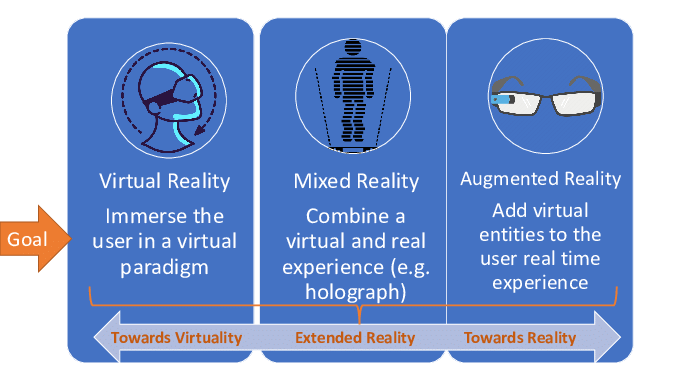

Augmented, virtual, and mixed reality (AR, VR, and MR), collectively referred to as XR, are becoming ubiquitous for human-computer interaction, with a wide and growing range of applications. This course examines advances in real-time multi-modal XR systems in which the user is 'immersed' in and interacts with a simulated 3D environment. Topics include display, modeling, 3D graphics, haptics, audio, locomotion, animation, applications, immersion, and presence, and how they interact to create convincing virtual environments. We'll explore these fields and their current and future research directions.

By the end of the class, students will understand a wide range of research problems in XR, as well as the future of XR as a link between physical and virtual worlds, as described in the "Metaverse" visions.

The class assignments will involve working with the above topics on a real XR device, with the goal of building the skills to develop powerful multi-modal XR applications. Assignments may be completed in Unity or Unreal Engine 4 (UE4), and we provide full technical support for either one.

Prior knowledge or experience with game development or XR is not necessary to succeed in the course! Unity and UE4 both use object-oriented programming, and the technical learning in this class focuses on APIs and application design rather than programming skill, so intermediate experience with Java, C#, C++, etc. is sufficient.

Most students will work with the headset provided (shown left below), which allows for development on one's own mobile phone. It includes an iOS/Android-compatible remote controller, and the back is open, exposing the smartphone camera to allow for AR and vision-app development. Students may also use their own devices, such as an Oculus Quest (or any other HMD that provides hand-tracking and controller input); doing so should not affect the difficulty of the assignments.