39 Multiple Issue Processors II

Dr A. P. Shanthi

The objectives of this module are to discuss in detail about the VLIW architectures and discuss the various implementation and design issues related to the VLIW style of architectures.

The previous module discussed the need for multiple issue processors. We also discussed in detail about statically scheduled and dynamically scheduled superscalars. Now, we will discuss the other type of multiple issue processors, viz., VLIW processors.

VLIW (Very Long Instruction Word) processors: In this style of architectures, the compiler formats a fixed number of operations as one big instruction (called a bundle) and schedules them. With few numbers of instructions, say 3, it is usually called LIW (Long Instruction Word). There is a change in the instruction set architecture, i.e., 1 program counter points to 1 bundle (not 1 operation). The operations in a bundle are issued in parallel. The bundles follow a fixed format and so the decode operations are done in parallel. It is assumed that there are enough FUs for the types of operations that can issue in parallel. We can also look at pipelined FUs. Example machines that follow this style include the Multiflow & Cydra 5 (8 to 16 operations) in the 1980’s, IA-64 (3 operations), Crusoe (4 operations) and TM32 (5 operations).

The main goals of the hardware design in a processor are to reduce the hardware complexity, to shorten the cycle time for better performance and to reduce power requirements. The VLIW designs reduce hardware complexity by the following features:

- less multiple-issue hardware

- no dependence checking for instructions within a bundle

- can be fewer paths between instruction issue slots & FUs

- simpler instruction dispatch

- no out-of-order execution, no instruction grouping

- ideally no structural hazard checking logic

All this leads to reduction in hardware complexity which in turn affects the cycle time and power consumption.

Though the hardware is less complex, the compiler becomes more complicated. We need the support of the compiler to increase ILP. Since there are multiple operations packed into one bundle with the idea of keeping the functional units busy, there must be enough parallelism in a code sequence to fill the available operation slots. This parallelism is usually uncovered by using techniques like loop unrolling and software scheduling that we discussed in the previous modules, and then scheduling these instructions appropriately. If the unrolling generates straightline code, then local scheduling techniques, which operate on a single basic block can be used. On the other hand, if finding and exploiting the parallelism requires scheduling code across branches, a substantially more complex global scheduling algorithm must be used. Global scheduling algorithms are not only more complex in structure, but they must deal with significantly more complicated tradeoffs in optimization, since moving code across branches is expensive.

Trace scheduling, is one of these global scheduling techniques developed specifically for VLIWs. The details of trace scheduling are beyond the scope of this lecture. But we will at the basic concepts involved here. Trace scheduling is a way to organize the global code motion process, so as to simplify the code scheduling by incurring the costs of possible code motion on the less frequent paths. There are two steps to trace scheduling. The first step, called trace selection, tries to find a likely sequence of basic blocks whose operations will be put together into a smaller number of instructions called a trace. Loop unrolling and static branch prediction will be used so that the resultant trace is a straight-line sequence resulting from concatenating many basic blocks. Once a trace is selected, the second process, called trace compaction, tries to squeeze the trace into a small number of wide instructions. Trace compaction is code scheduling; hence, it attempts to move operations as early as it can in a sequence (trace), packing the operations into as few wide instructions (or issue packets) as possible. Predication, which is a hardware support that allows some conditional branches to be eliminated, extending the usefulness of local scheduling and enhancing the performance of global scheduling are also extensively used.

Thus, it is seen that the compiler is responsible for uncovering parallelism and also detecting hazards and hiding the latencies associated with them. Structural hazards are handled by making sure that no two operations within a bundle are scheduled to the same functional unit and no two operations are scheduled to the same memory bank. The latencies associated with memory are handled by data prefetching and by hoisting loads above stores, with support from the hardware. Data hazards are handled by ensuring that there are no data hazards among instructions in a bundle. Control hazards are dealt using predicated execution and static branch prediction.

Let us now look at a loop that we are already familiar with and see how this can be scheduled on a VLIW processor. The code below shows a thrice unrolled and scheduled code that runs in 11 clock cycles, taking 3.67 clock cycles per iteration.

1 Loop:L.D F0,0(R1)

2 L.D F6,-8(R1)

3 L.D F10,-16(R1)

4 ADD.D F4,F0,F2

5 ADD.D F8,F6,F2

6 ADD.D F12,F10,F2

7 S.D 0(R1),F4

8 S.D -8(R1),F8

9 DSUBUI R1,R1,#24

10 BNEZ R1,LOOP

11 S.D 8(R1),F12 ; 8-24 = -16

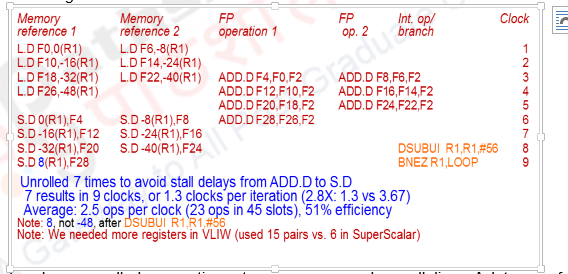

Assuming, a VLIW processor that packs two memory operations, two floating point operations and one integer or branch operation in one bundle, a probable schedule is shown below in Figure 23.1.

The loop has been unrolled seven times to uncover enough parallelism. A latency of one has been assumed between the Load and the Add using it, and a latency of two has been assumed between the Add and the corresponding Store. The first clock cycle issues only the two Load operations. The second clock cycle also issues only the two subsequent Loads. The third clock cycle issues two more Loads and also two Adda corresponding to the first two Loads. This is continued. Note that the first set of Stores are issued two clock cycles after the corresponding Adds. Note that the last Store uses an address 8(R1) instead of -48(R1) as the DSUBUI instruction has been moved ahead and modifies R1 by -56. This code takes 9 clock cycles for seven iterations, resulting in a rate of 1.3 clocks per iteration.

Drawbacks of the VLIW Processors: For the original VLIW model, there are both technical and logistical problems. The technical problems are the increase in code size and the limitations of the lock-step operation. The increase in code size is due to the ambitious unrolling that is done and also because of the fact that we are not able to effectively use the slots. The schedule discussed earlier itself shows that only about 60% of the functional units are used, so almost half of each instruction was empty. In most VLIWs, an instruction may need to be left completely empty if no operations can be scheduled. To handle this code size increase, clever encodings are sometimes used. For example, there may be only one large immediate field for use by any functional unit. Another technique is to compress the instructions in main memory and expand them when they are read into the cache or decoded.

Early VLIWs operated in a lock-step fashion as there was no hazard detection hardware at all. Because of this, a stall in any functional unit pipeline would cause the entire processor to stall, since all the functional units must be kept synchronized. Although a compiler may be able to schedule the deterministic functional units to prevent stalls, predicting which data accesses will encounter a cache stall and scheduling them is very difficult. Hence, caches needed to be blocking and would cause all the functional units to stall. This is too much of a restriction. In more recent processors, the functional units operate more independently, and the compiler is used to avoid hazards at issue time, while hardware checks allow for unsynchronized execution once instructions are issued.

Binary code compatibility has also been a major logistical problem for VLIWs. Different numbers of functional units and functional unit latencies require different versions of the code. This requirement makes migrating between successive implementations, or between implementations with different issue widths, more difficult than it is for a superscalar design. One possible solution to this binary code compatibility is object-code translation or emulation. This technology is developing quickly and could play a significant role in future migration schemes. Another approach is to temper the strictness of the approach so that binary compatibility is still feasible. This later approach is used in the IA-64 architecture.

Advantages of Superscalar Architectures over VLIW: When compared to the VLIW architectures, the superscalars have the following advantages:

- Old codes still run without any problem

- o Like those tools you have that came as binaries

- The hardware detects whether the instruction pair is a legal dual issue pair

- o If not they are run sequentially

- Little impact on code density

- o You do not have to fill all the slots where you cannot issue instructions with just NOP’s

- Compiler issues are very similar

- o Still need to do instruction scheduling anyway

- o Dynamic issue hardware is there so the compiler does not have to be too conservative

Explicitly Parallel Instruction Computing (EPIC) is a term coined in 1997 by the HP – Intel alliance based on the VLIW style of architectures. As VLIW processors issue a fixed number of instructions formatted as a bundle, with the parallelism among instructions explicitly indicated by the instruction, they are also known as Explicitly Parallel Instruction Computers. VLIW and EPIC processors are inherently statically scheduled by the compiler. The Itanium processor is an example of this style of architectures. The complete discussion of this architecture is beyond the scope of this discussion. However, some of the characteristics of the architecture are listed below:

- Bundle of instructions

- 128 bit bundles

- 3 41-bit instructions/bundle

- 2 bundles can be issued at once

- Fully predicated ISA

- Registers

- 128 integer & FP registers

- 128 additional registers for loop unrolling & similar optimizations

- 8 indirect branch registers

- miscellaneous other registers

- ISA & Microarchitecture seem complicated (some features of out-of-order processors)

- not all instructions in a bundle need stall if one stalls (a scoreboard keeps track of produced source operands)

- multi-level branch prediction

- register remapping to support rotating registers on the “register stack” which aid in software pipelining & dynamically sized register windows

- special hardware for “register window” overflow detection; special instructions for saving & restoring the register stack

- Speculative state cannot be stored to memory

- special instructions check integer register poison bits to detect whether value is speculative (speculative loads or exceptions)

- OS can override the ban (e.g., for a context switch)

- different mechanism for floating point (status registers)

- Array address post-increment & loop control

Comparison between Superscalars and VLIW Processors: Superscalar processors have more complex hardware for instruction scheduling as they perform out-of-order execution and there are more paths between instruction issue structure and functional units. This will result in slower cycle times, more chip area and more power consumption. VLIW architectures also will have more functional units if there is support for full predication leading to the same possible consequences. VLIW architectures obviously have larger code size. The estimates of IA-64 code is up to 2X – 4X over x86. This will lead to increase in instruction bandwidth requirements and decrease in instruction cache effectiveness. They also require a more complex compiler. Superscalars can more efficiently execute pipeline-independent code. So, they do not have to recompile if we change the implementation.

To summarize, we have looked at the need for multiple issue processors. We have discussed the VLIW style of multiple issue processors. VLIW processors form instruction bundles consisting of a number of instructions and the bundles are issued statically. Hazard detection and issue are done by the compiler. The compiler has to be complicated and uses different techniques for exploiting ILP. Early VLIWs were quite rigid in their instruction formats and effectively required recompilation of programs for different versions of the hardware. To reduce this inflexibility and enhance performance of the approach, several innovations have been incorporated into more recent architectures of this type. This second generation of VLIW architectures is the approach being pursued for desktop and server markets. VLIW architectures have certain advantages and disadvantages compared to superscalar processors.

| Web Links / Supporting Materials |

| Computer Organization and Design – The Hardware / Software Interface, David A. Patterson and John L. Hennessy, 4th Edition, Morgan Kaufmann, Elsevier, 2009. |

| Computer Architecture – A Quantitative Approach , John L. Hennessy and David A. Patterson, 5th Edition, Morgan Kaufmann, Elsevier, 2011. |