Researchers from UMD and Rice University Develop Imaging Technology That Can ‘See’ Hidden Objects

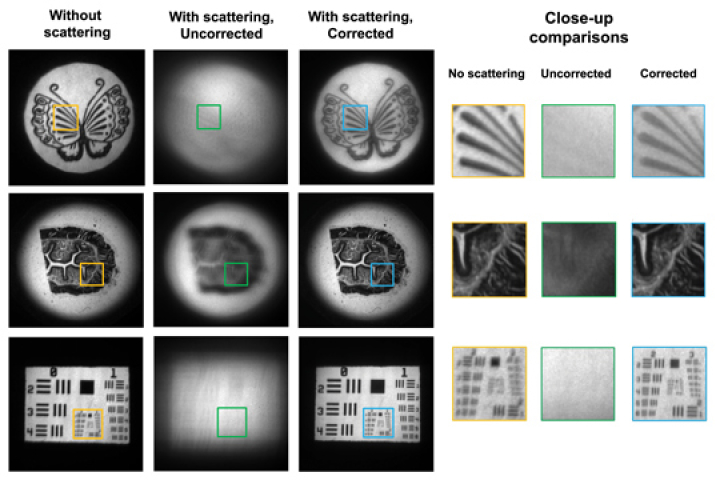

Researchers from the University of Maryland and Rice University created full-motion video technology that could be used to make cameras that peer through fog, smoke, driving rain, murky water, skin, bone and other media that scatter light and obscure objects from view.

UMD Computer Science Assistant Professor Christopher Metzler and Ph.D. student Brandon Feng collaborated with Rice University Professor Ashok Veeraraghavan and Ph.D. student Haiyun Guo to develop the imaging technology they named NeuWS (neural wavefront shaping). The technology, which was described in a paper published June 28, 2023 in the journal Science Advances, opens new possibilities for enhanced visibility in challenging environments.

“Our study bridges the gap between current events in computer vision or generative AI and the wider optical imaging field,” Feng said. “We are now able to see through scattered layers and recover whatever scene or object is behind them. That has been a problem for quite some time and now we are able to solve it.”

NeuWS builds on the fact that light waves have two fundamental properties: magnitude and phase. Knowing the phase of incoming light is crucial for overcoming scattering, but measuring it directly is impractical because optical light oscillates at such high frequencies. Instead, the researchers measure incoming light as “wavefronts,” single measurements containing both phase and intensity information, and use computational processing to rapidly decipher the phase information from several hundred wavefront measurements per second.
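To make the magnitude-and-phase idea concrete, here is a minimal Python sketch. It is a generic textbook illustration, not the NeuWS algorithm: the helper names `field` and `intensity` and the single known reference wave are assumptions introduced here. It shows that a detector recording only intensity cannot tell two phases apart on its own, but that combining the light with a known wave makes the phase show up in the recorded intensity, which is why phase can be recovered by computation.

```python
import cmath
import math


def field(magnitude: float, phase: float) -> complex:
    """Model a light wave at one point as a complex number (hypothetical helper)."""
    return cmath.rect(magnitude, phase)


def intensity(e: complex) -> float:
    """What a camera pixel records: squared magnitude, with no phase (hypothetical helper)."""
    return abs(e) ** 2


# Two waves with the same magnitude but different phase.
a = field(1.0, 0.0)
b = field(1.0, math.pi / 2)

# Measured on their own, the two waves look identical to the detector.
same_alone = math.isclose(intensity(a), intensity(b))

# Added to a known reference wave (an assumption of this sketch), the
# phase difference changes the recorded intensity.
reference = field(1.0, 0.0)
with_ref_a = intensity(a + reference)  # in phase with the reference
with_ref_b = intensity(b + reference)  # a quarter cycle out of phase

print(same_alone, round(with_ref_a, 3), round(with_ref_b, 3))
```

In this toy setup the two waves are indistinguishable alone but give different intensities once combined with the reference, so a set of such measurements carries phase information even though no single reading measures phase directly.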

Using state-of-the-art spatial light modulators, which make it possible to take multiple measurements per second, the researchers captured full-motion video of moving objects obscured by media that scatter light.

“What we've done is use recent advances in machine learning and statistical signal processing to solve a long-standing and serious problem in optics,” Metzler said. “Science Advances is one of the top research journals for computer science and the wider scientific world, and I am very excited about this milestone.”

In one set of experiments, for example, a microscope slide containing a printed image of an owl or a turtle was spun on a spindle and filmed by an overhead camera. Light-scattering media were placed between the camera and target slide, and the researchers measured NeuWS’s ability to correct for light scattering. Examples of scattering media included onion skin, slides coated with nail polish, slices of chicken breast tissue and light-diffusing films.

For each of these, the experiments showed NeuWS could correct for light scattering and produce clear video of the spinning figures. This technology could have profound implications across various sectors.

In environmental monitoring, it could aid in detecting and tracking objects obscured by fog, smoke or adverse weather conditions, improving situational awareness and safety. In medical imaging, it has the potential to enhance diagnostics by enabling clearer visualization of tissues and structures hidden by scattering media such as skin and bone. Additionally, in underwater exploration and security applications, NeuWS could revolutionize imaging systems by enabling better visibility in murky water or through obstructing barriers.

“In all of these circumstances, and others, the real technical problem is scattering,” said Veeraraghavan, who is also a UMD alum. He received his master’s degree and Ph.D. in electrical engineering from UMD in 2004 and 2008, respectively. “There’s a lot of work to be done before we can actually build prototypes in each of those application domains, but the approach we have demonstrated could traverse them.”

—Story by Samuel Malede Zewdu, CS Communications

##

In addition to Metzler and Feng, UMD computer science Ph.D. student Mingyang Xie co-authored the paper.

The research was supported by the Air Force Office of Scientific Research (Award No. FA9550-22-1-0208), the National Science Foundation (Award Nos. 1652633, 1730574 and 1648451) and the National Institutes of Health (Award No. DE032051), and partial funding for open access was provided by the University of Maryland Libraries’ Open Access Publishing Fund. This story does not necessarily reflect the views of these organizations.

The paper, “NeuWS: Neural Wavefront Shaping for Guidestar-Free Imaging Through Static and Dynamic Scattering Media,” by Brandon Y. Feng, Haiyun Guo, Mingyang Xie, Vivek Boominathan, Manoj K. Sharma, Ashok Veeraraghavan and Christopher A. Metzler, was published on June 28, 2023 in Science Advances.
