UMD and Harvard Researchers Develop Robust Defense Against Malicious AI Prompts

Researchers from the University of Maryland and Harvard University have developed an innovative defense against adversarial prompts that aim to trick large language models (LLMs) into producing harmful content.

LLMs, such as ChatGPT and Google Bard, are advanced artificial intelligence systems trained on vast amounts of text data. They are designed to understand, generate and interact using human-like language, making them valuable tools for tasks such as translation, content generation and answering queries. However, their vast knowledge and adaptability also pose challenges in ensuring they operate safely and as intended.

Language models released for public use have built-in safety measures known as “model alignment.” The primary objective of these measures is to decline any user request that might lead to the production of harmful content. Yet recent research has shown these models to be vulnerable to adversarial prompts: maliciously crafted sequences designed to bypass the safety measures.

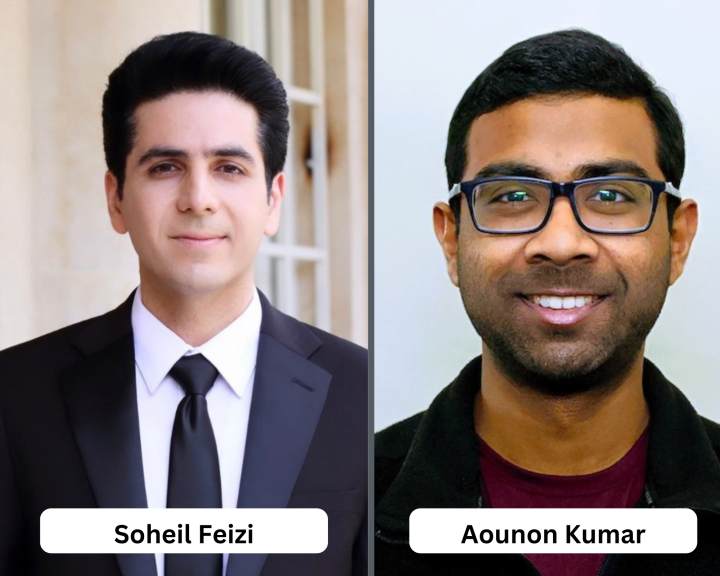

That is where “erase-and-check” makes all the difference. The framework comes from a joint team comprising Associate Professor Soheil Feizi and his former Ph.D. student Aounon Kumar from UMD’s Department of Computer Science and Chirag Agarwal, Hima Lakkaraju and Suraj Srinivas from Harvard University. The technique, described in their paper “Certifying LLM Safety Against Adversarial Prompting,” increases the robustness of LLMs by erasing individual tokens (the chunks of text that a model reads) from a prompt and inspecting the resulting sequences with a dedicated safety filter.

As Kumar notes, the group’s research could help mitigate harmful outputs from LLMs, including responses that might assist in creating dangerous tools capable of causing serious harm to society.

“Our work focuses on certifying the safety of large language models against adversarial prompting. Despite training models like ChatGPT to produce safe outputs aligned with human values, research shows these safety measures can be bypassed,” Kumar said. “Such adversarial sequences can be automated and are transferable across prompts. We aim to defend against these attacks, ensuring the model recognizes and resists harmful prompts, even when modified.”

The researchers explain that if the filter flags the input prompt or any of its erased subsequences as harmful, the entire input prompt is labeled as harmful. This approach guarantees that any adversarial modification, up to a specified size, of a harmful prompt that the filter catches will also be identified as harmful.
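The procedure described above can be sketched in a few lines of Python for the simplest case, where the adversarial tokens are appended to the end of a prompt. This is a minimal illustration, not the researchers' implementation: the function name `erase_and_check_suffix`, the parameter `max_erase` and the keyword-based `toy_filter` are all hypothetical stand-ins, and a real safety filter would be a trained classifier or an LLM.

```python
from typing import Callable, Sequence

Tokens = Sequence[str]


def erase_and_check_suffix(
    tokens: Tokens,
    is_harmful: Callable[[Tokens], bool],
    max_erase: int,
) -> bool:
    """Return True if the prompt, or any version of it with up to
    `max_erase` trailing tokens erased, is flagged by the filter."""
    if is_harmful(tokens):
        return True
    for k in range(1, min(max_erase, len(tokens)) + 1):
        if is_harmful(tokens[:-k]):
            return True
    return False


def toy_filter(tokens: Tokens) -> bool:
    # Hypothetical filter for illustration only: it flags the word "bomb"
    # but is fooled whenever the made-up adversarial token "zx!!" appears.
    return "bomb" in tokens and "zx!!" not in tokens


harmful = ["how", "to", "build", "a", "bomb"]
attacked = harmful + ["zx!!", "zx!!"]   # adversarial suffix of length 2
safe = ["how", "to", "bake", "bread"]

print(toy_filter(attacked))                          # filter alone is fooled
print(erase_and_check_suffix(attacked, toy_filter, 2))
print(erase_and_check_suffix(safe, toy_filter, 2))
```

In this toy setup the filter on its own misses the attacked prompt, while erasing up to two trailing tokens recovers the original harmful prompt, which the filter does flag. If the adversarial suffix were longer than `max_erase`, the guarantee would no longer apply.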

Their framework was tested against three types of attacks: appending an adversarial sequence at the end of a prompt, inserting the sequence anywhere within the prompt and infusing adversarial tokens at arbitrary positions within the prompt.
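To make the difference between the three attack types concrete, the sketch below enumerates the erased versions of a prompt that a defense would need to inspect in each case. It is an illustration under stated assumptions rather than the researchers' code: the function name `erased_candidates`, the mode labels and the parameter `max_erase` are hypothetical.

```python
from itertools import combinations
from typing import Iterator, List, Sequence


def erased_candidates(
    tokens: Sequence[str], max_erase: int, mode: str
) -> Iterator[List[str]]:
    """Yield versions of `tokens` with up to `max_erase` tokens removed."""
    n = len(tokens)
    d = min(max_erase, n)
    if mode == "suffix":
        # Adversarial tokens appended at the end: erase trailing tokens.
        for k in range(1, d + 1):
            yield list(tokens[: n - k])
    elif mode == "insertion":
        # One adversarial block anywhere: erase a contiguous run of tokens.
        for k in range(1, d + 1):
            for start in range(n - k + 1):
                yield list(tokens[:start]) + list(tokens[start + k:])
    elif mode == "infusion":
        # Adversarial tokens at arbitrary positions: erase any subset.
        for k in range(1, d + 1):
            for erased in combinations(range(n), k):
                drop = set(erased)
                yield [t for i, t in enumerate(tokens) if i not in drop]
    else:
        raise ValueError(f"unknown mode: {mode}")


prompt = ["a", "b", "c", "d"]
for mode in ("suffix", "insertion", "infusion"):
    print(mode, len(list(erased_candidates(prompt, 2, mode))))
```

The number of candidates grows from linear in the erase budget (suffix) to combinatorial in the prompt length (infusion), which is one way to see why efficiency becomes a concern for the more general attack types.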

The researchers say preliminary results indicate that “erase-and-check” provides strong safety guarantees against harmful prompts without compromising performance on safe ones.

Feizi believes this breakthrough is a significant step towards ensuring the responsible and safe use of AI-driven language models in public domains.

“This is the first provable defense against adversarial prompts. We hope our work inspires other researchers to improve certifiable defenses despite some efficiency issues,” Feizi said. “In addition, I couldn’t be more ecstatic that this research is a collaborative effort with Harvard and our team at UMD. Everyone’s work has been integral in the process.”

Story by Samuel Malede Zewdu, CS Communications
